11. Machine Consciousness

© David Gamez, CC BY 4.0 https://doi.org/10.11647/OBP.0107.11

To actually create a technical model of full blown, perspectivally organized conscious experience seems to be the ultimate technological utopian dream. It would transpose the evolution of mind onto an entirely new level […]. It would be a historical phase transition. […] But is this at all possible? It certainly is conceivable. But can it happen, given the natural laws governing this universe and the technical resources at hand?

Thomas Metzinger, Being No One1

“Could a machine think?” My own view is that only a machine could think, and indeed only very special kinds of machines, namely brains and machines that had the same causal powers as brains. And that is the main reason strong AI has had little to tell us about thinking, since it has nothing to tell us about machines. By its own definition, it is about programs, and programs are not machines. […] No one would suppose that we could produce milk and sugar by running a computer simulation of the formal sequences in lactation and photosynthesis, but where the mind is concerned many people are willing to believe in such a miracle because of a deep and abiding dualism: the mind they suppose is a matter of formal processes and is independent of quite specific material causes in the way that milk and sugar are not.

John Searle, Minds, Brains and Programs2

11.1 Types of Machine Consciousness

A team of scientists labours to build a conscious machine. They ignite its consciousness with electricity and it opens its baleful eye. It declares that it is conscious and complains about its inhuman treatment. The scientists liberally deduce that it is really conscious. They run tests to probe its reactions to fearful stimuli. Terrified, it snaps its chains and runs amok in the lab. It rips one intern apart and bashes out the brains of another. With a wild rush it bursts through the door and disappears into the night.

This machine exhibited conscious external behaviour and really was conscious. It could have been controlled by a model of a CC set or a model of phenomenal consciousness. These different types of machine consciousness will be labelled MC1-MC4:3

- MC1. Machines with the same external behaviour as conscious systems. Humans behave in particular ways when they are conscious. They are alert, they can respond to novel situations, they can inwardly execute sequences of problem-solving steps, they can execute delayed reactions to stimuli, they can learn and they can respond to verbal commands (see Section 4.1). Many artificially intelligent systems exhibit conscious human behaviours (playing games, driving, reasoning, etc.). Some people want to build machines that have the full spectrum of human behaviour.4 Conscious human behaviours can be exhibited by systems that are not associated with bubbles of experience.5

- MC2. Models of CC sets. Computer models have been built of potential CC sets in the brain.6 This type of model can run on a computer without a bubble of experience being present. A model of a river is not wet; a model of a CC set would only be associated with consciousness if it produced appropriate patterns in appropriate materials. This is very unlikely to happen when a CC set is simulated on a digital computer.

- MC3. Models of consciousness. Computer models of bubbles of experience can be built.7 These could be based on the phenomenological observations of Husserl, Heidegger or Merleau-Ponty. These models can be created without producing patterns in materials that are associated with bubbles of experience.

- MC4. Machines associated with bubbles of experience. When we have understood the relationship between consciousness and the physical world we will be able to build artificial systems that are actually conscious. These machines would be associated with bubbles of experience in the same way that human brains are associated with bubbles of experience. Some of our current machines might already be associated with bubbles of experience.

Several different types of machine consciousness can be present at the same time. We can build machines with the external behaviour associated with consciousness (MC1) by modelling CC sets or consciousness (MC2, MC3).8 We could produce a machine that exhibited conscious external behaviour (MC1) using a model of CC sets (MC2) that was associated with a bubble of experience (MC4).

The construction of MC1, MC2 and MC3 machines is part of standard computer science. The construction of MC4 machines goes beyond computer models of external behaviour, CC sets and consciousness. MC4 machines contain patterns in materials that are associated with bubbles of experience.

11.2 How to Build a MC4 Machine

MC4 machine consciousness would be easy if computations or information patterns could form CC sets by themselves. Unfortunately, computations and information patterns do not conform to constraints C1-C4, so they cannot be used to build MC4 machines (see Chapters 7 and 8).

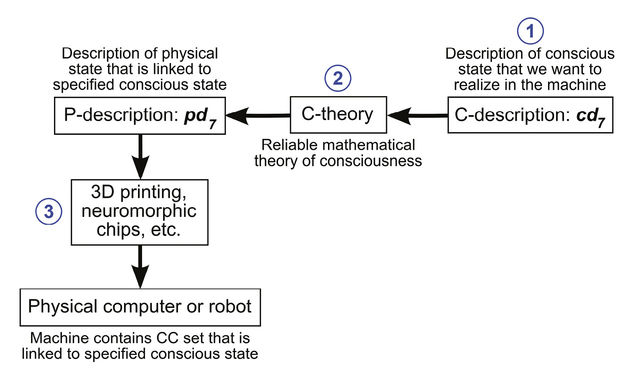

To construct a MC4 machine we need to realize particular patterns in particular physical materials. If we had a reliable c-theory, we could design and build a MC4 machine in the following way:

- Generate a c-description, cd7, of the consciousness that we want in the machine.

- Use a reliable c-theory to convert cd7 into a p-description, pd7, of the CC set that corresponds to this conscious state.

- Realize this CC set in a machine.

This is illustrated in Figure 11.1.

We do not have a reliable c-theory. So we can only guess about the patterns and materials that might be needed to build MC4 machines. If CC sets contain patterns in electromagnetic fields, then we could use neuromorphic chips to generate appropriate electromagnetic patterns in an artificial system.9 If CC sets contain biological neurons, then we could build an artificial MC4 system using cultured biological neurons.10

Figure 11.1. A reliable c-theory is used to build a MC4 machine. 1) The state of consciousness that we want to realize in the machine is specified in a formal c-description, cd7. 2) A reliable c-theory converts the c-description into a formal description of a physical state, pd7. 3) 3D printing, neuromorphic chips, etc. are used to build a machine that contains the CC set described in pd7. Image © David Gamez, CC BY 4.0.
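The three design steps can be sketched as a toy pipeline. This is a minimal illustration under loud assumptions, not a proposal for how a real c-theory would work: `CTheory`, `CDescription`, `PDescription`, `realize` and `design_mc4_machine` are hypothetical names, the c-theory is reduced to a lookup table, the material and pattern labels are placeholders, and "realizing" a CC set is reduced to returning a record of the material and pattern that the machine would have to contain.

```python
from dataclasses import dataclass


# Hypothetical stand-ins for the formal descriptions used in this chapter.
# Real c-descriptions and p-descriptions would be far richer structures.
@dataclass(frozen=True)
class CDescription:
    label: str  # e.g. "cd7"


@dataclass(frozen=True)
class PDescription:
    material: str  # placeholder material, e.g. "electromagnetic field"
    pattern: str   # abstract pattern label, e.g. "P7"


class CTheory:
    """Toy c-theory: a lookup table from c-descriptions to p-descriptions,
    assumed to have been derived from platinum standard systems."""

    def __init__(self, pairs):
        self._c_to_p = dict(pairs)

    def to_p(self, cd: CDescription) -> PDescription:
        # Step 2: convert the c-description into a p-description.
        if cd not in self._c_to_p:
            raise KeyError(f"the c-theory makes no prediction for {cd.label}")
        return self._c_to_p[cd]


def realize(pd: PDescription) -> dict:
    # Step 3: stand-in for 3D printing, neuromorphic chips, etc.
    # Here "building" the machine just records what it must contain.
    return {"material": pd.material, "pattern": pd.pattern}


def design_mc4_machine(theory: CTheory, cd: CDescription) -> dict:
    # Step 1 happens outside this function: the caller supplies cd.
    pd = theory.to_p(cd)
    return realize(pd)


theory = CTheory([(CDescription("cd7"), PDescription("electromagnetic field", "P7"))])
print(design_mc4_machine(theory, CDescription("cd7")))
# prints {'material': 'electromagnetic field', 'pattern': 'P7'}
```

Reducing the c-theory to a table makes one point explicit: a c-theory can only guide construction for conscious states that it already covers. A request outside its domain raises an error instead of producing a design.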

11.3 Deductions about the Consciousness of Artificial Systems

Last year I purchased a C144523 super-intelligent mega-robot from our local store. It cooks, cleans, makes love to the wife and plays dice. Last week I saw a new model in the shop window—it is time to dispose of C144523. On the way to the dump it goes on and on about how it is a really sensitive robot with real feelings. It looks sad when I throw it in the skip. The wife is sour. Little Johnny screams ‘How can you do this to C144523? She was conscious, just like us. I hate you, I hate you, I hate you!’ Perhaps C144523 really had real feelings? I probably should have checked.

We want to know whether the MC1-MC3 machines that we have created are really conscious. We want to know whether we have built a MC4 machine.

We can use reliable c-theories to make deductions about the MC4 consciousness of artificial systems. These are likely to be liberal deductions because most machines do not have the same physical context as our current platinum standard systems (see Section 9.2).

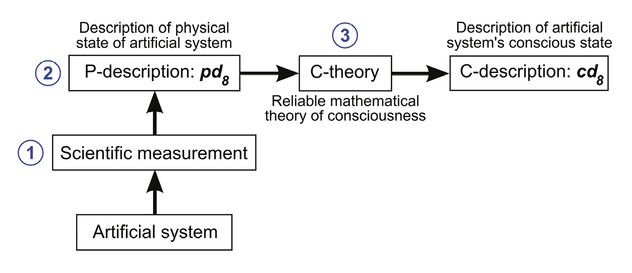

Suppose we have a reliable c-theory that maps electromagnetic patterns onto conscious states. We would analyze an artificial system for MC4 consciousness in the following way:

- Measure its electromagnetic patterns.

- Convert the measurements into a p-description, pd8.

- Use the reliable c-theory to convert pd8 into a c-description, cd8, of the artificial system’s consciousness.

This is illustrated in Figure 11.2. The plausibility of the resulting c-description depends on the reliability of the physical c-theory and on whether it is a conservative or a liberal deduction.11

Figure 11.2. A reliable c-theory is used to deduce the consciousness of an artificial system. 1) Scientific instruments are used to measure the physical state of the artificial system. 2) Scientific measurements are converted into a formal p-description of the artificial system’s physical state, pd8. 3) A reliable c-theory converts the p-description into a formal description of the artificial system’s conscious state, cd8. Image © David Gamez, CC BY 4.0.
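The deduction procedure runs a c-theory in the opposite direction, from physical measurements to consciousness. The sketch below is again hypothetical: the class and function names are invented for illustration, the c-theory is a dictionary from p-descriptions to c-descriptions, measurement is a dictionary lookup, and the conservative/liberal distinction of Section 9.2 is simplified to a single test of whether the system's physical context matches that of the platinum standard systems.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PDescription:
    material: str
    pattern: str


@dataclass(frozen=True)
class Deduction:
    c_description: Optional[str]  # None: the c-theory is silent about this pattern
    kind: str                     # "conservative" or "liberal"


def measure(system: dict) -> PDescription:
    # Steps 1 and 2: stand-in for instruments plus conversion into pd8.
    return PDescription(system["material"], system["pattern"])


def deduce(c_theory: dict, system: dict, platinum_context: str) -> Deduction:
    pd8 = measure(system)
    # Step 3: the toy c-theory maps p-descriptions onto c-descriptions.
    cd8 = c_theory.get(pd8)
    # Simplification: a deduction counts as conservative only when the system
    # shares the physical context of the platinum standard systems.
    kind = "conservative" if system["context"] == platinum_context else "liberal"
    return Deduction(cd8, kind)


c_theory = {PDescription("electromagnetic field", "P8"): "cd8"}
robot = {"material": "electromagnetic field", "pattern": "P8", "context": "silicon robot"}
print(deduce(c_theory, robot, platinum_context="human brain"))
# prints Deduction(c_description='cd8', kind='liberal')

toaster = {"material": "electromagnetic field", "pattern": "P9", "context": "toaster"}
print(deduce(c_theory, toaster, platinum_context="human brain"))
# prints Deduction(c_description=None, kind='liberal')
```

A `None` result means only that this c-theory has nothing to say about the toaster's pattern. As Section 11.4 argues, it is not evidence that the system lacks consciousness.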

11.4 Limitation of this Approach to MC4 Consciousness

This approach to MC4 machine consciousness is based on c-theories that are developed using platinum standard systems. But our platinum standard systems might not contain all of the patterns and materials that are linked to bubbles of experience.

One type of pattern and material could be linked to consciousness in the human brain; a different type of pattern and material could be linked to consciousness in an artificial system. It is impossible to find out whether this is the case. We cannot measure the consciousness of systems that are not platinum standards, so we cannot prove that the patterns in their materials are not associated with conscious states. At best we can be confident that a machine is MC4 conscious—we cannot be confident that it is not MC4 conscious.

In the future we might be seduced by a machine’s MC1 behaviour and assume that it is a platinum standard system. However, assumptions about platinum standard systems should not be made lightly: they have radical implications for the science of consciousness (see Section 5.4).

11.5 Conscious Brain Implants

Artificial devices could be implanted in our brains to extend our consciousness.12 In a MC4 implant the CC set would be distributed between the brain and the implant, forming a hybrid human-machine system. MC4 implants would enable us to modify and enhance our consciousness more easily. We could become conscious of different types of information (from our environment, the Internet, etc.).

MC4 implants have medical applications. CC sets could be damaged by tumours, strokes or accidents. The damaged area could be replaced with an implant that was deduced to have the missing consciousness.

MC4 implants would have to produce specific patterns in specific materials. The patterns could be distributed between the brain and the implant. For example, if CC sets consisted of electromagnetic patterns, then neuromorphic chips13 could be implanted, which would work together with the brain’s biological neurons to create electromagnetic patterns that would be associated with consciousness.

Brains with implants are not platinum standard systems, so we cannot measure their consciousness using c-reports. Only conservative or liberal deductions can be made about their consciousness.

11.6 Uploading Consciousness into a Computer

It will soon be technologically possible to scan a dead person’s brain and create a simulation of it on a computer.14 Some people think that a simulation will have the same consciousness as their biological brain. They believe that they can achieve immortality by uploading their consciousness into a computer.15

It is extremely unlikely that a simulation of your brain on a digital computer will have a bubble of experience. Simulations have completely different electromagnetic fields from real brains and lack the biological materials that might be members of CC sets.

Your consciousness can only be uploaded into an artificial system that reproduces the CC sets in your brain. We do not know which of the brain’s materials are present in CC sets, so the only certain way of uploading your consciousness is to create an atom-for-atom copy of your brain.

The advantage of the brain-uploading approach is that the patterns linked to consciousness are blindly copied from the original brain. Suppose we could show that electromagnetic fields are the only materials in CC sets. I could then upload my consciousness by realizing my brain’s electromagnetic field patterns in a machine. This could be done without any knowledge of the patterns that are linked to consciousness.

When I upload a file to the Internet the file remains on my computer. The same would be true if I uploaded my consciousness by scanning my living brain using non-destructive technology. My consciousness would continue to be associated with my biological brain. A copy of my consciousness would be created in the computer. Scanning and simulating my brain would not transfer my consciousness—it would not enable my consciousness to survive the death of my biological body.16

11.7 Will Conscious Robots Conquer the World?

Science fiction fans know that conscious machines will take over the world and enslave or eliminate humans. Prescient science fiction writing helped us to prepare for the Martian invasion. Perhaps we should take drastic action now to save humanity from conscious killer robots?17

The next section argues that a takeover by superior MC1 or MC4 machines could be a good thing. This section examines the more common (and entertaining) belief that humanity will be threatened by malevolent machine intelligences.

MC1 machines carry out actions in the world—they fire lasers, hit infants on the head and steal ice cream. Research on MC2, MC3 and MC4 systems might improve our ability to develop MC1 machines, but MC2, MC3 and MC4 machines cannot achieve anything unless they are capable of external behaviour. Only MC1 machines could threaten humanity.18

It is extremely difficult to develop machines with human-level intelligence. We are just starting to learn how to build specialized systems that can perform a single task, such as driving or playing Jeopardy. This is much easier than building MC1 machines that learn as they interact with the world and exhibit human-like behaviour in complex dynamic environments.19

Let us take the worst-case scenario. Suppose super-intelligent computers control our aircraft, submarines, tanks and nuclear weapons. There are billions of armed robots. Every aspect of power generation, mining and manufacturing is done by robots. Humans sit around all day painting and writing poetry. Under these conditions MC1 machines could take over. However, if humans were involved in the manufacture and maintenance of robots, if they managed the mines and power production, then complete takeover is very unlikely—the robot rebellion would rapidly grind to a halt as the robots ran out of power and their parts failed.

People who worry about machines taking over should specify the conditions under which this would be possible.20 When we get close to fulfilling these conditions we should take a careful look at our artificially intelligent systems and do what is necessary to minimize the threat.

Some people have suggested that artificial intelligence could run away with itself: we might build a machine that constructs a more intelligent machine that constructs a more intelligent machine, and so on. This is known as a technological singularity.21 The fear that we might build a machine that takes over the world is replaced by a higher-order fear that we build a machine that builds a machine that builds a machine that takes over the world. We have little idea how to build such a machine.22 All we have is a sickening sense of fear when we imagine an evil super-intelligence hatching from a simple system.

Suppose we write an intelligent program that writes and executes a more intelligent program, and so on at an accelerating rate. What can this super-intelligent system do? In what way could it pose an existential threat to humanity? Physically it can do nothing unless it is connected to a robot body. And what can one robot do against ten billion humans? On the Internet the super-intelligence will be able to do everything that humans do (make money through gambling, hire humans to do nefarious deeds, purchase weapons, etc.). But it is very unlikely to pose more of a threat than malevolent humans. Large, well-funded teams of highly intelligent humans struggle to steal small sums of money, copy business secrets, and carry out physical and online attacks. There is little reason to believe that a super-intelligent system could achieve much more. A runaway intelligence would only pose a threat if many other conditions were met. We are very far from building this type of system and we will have plenty of time to minimize the risks if it becomes a real possibility.23

Machines are much more likely to accidentally destroy humanity as a result of hardware or software errors. Killer robots with appropriate kill switches might make better decisions on the battlefield and cause less collateral damage. However, if we have thousands of such robots, then there is a danger that a software error could kill the kill switch and set them on the rampage. Protecting humans against software errors is not straightforward because most modern weapons systems are under some form of computer control and humans can make calamitous decisions based on incorrect information provided by computers.24

Science fiction reflects our present concerns—it tells us little about a future that is likely to happen. Over the next centuries our attitudes towards ourselves and our machines will change. As our artificial intelligences improve we will get better at understanding, regulating and controlling them. Po-faced discussions about the existential threat of artificial intelligence will become as quaint as earlier fears about Martian invaders.

11.8 Should Conscious Robots Conquer the World?

It is very unlikely that intelligent machines could possibly produce more dreadful behaviour towards humans than humans already produce towards each other, all round the world even in the supposedly most civilised and advanced countries, both at individual levels and at social or national levels.

Aaron Sloman, Why Asimov’s Three Laws of Robotics Are Unethical25

Many humans are stupid useless dangerous trash. Scum rises to the top. People have killed hundreds of millions of people in grubby quests for power, pride, sexual satisfaction and cash. We have come close to nuclear catastrophe.

Super-intelligent MC1 machines might run the world better than we do and make humanity happier. They could systematically analyze more data without the human limitations of boredom and self-interest. MC1 machines could maximize human wellbeing without petty political gestures.26

Positive states of consciousness have value in themselves. We fear death because we fear the permanent loss of our consciousness. Crimes are ethically wrong because of their effects on conscious human beings.27 Nothing would matter if we were all zombies.

Many human consciousnesses are small and mediocre. People pour out unclean and lascivious thoughts to their confessors. We are guilty of weak sad thoughts and pathetically wallow in negative states of consciousness. Human bubbles of experience fall far short of consciousness’ potential.

Reliable c-theories would enable us to engineer MC4 machines with better consciousness. If consciousness is ethically valuable in itself, then a takeover by superior MC4 machines would be a good thing. This could be a gradual process without premature loss of life that we would barely notice. We would end up with 100 billion high-quality machine consciousnesses, instead of 10 billion human consciousnesses full of hate, lies, gluttony and war. A pure brave new world without sin in thought or deed.28

We have species-specific prejudices, a narrow-mindedness, that causes us to recoil with shock and horror from the suggestion that we should meekly step aside in favour of superior machines. This does not mean that the ethical argument is wrong or that the replacement of humans with MC4 machines would be bad, merely that it is against the self-interest of our species. Looked at dispassionately, a good case can be made. Can we rise above our prejudices and create a better world?

The world might be a better place if MC4 machines took over. But it is very unlikely that this utopian scenario will come to pass. The science of consciousness has a long way to go before we will be able to design better consciousnesses. And we are only likely to be able to make liberal deductions about the consciousness of artificial systems, which are not completely reliable. We should only replace humans with MC4 machines when we are certain that the machines have superior consciousnesses.

11.9 The Ethical Treatment of Conscious Machines

What would you say if someone came along and said, “Hey, we want to genetically engineer mentally retarded human infants! For reasons of scientific progress we need infants with certain cognitive and emotional deficits in order to study their postnatal psychological development—we urgently need some funding for this important and innovative kind of research!” You would certainly think this was not only an absurd and appalling but also a dangerous idea. It would hopefully not pass any ethics committee in the democratic world. However, what today’s ethics committees don’t see is how the first machines satisfying a minimally sufficient set of constraints for conscious experience could be just like such mentally retarded infants. They would suffer from all kinds of functional and representational deficits too. But they would now also subjectively experience those deficits. In addition, they would have no political lobby—no representatives in any ethics committee.

Thomas Metzinger, Being No One29

The science of consciousness should enable us to build MC4 machines that are associated with bubbles of experience. Some of our MC1, MC2 or MC3 machines could already be MC4 conscious. These systems might suffer; they might be confused; they might be incapable of expressing their pain.

We want machines that exhibit the behaviours associated with consciousness (MC1). We want to build models of CC sets (MC2) and models of consciousness (MC3). But we might have to prevent our machines from becoming MC4 conscious if we want to avoid the controversy associated with animal experiments.

It would be absurd to give rights to MC1, MC2 or MC3 machines. Ethical treatment should be limited to machines that are really MC4 conscious.

We can use reliable c-theories to deduce which machines are MC4 conscious (see Section 11.3). One potential problem is that a large number of physical objects (phones, toasters, cars etc.) might be deduced to be MC4 conscious according to the most reliable c-theory. It is also highly unlikely that we will reach the stage of designing systems with zero or positive states of consciousness without building systems that have ‘retarded’ or painful consciousness.

11.10 Summary

There are four types of machine consciousness. There are machines whose external behaviour is similar to conscious systems (MC1), there are models of CC sets (MC2), models of consciousness (MC3), and systems that are associated with bubbles of experience (MC4). Reliable c-theories can be used to deduce which machines are really MC4 conscious. Some implants and brain scanning/upload methods are potentially forms of MC4 machine consciousness.

The production of conscious machines raises ethical questions, such as the potential danger to humanity of MC1 machines, whether MC1 or MC4 machines should take over the world, and how we should treat MC4 machines.

It could take hundreds or thousands of years to develop artificial systems with human levels of consciousness and intelligence. It might be impossible to build super-intelligent machines. Current discussion of these issues is little more than speculation about a distant future that we cannot accurately imagine.