19 Evaluation of an Online Learning Environment

© L. Ekenberg, K. Hansson, M. Danielson, G. Cars et al., CC BY-NC-ND 4.0 http://dx.doi.org/10.11647/OBP.0108.19

This chapter is based on Kivunike, F., Ekenberg, L., Danielson, M. and Tusubira, F.F. Towards a Structured Approach for Evaluating the ICT Contribution to Development, The International Journal on Advances in ICT for Emerging Regions. 7(1). pp. 1–15. 2014.

The evaluation and selection of initiatives in ICT for development (ICT4D) is a complex decision problem that would benefit from the application of MCDA techniques. Besides facilitating multidimensional and multi-stakeholder assessments, MCDA provides a means for handling uncertainty arising from incomplete and vague information. This is a key requirement for the evaluation of the contribution of ICT to development, which relies on stakeholders’ value judgements, perceptions and beliefs about how ICT has affected people’s lives. In addition, MCDA techniques offer a structured process for evaluating development outcomes as an alternative to the predominantly descriptive, and often difficult to report, ICT4D evaluation approaches. They also relax the requirement for quantitative measures, which call for data that is in some cases inaccessible and may be more taxing for stakeholders to provide.

As a structured decision making process, the MCDA methodology typically consists of three stages: (1) information gathering or problem structuring, which involves defining the decision problem to be addressed as well as the criteria and, where necessary, the alternatives; (2) modelling stakeholder preferences, in which the structured decision problem (that is, the criteria and alternatives) is modelled using a decision support tool; and (3) evaluation and comparison of alternatives. While the application of MCDA techniques to decision making situations in developing countries is appropriate, it is challenged by cultural, organisational, and infrastructural barriers, among other factors. Examples of such barriers include low literacy levels, lack of reliable electricity supply, and uneven access to ICT infrastructure, as well as resistance from elites who are afraid of losing their political position. This calls for adaptation of the MCDA approach and process in a way that takes into account the contextual limitations of the developing country and the specific ICT4D evaluation exercise. This chapter illustrates how an MCDA technique can be applied to the evaluation of an ICT4D initiative. It specifically applies the technique using a subset of the proposed criteria to evaluate the impact of an online learning environment on students’ access to learning.

Evaluating the Impact of e-Learning Environments on Students

The Makerere University E-Learning Environment (MUELE) is one of several initiatives aimed at leveraging faculty effectiveness and improving access to learning at Makerere University. MUELE is a learning management system (LMS) based on Moodle that has been in existence since 2009 and has seen steady growth in users over the years. Active courses increased from 253 in 2011 to 456 in 2013, while the number of users increased from 20,000 in 2011 to 45,000 in 2013. Despite this progress, and even after lecturers were trained in online course authoring and delivery, MUELE is mostly used as a repository of course information. This has been attributed to attitudes towards the adoption of e-learning, and to lecturers’ concerns about the increase in workload resulting from large student numbers and about increased course preparation time. Consequently, this illustrative study sought to establish whether MUELE has improved students’ access to learning. More specifically, we sought to establish whether MUELE contributed to improved (access to) learning, and to assess how the initiative performed on the different output and outcome indicators, highlighting the most significant outcomes. The criteria consist of the output and outcome indicators relevant for the evaluation of the impact of MUELE on access to learning. They are a subset of the criteria suggested in Chapter 8, specifically those aimed at evaluating improved access to formal and/or non-formal education. The criteria also include the contextual factors known to have an effect on the use of ICT to support learning.

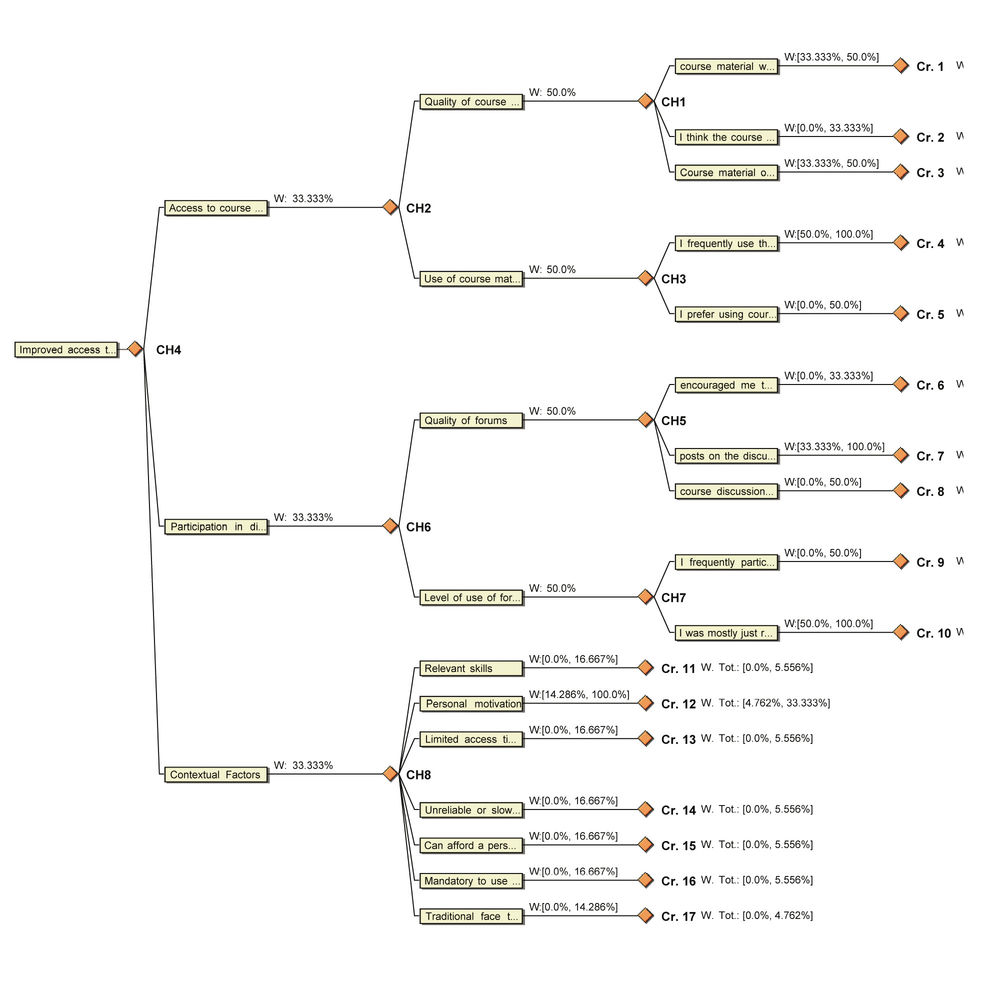

Figure 1. Output evaluation model.

The proposed criteria were organised into two decision models: the outputs model and the outcomes model. Using the output model (see Figure 1) we sought to establish the perception of students on whether MUELE had improved their access to learning. Using the outcomes model we sought to measure the actual improvement in student learning. The contextual factors had an influence on both models, either facilitating or restricting the improved access to learning. Preference modelling and elicitation considered two aspects: (1) evaluating the relative importance of criteria (eliciting weights); and (2) evaluating the initiative’s performance against the criteria (eliciting scores).

Weight Elicitation: The importance of a criterion is expressed by assigning it a weight that reflects its importance relative to the other criteria, and this can be achieved through various methods. Ideally, weight elicitation should be performed for each level of the decision tree hierarchy. This study applied the rank-order approach, in which criteria were ranked from most to least important, with equal ranks permitted, and weights were assigned accordingly. Rank-ordering was performed for the outcome model and for the bottom-level criteria of the output model (the output indicators). Equal weights were assumed for the other levels of the hierarchy, i.e. outputs and output indicator categories. The weights were developed through consultation with experts in the field (lecturers and MUELE administrators), who assessed the relative importance of the criteria.
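To make the rank-order idea concrete, the following is a minimal sketch of one common way to turn a ranking with ties into normalised weights, the rank-sum rule. This is an assumption for illustration only: the study itself entered rankings into the decision tool as comparative statements rather than as point weights, and the criterion names and ranks below are hypothetical.

```python
def rank_sum_weights(ranks):
    """Convert ranks (1 = most important, ties allowed) into weights summing to 1.

    Rank-sum rule: a criterion at rank r among k rank levels gets a raw
    weight of k + 1 - r, so tied criteria receive identical weights.
    """
    levels = max(ranks.values())
    raw = {name: levels + 1 - r for name, r in ranks.items()}
    total = sum(raw.values())
    return {name: value / total for name, value in raw.items()}


# Hypothetical ranking supplied by the experts (not data from the study).
ranks = {
    "course material quality": 1,
    "level of use of course material": 1,  # tied with the criterion above
    "forum participation": 2,
    "forum post quality": 3,
}
weights = rank_sum_weights(ranks)

for name, w in weights.items():
    print(f"{name}: {w:.3f}")
```

Other surrogate-weight rules (for example rank reciprocal or rank-order centroid) could be substituted by changing how `raw` is computed.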

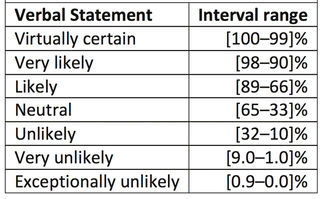

Eliciting Scores: This involved evaluating perceptions of how MUELE had performed on the various criteria. Responses were elicited from students who had used MUELE for at least a year. Verbal-numerical scales, which have been applied in various domains, as well as binary (yes/no) scales were used for the elicitation. The verbal-numerical scale is a combination of verbal expressions (e.g. unlikely, strongly agree etc.) and the corresponding numerical intervals (see Table 1). Since elicitation involved vague and imprecise value judgements of how e-learning had improved learning, it was appropriate to adopt a verbal-numerical scale. While the verbal responses enabled stakeholders to state their preferences in a vague manner, the corresponding numerical ranges were applied for representation and analysis in the decision analysis tool. Studies have established that people vary in how they express assessments in words or in numbers; a combined verbal-numerical scale accommodates both and simplifies elicitation.

Table 1. Example of a verbal-numerical scale.

Since multiple responses were elicited from the students and experts, the elicited information had to be aggregated. The aggregation approach depended on the nature of the response scale. For example, the simple weighted sum approach was applied to aggregate the students’ responses obtained from the verbal-numerical scale. This involved assuming equal weights for each stakeholder and calculating the expected value. The simple weighted sum approach has been used for the aggregation of imprecise values because it has been found to be an effective aggregation approach. Since the ranking and binary (yes/no) scales are ordinal, the mode was applied as the preferred measure of central tendency to obtain the aggregate value(s) for the analysis.
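The two aggregation rules described above can be sketched as follows. This is an illustrative sketch under stated assumptions: the verbal labels and numerical intervals in `SCALE` are hypothetical and are not the values of Table 1, and the sample responses are invented.

```python
from statistics import mode

# Hypothetical verbal-numerical scale; labels and intervals are assumptions.
SCALE = {
    "strongly disagree": (0.0, 0.2),
    "disagree": (0.2, 0.4),
    "neutral": (0.4, 0.6),
    "agree": (0.6, 0.8),
    "strongly agree": (0.8, 1.0),
}


def aggregate_intervals(responses):
    """Equal-weight weighted sum: average the lower and upper bounds separately."""
    n = len(responses)
    low = sum(SCALE[r][0] for r in responses) / n
    high = sum(SCALE[r][1] for r in responses) / n
    return low, high


def aggregate_ordinal(responses):
    """Use the mode as the aggregate for ranking or yes/no responses."""
    return mode(responses)


# Invented responses from four students on one criterion.
verbal = ["agree", "strongly agree", "agree", "neutral"]
interval = aggregate_intervals(verbal)

# Invented yes/no responses on another criterion.
binary = aggregate_ordinal(["yes", "yes", "no"])

print(interval, binary)
```

Averaging the bounds separately keeps the aggregate as an interval, so the imprecision in the individual responses is carried forward into the analysis rather than collapsed to a single number.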

Results: Analysis and Evaluation

In this study the DecideIT decision support tool was used to analyse and evaluate the decision problem. DecideIT is based on multi-attribute value theory and supports both precise and imprecise information. It accepts imprecise data in the form of interval values, comparative statements or weights, as well as precise values. The rank-ordered values depicting the relative importance of criteria were modelled as comparative statements, while the student perceptions obtained through the verbal-numerical and binary (yes/no) scales were modelled as intervals and precise values respectively. Evaluation was performed for each of the models (outputs and outcomes) and the results are discussed below.
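As a rough illustration of how interval scores propagate through an additive multi-attribute value model, the sketch below computes the range of the overall value for fixed weights. It is a simplification and not a description of DecideIT's algorithm: the weights are treated as precise numbers, comparative statements are not modelled, and all criterion names and figures are hypothetical.

```python
def value_interval(weights, scores):
    """Return (low, high) for the additive value sum(w_i * v_i).

    weights: criterion -> precise weight (assumed to sum to 1)
    scores:  criterion -> (low, high) interval; a precise value has low == high
    """
    low = sum(weights[c] * scores[c][0] for c in weights)
    high = sum(weights[c] * scores[c][1] for c in weights)
    return low, high


# Hypothetical inputs: two interval scores and one precise (yes/no) score.
weights = {"materials": 0.5, "forums": 0.3, "used_muele": 0.2}
scores = {
    "materials": (0.6, 0.8),
    "forums": (0.2, 0.6),
    "used_muele": (1.0, 1.0),  # "yes" encoded as the precise value 1.0
}

low, high = value_interval(weights, scores)
print(f"overall value lies in [{low:.2f}, {high:.2f}]")
```

Because the weights are non-negative, the lowest overall value is reached when every score is at its lower bound and the highest when every score is at its upper bound.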

Respondent Demographics

Eight experts, four male and four female, were consulted on the ranking of importance of the indicators used to evaluate the impact of an e-learning environment on improved student learning. Seven were lecturers and one was an administrator in charge of MUELE. Twenty students, seventeen male and three female, participated in the evaluation of the impact of MUELE on improved access to learning. With the exception of two students in their second year and three who had completed their studies, the majority (15) were in their final year of study, and had used MUELE for an overall period of two to four years. Half of the participants (10) used it two or three days a week, seven used it almost every day, two rarely used it and one used it once a week. Finally, fourteen of the student participants were aged 16 to 25 years, and the remaining six were aged 26 to 35. Clearly the sample is not sufficiently representative of the student population that uses MUELE; however, it was sufficient to illustrate the structured evaluation process.

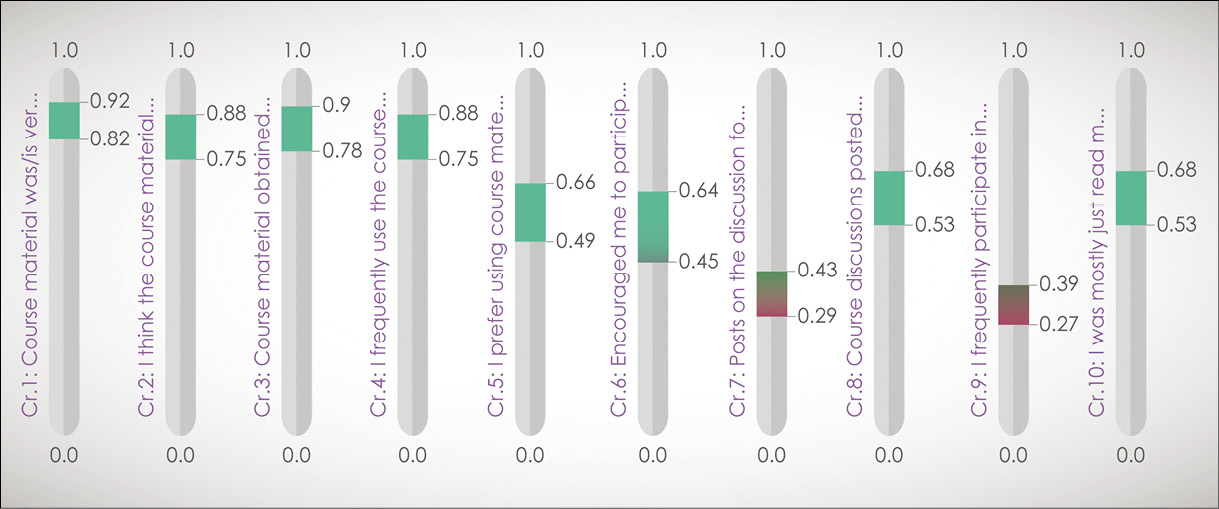

Value Profiling: This provides an assessment of how the outputs (evaluated in terms of output indicators) have performed in meeting the overall objective. It assesses the contribution or relevance of the outputs to the overall objective. In this case the quality and level of use of course material are the most significant contributors to improvements in accessing learning materials, while the contribution of participation in online discussions is average. Finally, satisfaction with the quality of discussion forum posts is the smallest contributor to improved access to learning (Figure 2). Evidently MUELE is mostly used as a repository of course materials, as previously established.

Figure 2. Performance of individual outputs on improved access to learning.

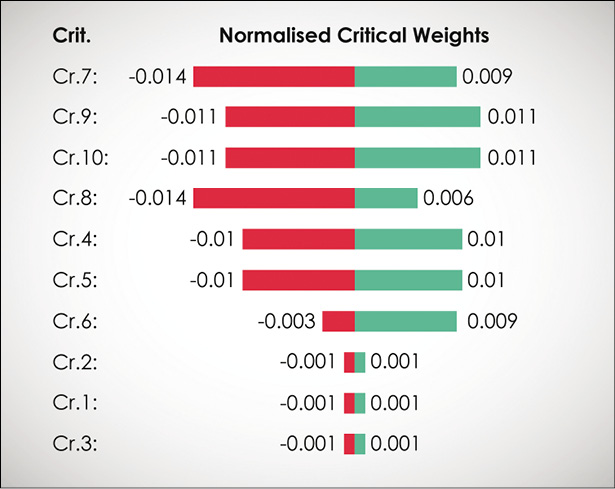

Figure 3. Critical outputs in the realisation of student learning.

Tornado Graphs: These facilitate the identification of the critical issues that have the highest impact on the expected value (Figure 3). The output indicators that contributed least in the value profiling analysis above, i.e. participation in the discussion forums (Cr.7, Cr.9, Cr.10, Cr.8), were the most critical aspects affecting the expected value measure. On the other hand, the high contributors, i.e. the quality and level of use of course material, had the least impact on the expected value. Such information may challenge decision-makers to develop strategies for improving the current initiative or to streamline the development of similar initiatives in future. For example, establishing that participation in discussion forums is a critical aspect in realising improved learning through MUELE would challenge the lecturers to engage the students actively in this activity, or to investigate further why this is an important aspect.
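The logic behind a tornado graph can be sketched with a simple one-at-a-time sensitivity analysis: each criterion is moved across its score interval while the others are held at their midpoints, and the criteria are sorted by the resulting swing in the overall value. This is an assumed, simplified procedure for illustration and not DecideIT's implementation; the weights and intervals are hypothetical.

```python
def tornado(weights, scores):
    """Return (base_value, bars), with bars sorted by decreasing swing.

    Each bar is (criterion, value_at_low, value_at_high), obtained by moving
    one criterion across its interval while the others stay at their midpoints.
    """
    mid = {c: (lo + hi) / 2 for c, (lo, hi) in scores.items()}
    base = sum(weights[c] * mid[c] for c in weights)
    bars = []
    for c, (lo, hi) in scores.items():
        at_low = base + weights[c] * (lo - mid[c])
        at_high = base + weights[c] * (hi - mid[c])
        bars.append((c, at_low, at_high))
    bars.sort(key=lambda bar: bar[2] - bar[1], reverse=True)
    return base, bars


# Hypothetical inputs: forums have a lower weight but a much wider interval.
weights = {"materials": 0.5, "forums": 0.3, "used_muele": 0.2}
scores = {
    "materials": (0.7, 0.8),
    "forums": (0.2, 0.8),
    "used_muele": (1.0, 1.0),
}

base, bars = tornado(weights, scores)
for name, at_low, at_high in bars:
    print(f"{name}: {at_low:.3f} to {at_high:.3f}")
```

In this invented example the forum criterion tops the chart despite its lower weight, because its wide interval leaves the most room to move the overall value. This mirrors how an indicator that contributes little in value profiling can still be the most critical one in a tornado graph.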

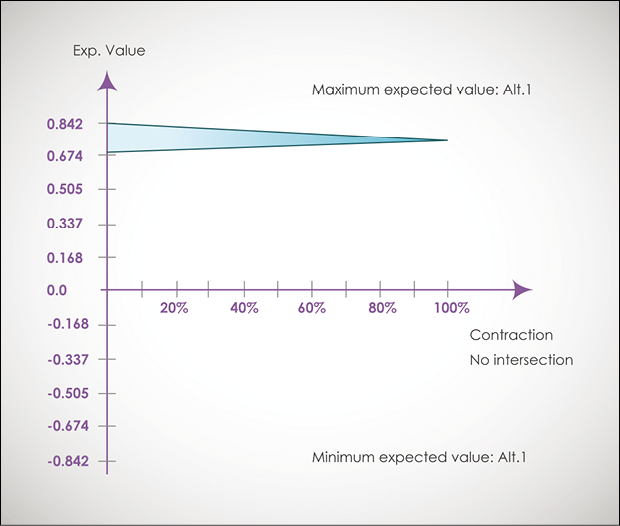

Expected Value Graph: The expected value interval of the outputs indicates the extent to which students perceived that access to MUELE had improved access to learning (Figure 4). Based on the outputs, MUELE was perceived to have a fairly high potential for improving access to learning, with a very limited chance of failure. Within this illustrative sample, this supports the potential of ICT to improve access to learning in this particular context.

Figure 4. Expected value of the outputs’ contribution to improved access to learning.

Figure 5. Expected value graph evaluating the outcomes’ contribution to improved access to learning.

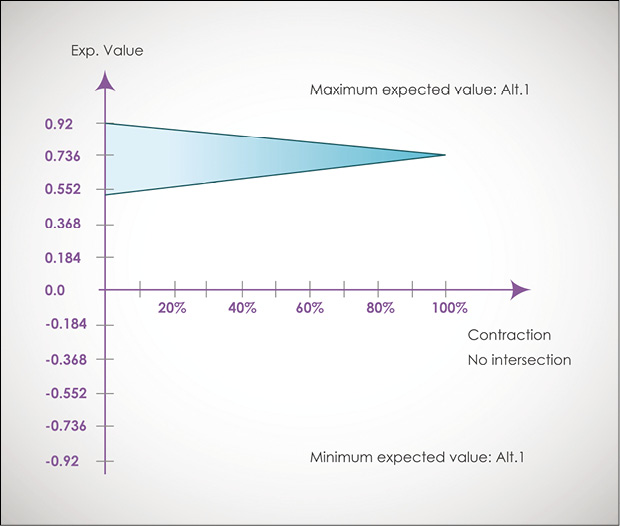

Outcome Model Evaluation

Expected Value Graph: This depicts the expected value interval, with the focal point of all interval statements (the 100% contraction value) at 0.73 (Figure 5). This implies that the different outcomes derived from MUELE effectively contributed to improved access to student learning.

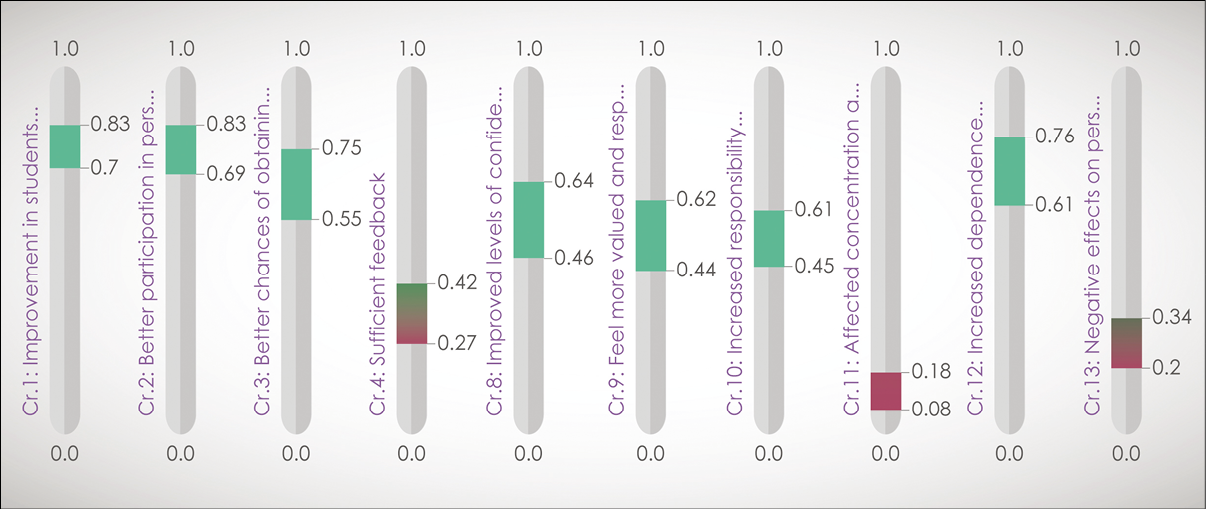

Value Profiling: The outcomes to which MUELE most significantly contributed were improvements in student learning, facilitation of student participation in personal learning, and a better chance of obtaining employment (Figure 6). There was an average effect on the psychological aspects, i.e. improved levels of confidence and whether people felt more valued or respected. There was however a low chance that MUELE had a significant negative impact such as affecting concentration or self-discipline, as well as personal health. On the other hand, there was a high chance that access to MUELE increased student dependence on computers.

Figure 6. Performance of individual outcomes in terms of outcome indicators.

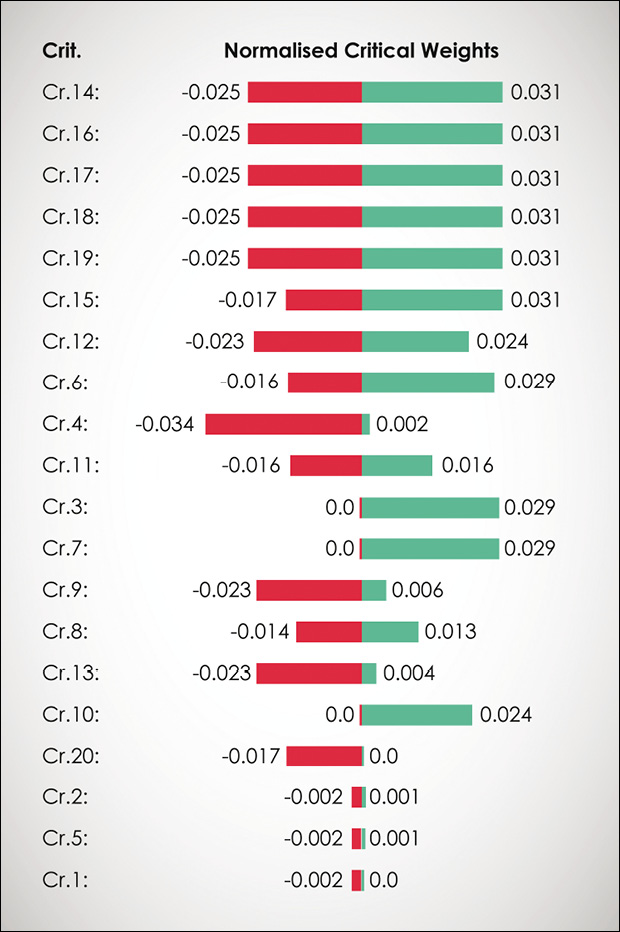

Tornado Graphs: The contextual factors, i.e. relevant skills, limited access to computers, unreliable or slow internet connection, ability to afford a personal computer, as well as the mandatory requirement to use MUELE, were the most critical aspects affecting the realisation of improved access to student learning (Figure 7). The difference between the factors affecting the realisation of outputs and those affecting outcomes is essential for mid-term evaluation, helping implementers address the identified gaps and ensure the success of the initiative.

Figure 7. Critical outputs in the realisation of student learning.

Conclusion

As is seen from the results above, the aim in such an analysis is not necessarily to obtain an aggregated value explaining the overall performance of an initiative. The focus is on facilitating a structured approach to explaining various aspects, such as how an initiative performs on different outcomes or the most critical factors affecting the realisation of the overall objective. It is important to note that while the findings in this illustrative example may not be representative of the status of e-learning at Makerere University, they are a good illustration of the evaluation process. For example, the realisation that contextual factors are an essential aspect in meeting the initiative goal will shift the focus from just providing the e-learning environment to addressing the most critical contextual factors. Furthermore, the low performance of discussion forums will probably encourage further investigation into the pedagogical requirements that would integrate forums into the students’ learning process.

The MCDA tool provides a rich, detailed and structured assessment of the different factors, which warrants further investigation into its use as an ICT4D evaluation approach. The results indicate that such a structured approach can facilitate an adequate assessment of the performance of a development initiative as well as of the most critical factors influencing the attainment of the development goals. The model does not, however, explicitly address the unintended benefits or negative consequences that may arise in any development initiative.

Further reading

Kivunike, F., Ekenberg, L., Danielson, M. and Tusubira, F.F. Towards a Structured Approach for Evaluating the ICT Contribution to Development. International Journal on Advances in ICT for Emerging Regions. 7(1), pp. 1–15. 2014.

Kivunike, F., Ekenberg, L., Danielson, M. and Tusubira, F. Criteria for the Evaluation of the ICT Contribution to Social and Economic Development. Sixth Annual SIG GlobDev Pre-ICIS Workshop, Milan, Italy. 2013.

Talantsev, A., Larsson, A., Kivunike, F. and Sundgren, D. Quantitative Scenario-Based Assessment of Contextual Factors for ICT4D Projects: Design and Implementation in a Web Based Tool, in Á. Rocha, A.M. Correia, F.B. Tan and K.A. Stroetmann (Eds.), New Perspectives in Information Systems and Technologies 1(275), pp. 477–490. Cham: Springer. 2014.