PART I Opening out the Copyright Debate: Open Access, Ethics and Creativity

1. A Statement by The Readers Project1 Concerning Contemporary Literary Practice, Digital Mediation, Intellectual Property, and Associated Moral Rights

© 2019 John Cayley and Daniel C. Howe, CC BY 4.0 https://doi.org/10.11647/OBP.0159.01

During an era already defined as digital, in the second decade of the twenty-first century, we have arrived at a historical moment when processes designated as artificially intelligent are engineered to work with vast quantities of aggregated ‘data’ in order to generate new artefacts, statements, visualizations, and even decisions derived from patterns ‘discovered’ in this data. The data in question may well be linguistic and, occasionally, the AI-processed outcomes may be aesthetically motivated. Thus, our brave new world contains experiments in virtual literary art, linguistic artefacts ‘created’ by artificial intelligence. Or, a little more accurately, we are able to read, if we want to, works of language art that have been generated by rebranded, connectionist-based machine ‘learning,’ by recurrent or convolutional neural networks. Who or what is the ‘author’ of such outcomes if we are to consider them as works of language art? The ‘death of the author’ or, at least, the problematic question of authorship was raised well before electronic literary practice appeared to actualize postmodern theories in the late 1990s. Even then, works were authored by what N. Katherine Hayles now characterizes as ‘cognitive assemblages’ — human, medial, and computational. For these earlier works, however, their coding and its operations could be accounted for and anticipated by human author-engineers, still able to identify with their software and claim some moral rights with respect to the aesthetics of these processes.

Neural networks are not at all new. Yet with huge increases in raw computing power, and the spectacular accumulation of vast data sets, they are back in fashion and, likely, here to stay. One of a number of valid critiques of such algorithms is that they derive their patterns from the data in a manner that is highly abstract and largely inaccessible to human scrutiny, in the sense that we cannot, exhaustively, or in any detail, account for the specificities of what they produce. Thus, we might ask — in similar but differing circumstances as compared with earlier digital language art: is the language generated in this way original? Are we to consider an AI to be the author of such language?

But we must also ask: who owns the data? Who controls and benefits from its use? Answers to these questions will have momentous, potentially catastrophic consequences for cultural practice and production. The feverish enthusiasm surrounding AI seems likely to distort not only jurisprudence, but also socio- and political economic regulation with respect, for example, to the custom and law of intellectual property. If data sets and corpora are publicly available and these contain, for example, linguistic artefacts that are protected by copyright, AI processes operating on these corpora may appeal to the concept of ‘non-expressive fair use.’ It is presumed that the AI processes do not understand, appreciate, or care about — we might say they cannot ‘read’ — the expressive content of the protected artefacts in the corpora that they process. Thus the copyright holders of this content may have no claim, based on infringement, concerning whatever it is that the AI processes produce, whether or not this generates commercial or other benefits for the AI and its owners. That legal conceptions of this kind are being debated and, less often, established in court should not, in our opinion, cause us concern for the erosion of authors’ rights (as subject to non-expressive fair use). Instead we should see this as a kind of retrospective justification — perhaps calculated on behalf of the beneficiaries — for land-grab, enclosure-style theft from and of the cultural commons, all within legislative regimes that are propping up an inequitable, non-mutual, and unworkable framework for the generative creativity that copyright is supposed to encourage. The conventional custom and law of intellectual property, we say, does not and cannot encourage such creativity once culture is subject to digitalization. Arguably, copyright law has been ‘an ass’ since at least the age of mechanical reproduction. These days, calling it a dinosaur is too polite; it is a transnational swarm of Jurassic-Park raptors in the service of vectoralist superpowers.2

The raptors need retrospective justification because, in the guise of the robots and spiders that scurry over the Web, for example, they undertake their activities regardless of copyright, finding whatever they find with no sense of moral rights concerning association or integrity, and effectively proceeding on the basis of something like a presumption of ‘non-expressive fair use.’ They, the robots, don’t know what they’re doing, so we all consider what they are doing to be ‘OK’ — copying, appropriating, and processing whatever they find, regardless of who may ‘own’ it in terms of copyright. And there is a lot of stuff that these robots process that is clearly and absolutely ‘protected’ in terms of current legislation. All blogs, for instance, whether or not copyright is explicitly claimed for them. The robots’ owners may not, at first, historically, have known what their robots were doing (any more than the robots do), but this hasn’t prevented them from translating such ‘non-expressive fair use’ into privately owned wealth, value, and power to an unprecedented extent and at historically breakneck velocities.

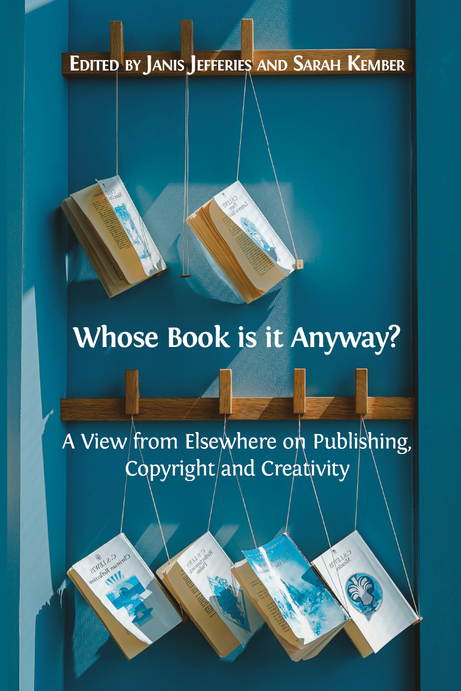

Compare the situation with respect to one of The Readers Project’s prominent outcomes. The Project is still squarely, for the moment, within the ‘earlier’ field of practice that I characterized, following Hayles, as a matter of cognitive assemblage: algorithmic cognisors working with human cognisors, the latter more or less self-conscious concerning the aesthetics, innovation, and originality of The Project as a whole. The installation Common Tongues and the artists’ book How It Is in Common Tongues both make transgressive use of networked search services in order to produce aesthetic works of conceptual and computational literature.3 These works read and reframe Samuel Beckett’s late novella, How It Is (1961). The Project’s software entities read through the work, seeking out and resolving its text into a sequence of phrases with particular characteristics. We call these Longest Common Phrases (or LCPs). Each LCP is the longest sequence of words, beginning from any specific point in the text, that can be found on the Internet, not written by or attributed to its author (as far as we can tell). We use Internet searches to find these phrases in other contexts, proving their continued circulation in the commons of language (an essential part of the cultural commons referred to above), unfettered by any liens of association or integrity. We then cite the web occurrences of these LCPs in How It Is in Common Tongues, a book released by The Project’s artists. In fact, we resolve the entire text of How It Is into common phrases as inscribed by thousands of other English language users. By doing so, we produce both an elegant aesthetic object and a text that reads quite differently from the original.

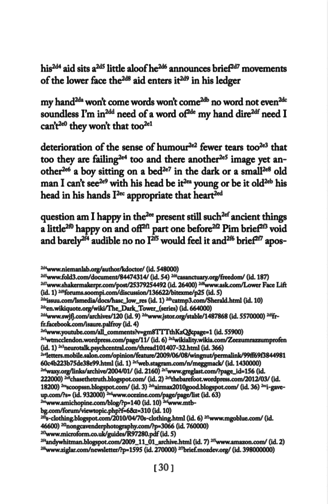

Fig. 1.1. How It Is In Common Tongues. Image provided by the authors, CC BY 4.0.

Fig. 1.2. How It Is In Common Tongues. Image provided by the authors, CC BY 4.0.

This new text is constantly interrupted by reference — by distracting invitations to turn to other networked writings. As such its paratext and punctuation is entirely novel and calls attention to alternate phrasings that generate strange, new, and differently engaging prose rhythms. It is a conceptual work: a new, distinct instance of digital language art. Nonetheless, it is also exhaustively associated with the Beckett’s novella. Its punctuation and annotation have been produced by algorithmic processes that transact with Internet search services in deliberate contravention of these services’ terms of use. Services providers, typically and non-mutually, regulate or deny robots — programmatic or algorithmic clients — access to their hoards. We claim that the transgressive — and controversial — practices by which we have created this work are significant additions to the existing repertoire of literary aesthetic practices. The work fully acknowledges and is highly respectful of its sources. It is undoubtedly non- if not anti-commercial. It is practice-based research that has already achieved considerable pedagogical traction with scholars and students internationally. Nonetheless, it is difficult to conceive that it would be read as an entirely original work, with little in the way of a ‘regular’ or ‘mechanical’ relation to its sources. The Project’s position, however, is that it is an original work, taking phrases from the commons of language, composing and punctuating them in manner that produces an incisive work of critical language art. In any immediate or longer-term future, if the kind of algorithmic and human compositional processes that underlie this piece were denied or contradicted — either by inadequate custom and law, or by vectoralist superpowers unilaterally and arbitrarily enforcing the force majeure of their terms of service — this would be part, we believe, of nothing less than a more general cultural and artistic catastrophe.

Moreover, in installations of Common Tongues, LCPs from a section of How It Is are used to discover and present, as textual collage, additional human-selected contexts for these LCP phrases, additional aesthetic language composed neither by Beckett, nor by The Project’s artists. These selections are quasi-algorithmically hand-stitched together (human authors editing generated text), maintaining syntactic regularity such that a new text is formed: one for which algorithmic processes guarantee that none of the constituent language is authored by the text’s makers. This text has its own significance and affect, though generated in regular relation with Beckett, and with hundreds of other writers. It could not have been made without the digital, without algorithms. It could not have been made without the writing of many others. As a creative work, it runs counter to the customs and laws of intellectual property and defies traditional conventions of authorship, yet it is clearly a critical and aesthetic response to the evolving circumstances of linguistic and literary practice. This is, we believe, another way of saying that it has value. But how should we recognize and preserve this value? How will we protect it from traditional literary estates and the aggressive cultural vectors that threaten? In the current historical moment, The Readers Project believes, we must allow interventions such as How It Is in Common Tongues to exist in the world of language art alongside conventional forms from the world of letters, and also in relation to commensurate works embraced and lauded by the visual and conceptual art worlds. Christian Marclay’s The Clock comes immediately to mind, recently proclaimed as a ‘masterpiece’ and newly on show in Tate Modern as of autumn 2018.4

We argue that the existing custom and law of intellectual property is unable to comprehend or regulate a significant proportion, if not the majority, of contemporary literary aesthetic practices; not only the productions of conceptual and uncreative writing but also, for example, compositional practices of collage, which writers have always deployed, but which have been fundamentally reconfigured by instant networked access to material — much of it ‘protected’ — amenable to ‘cut and paste.’ In circumstances like these, the custom and law of intellectual property reveals itself to be irremediably flawed. We claim that the types of processes and procedures implemented in The Project show how literary practices have been so altered by digital affordances and mediation that the fundamental expectations of human writers and readers — regarding their roles, their relationships to one another and to the text that travels between them, and the associated commercial relationships — are changed beyond easy recognition, and beyond the scope of existing custom and law. All practices of reading and writing are now inextricably intertwined with their network mediation — the Internet and its services — and so the questions and conflicts surrounding copyright and intellectual property have shifted from who creates and owns what, to who controls the most privileged and profitable tools for creation and dissemination. The Readers Project works to address this new situation and highlight its inconsistencies and inequities.

Network services have arisen that allow practices of reading and writing to be automatically and algorithmically captured, processed, indexed, and otherwise co-opted for the commercially-motivated creation and maintenance of vectors of attention (advertising) and transaction (actual commerce). These vectors are themselves, as the results of indexing, processing, and analysis, fed back to human readers and writers, profoundly affecting, in turn, their subsequent practices of reading and writing. In cyclical fashion, reading and writing is fed back into the continually refined black boxes of proprietary, corporate-controlled, algorithmic process: the ‘big software’ of capture, analysis, index and so on. This is the grand feedback loop of ‘big data,’ encompassing and enclosing the commonwealth of linguistic practice. As a function of proprietary control and the predominance of neoliberal ideology amongst its supermanagers, this system is regulated by little more than calculations of the marginal profit that the vectoralist service providers derive. And now, as noted above, the ‘black boxes’ are filled with inscrutable, energy-consuming, waste-generating, expensive AI.

In the perhaps naïve belief that we might all benefit, human readers and writers have willingly thrown themselves into this artefactual cultural vortex. Is it too late now to reconsider, to endeavour to radically change both the new and traditional institutions that allowed us to enter this maelstrom? Or have inequalities in the distribution of power over the vectors of transaction and attention — commercial but especially cultural — simply become too great? This power was acquired far too quickly by naive and untried corporate entities that still remain largely untried and unregulated, though they are, perhaps, far less naïve than they once were.5 Huge marginal profits allow the new corporations to acquire, on a grand scale, the estates of conventionally licensed intellectual property along with the interest and means to conserve them, via both legal and technical mechanisms. In a particularly vicious aspect of this cycle of wealth-and-power aggregation, these same mechanisms remain wholly inadequate to the task of regulating the culture and commerce of networks, clouds, and big data: the very culture and commerce that grant big software their profits. The raptors are out of the park.

Works Cited

Cayley, John (2013) ‘Terms of Reference & Vectoralist Transgressions: Situating Certain Literary Transactions over Networked Services’, Amodern 2, [n.p.], http://amodern.net/article/terms-of-reference-vectoralist-transgressions/, https://doi.org/10.5040/9781501335792.ch-012

Dworkin, Craig Douglas and Kenneth Goldsmith (eds.) (2011) Against Expression: An Anthology of Conceptual Writing (Evanston, IL: Northwestern University Press).

Goldsmith, Kenneth (2011) Uncreative Writing: Managing Language in the Digital Age (New York: Columbia University Press), https://doi.org/10.1353/jjq.2012.0020

Place, Vanessa and Robert Fitterman (2009) Notes on Conceptualisms (Brooklyn, NY: Ugly Duckling Presse).

Wark, McKenzie (2004) A Hacker Manifesto (Cambridge, MA: Harvard University Press), https://archive.org/stream/pdfy-RtCf3CYEbjKrXgFe/A Hacker Manifesto - McKenzie Wark_djvu.txt

1 The Readers Project is a collection of distributed, performative, quasi-autonomous poetic ‘readers’ — active, procedural entities with distinct reading behaviors and strategies that explore the culture of reading, http://thereadersproject.org/

2 ‘Vectoralist’ is the name that McKenzie Wark gave, persuasively, to a new exploitative ruling class which controls and profits from vectors of political and economic attention in a world where information is treated as a natural resource, extending earlier capitalist models and critiques. McKenzie Wark, A Hacker Manifesto (Cambridge, MA: Harvard University Press, 2004), https://archive.org/stream/pdfy-RtCf3CYEbjKrXgFe/A Hacker Manifesto - McKenzie Wark_djvu.txt

3 Please refer to The Project’s website http://thereadersproject.org, and, for further linked documentation of these works, to the ELMCIP Knowledge Base at http://elmcip.net/node/4677 (Common Tongues) and http://elmcip.net/node/5194 (How it Is in Common Tongues). For conceptual literature see, inter alia: Kenneth Goldsmith, Uncreative Writing: Managing Language in the Digital Age (New York: Columbia University Press, 2011) https://doi.org/10.1353/jjq.2012.0020; Craig Douglas Dworkin and Kenneth Goldsmith (eds.), Against Expression: An Anthology of Conceptual Writing (Evanston, IL: Northwestern University Press, 2011); and Vanessa Place and Robert Fitterman, Notes on Conceptualisms (Brooklyn, NY: Ugly Duckling Presse, 2009). Computational literature does not yet have so readily identifiable apologia. However Nick Montfort, http://nickm.com, is a major exponent and the work of Noah Wardrip-Fruin, both aesthetic and theoretical, is highly relevant and significant.

5 See John Cayley, ‘Terms of Reference & Vectoralist Transgressions: Situating Certain Literary Transactions over Networked Services’, Amodern 2 (2013), [n.p.], http://amodern.net/article/terms-of-reference-vectoralist-transgressions/,