12. Transforming Practice

Checklists for Delivering Change

© 2022 Chapter Authors, CC BY-NC 4.0 https://doi.org/10.11647/OBP.0321.12

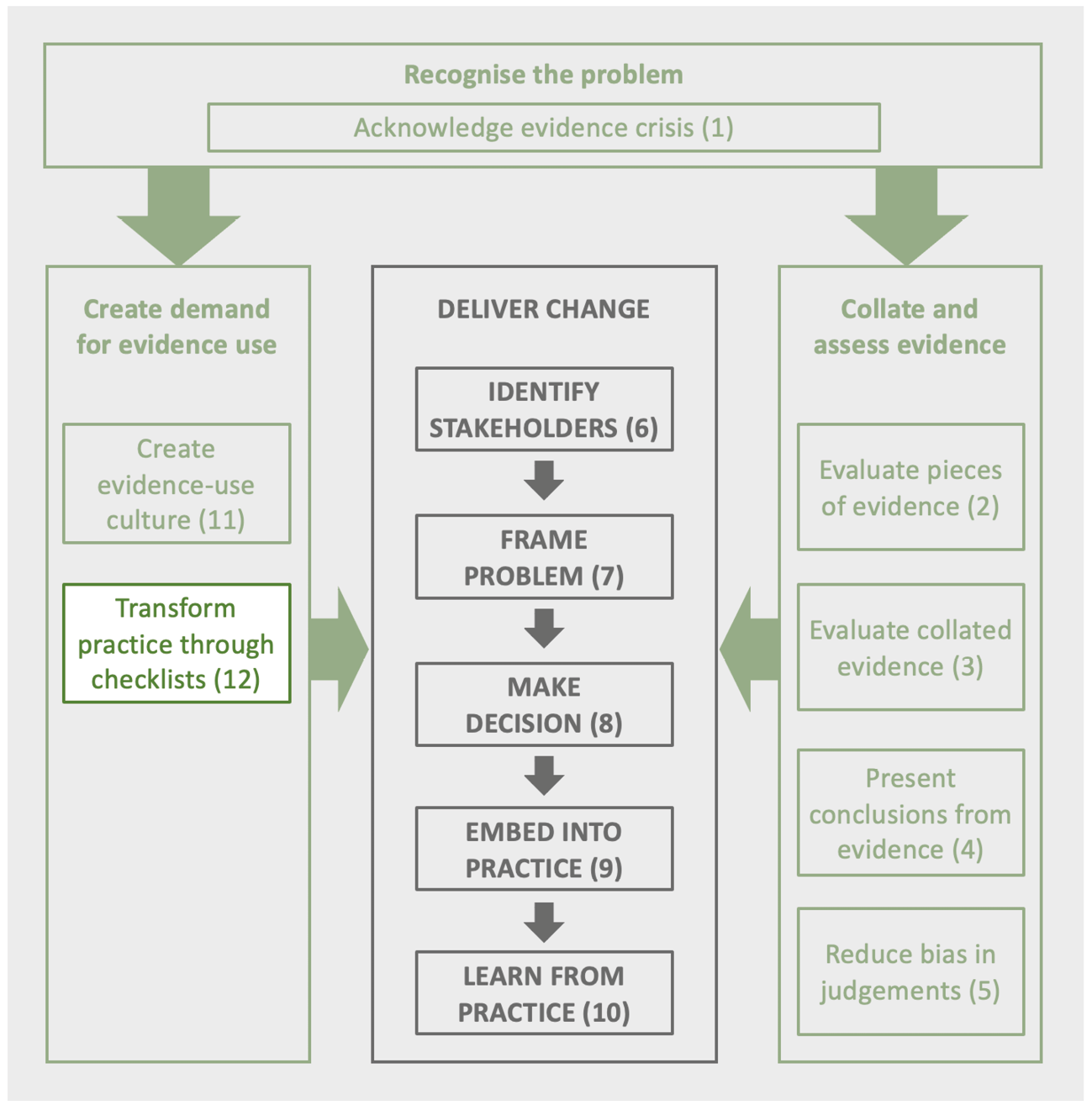

Delivering a revolution in evidence use requires a cultural change across society. For a wide range of groups (practitioners, knowledge brokers, organisations, organisational leaders, policy makers, funders, researchers, journal publishers, the wider conservation community, educators, writers, and journalists), options are described to facilitate a change in practice, and a series of downloadable checklists is provided.

1 School of Biological Sciences, University of Queensland, Brisbane, 4072 Queensland, Australia

2 The AP Leventis Ornithological Research Institute (APLORI), University of Jos Biological Conservatory, PO Box 13404, Laminga, Jos-East LGA, Plateau State, 930001, Nigeria

3 Parks Canada Agency, Indigenous Conservation, Indigenous Affairs and Cultural Heritage, 30 Rue Victoria, Gatineau, Quebec, Canada

4 Foundations of Success Europe, 7211 AA Eefde, The Netherlands

5 British Trust for Ornithology, The Nunnery, Thetford, Norfolk, UK; Department of Geography, University of Florida, Gainesville, FL 32611, USA

6 Houses of Parliament, Parliament Square, London SW1A 0PW, UK

7 Biosecurity Research Initiative at St Catharine’s College (BioRISC), St Catharine’s College, Cambridge, UK

8 School of Natural Sciences, Trinity College, Dublin, Ireland

9 Centre for Environmental Policy, Imperial College London, London, UK

10 Biological Sciences, University of Toronto-Scarborough, 1265 Military Trail, Toronto, ON, Canada

11 Institute of Zoology, Zoological Society London, Regent’s Park, London, UK

12 Center for Biodiversity and Conservation, American Museum of Natural History, New York, NY 10026, USA

13 Downing College, University of Cambridge, Regent Street, Cambridge, UK

14 Department of Zoology, University of Cambridge, The David Attenborough Building, Pembroke Street, Cambridge, UK

15 BirdLife International, Mermoz Pyrotechnie, Lot 23 Rue MZ 56, Dakar, Senegal

16 School of Biological Sciences, Monash University, Clayton, VIC 3800, Australia

17 Carleton University, Ottawa, ON, Canada

18 University of Cambridge Institute for Sustainability Leadership, The Entopia Building, 1 Regent Street, Cambridge CB2 1GG, UK

19 Beeflow, E Los Angeles Ave., Somis, CA 93066, USA

20 Birdlife International, The David Attenborough Building, Pembroke Street, Cambridge, UK

21 Woodland Trust, Kempton Way, Grantham, Lincolnshire, UK

22 Digital Geography Lab, Department of Geosciences and Geography, Faculty of Sciences, University of Helsinki, Helsinki, Finland

23 The Wilder Institute, 1300 Zoo Road NE, Calgary, Alberta, Canada

24 Women for the Environment Africa (WE Africa) Fellow

25 The Center for Behavioral & Experimental Agri-Environmental Research, Johns Hopkins University, Baltimore, MD, USA

26 Field and Forest, Land Management, Winchester, UK

27 Fauna & Flora International, The David Attenborough Building, Pembroke Street, Cambridge, UK

28 School of Biosciences, University of Sheffield, Sheffield, UK

29 Bat Conservation International, 1012 14th Street NW, Suite 905, Washington, D.C. 20005, USA

30 Department of Ecology and Evolutionary Biology, University of California Santa Cruz, 130 McAllister Way, Santa Cruz, CA 95060, USA

31 Oxford Martin School, University of Oxford, Oxford, UK

32 Norwegian Institute for Nature Research (NINA), PO Box 5685 Torgarden, 7485 Trondheim, Norway

33 RSPB Centre for Conservation Science, RSPB Scotland, North Scotland Office, Etive House, Beechwood Park, Inverness, UK

34 Cairngorms Connect, Achantoul, Aviemore, UK

35 Department of Forest and Conservation Sciences, The University of British Columbia, Forest Sciences Centre, 3041 – 2424 Main Mall, Vancouver BC, V6T 1Z4 Canada

36 UN Environment World Conservation Monitoring Centre, 219 Huntingdon Rd, Cambridge, UK

37 Parks Canada Agency, Ecosystem Conservation, Protected Areas Establishment and Conservation, 30 Rue Victoria, Gatineau, Quebec, Canada

38 Woodland Trust, Kempton Way, Grantham, Lincolnshire, UK

39 US Fish and Wildlife Service, National Wildlife Refuge System, 2800 Cottage Way, Suite W-2606

40 Department of Biological Sciences, Royal Holloway University of London, Egham, Surrey, UK

41 Partner, Conservation Measures Partnership (CMP), Montreal, Canada

42 Ingleby Farms and Forests, Slotsgade 1A, 4600 Køge, Denmark

43 United States Fish and Wildlife Service International Affairs, 5275 Leesburg Pike, Falls Church, VA 22041, USA

44 College of Business, Arts and Social Sciences, Brunel University, London, UK

45 NatureScot, Great Glen House, Leachkin Road, Inverness, UK

46 Endangered Landscapes Programme, Cambridge Conservation Initiative, The David Attenborough Building, Pembroke Street, Cambridge, UK

47 The Whitley Fund for Nature, 110 Princedale Road, London, UK

48 Ocean Discovery League, P.O. Box 182, Saunderstown, RI 02874, USA

49 Centre for Evidence Based Agriculture, Harper Adams University, Newport, Shropshire, UK

50 Department of Civil Engineering, University of Asia Pacific, Dhaka 1205, Bangladesh

51 International Institute for Environment and Development, 235 High Holborn, London, UK

52 School of Water, Energy and the Environment, Cranfield University, College Road, Cranfield, Bedford, UK

53 School of Ecosystem and Forest Sciences, University of Melbourne, Parkville 3052, Melbourne, Australia

54 Centre for Environmental and Climate Science, Lund University, Lund, Sweden

55 Measures of Success, Bethesda, Maryland, USA

56 Department Biodiversity & Nature Conservation, Environment Agency Austria (Umweltbundesamt), Vienna, Austria

57 Science Media Centre, 183 Euston Road, London, UK

58 Small Mammal Conservation Organisation SMACON, Plot 8, Christiana Imudia, Irhirhi, Benin City, Nigeria

59 Forestry England, 620 Bristol Business Park, Coldharbour Lane, Bristol, UK

60 NatureScot, Silvan House, 231 Corstorphine Road, Edinburgh, UK

61 Kent Wildlife Trust, Tyland Barn, Chatham Road, Sandling, Maidstone, UK; current address: Butterfly Conservation, Manor Yard, East Lulworth, Wareham, Dorset, UK

62 RSPB Centre for Conservation Science, 2 Lochside View, Edinburgh, UK

63 School of Ecosystem and Forest Sciences, University of Melbourne, Victoria 3010 Australia

64 Ritsumeikan Asia Pacific University, Beppu, Oita, Japan; Nagoya University Graduate School of Environmental Studies, Nagoya, Aichi, Japan

65 Pacific Rim Conservation, PO Box 61827, Honolulu, HI 96839, USA

* The findings and conclusions in this chapter are those of the authors and do not necessarily represent the views of the US Fish and Wildlife Service.

12.1 The Importance of Checklists

A checklist is a systematically ordered list of actions that enables users to perform each action consistently and record its completion, reducing errors caused by missing crucial steps (Gawande, 2010). Checklists can help organisations and individuals consistently measure and monitor outputs and outcomes, and hence develop a culture of continuous quality improvement. They have been shown to improve outcomes in other areas of practice, as powerfully described in The Checklist Manifesto (Gawande, 2010). Aircraft safety, for example, has been transformed by the use of checklists. This was exemplified by the ‘Miracle on the Hudson’, the landing of US Airways Flight 1549 on the Hudson River: the pilots ran through a checklist before take-off, worked through the engine failure checklists while attempting to restart the engines after colliding with a flock of geese, and then, after landing on the river, ran through the evacuation checklist.

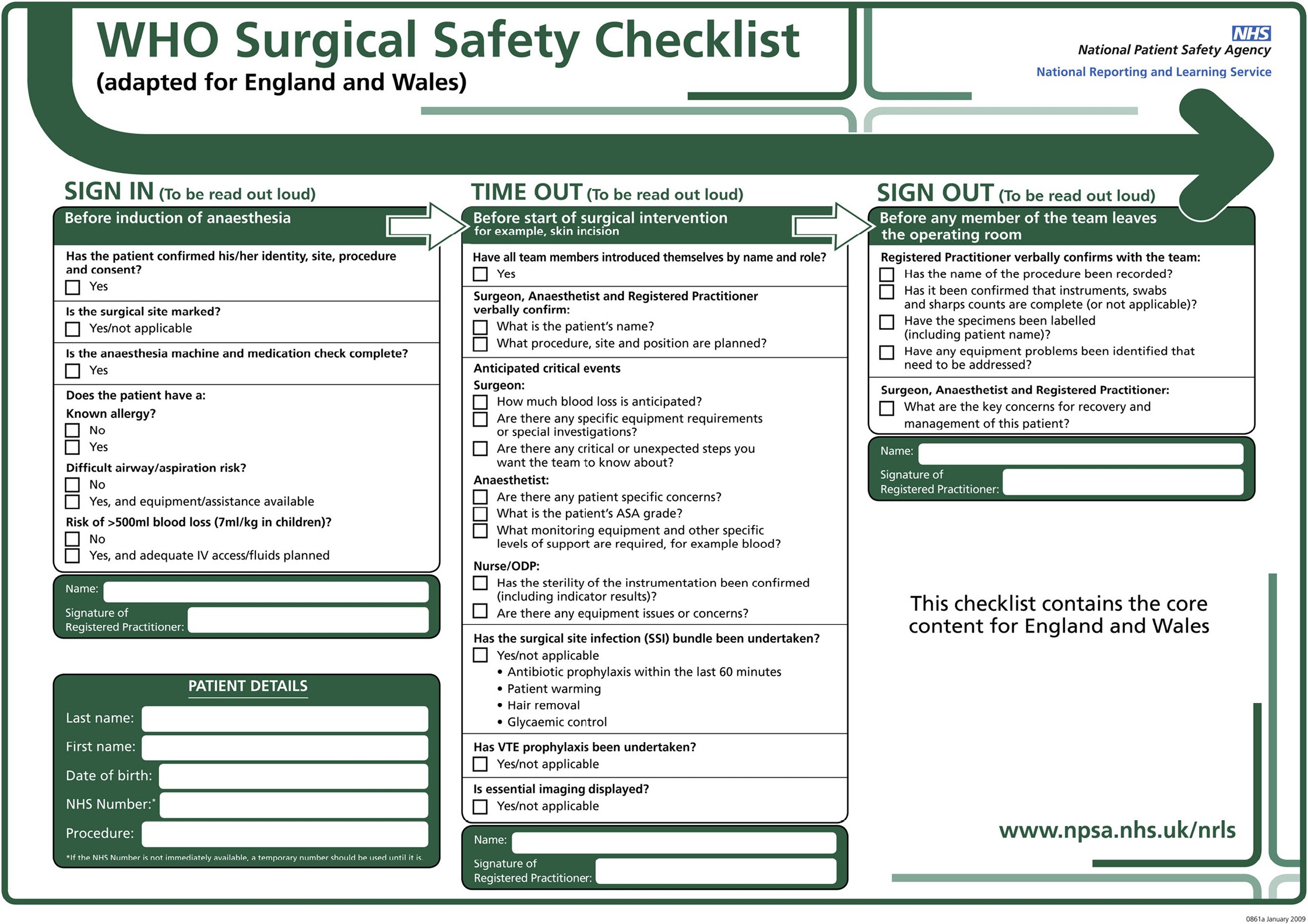

Gawande (2010) organised the creation of a checklist for use in surgery: the World Health Organization’s Surgical Safety Checklist (Figure 12.1). Some of its 19 questions appear obvious. Are you operating on the correct patient? Is it the correct operation? Are you at the correct site for the operation? Are you operating on the correct side of the patient? Has the area been disinfected recently? Have all the tools and swabs been removed after the operation? A randomised controlled trial testing whether the checklist was effective (Haugen et al., 2015) found that, when the checklist was used, the rate of surgical complications fell from 19.9% to 11.5% and the mean length of hospital stay was reduced by 0.8 days. The reduction in mortality, from 1.6% to 1.0%, was not statistically significant.

Figure 12.1 The World Health Organization checklist for surgeries (adapted for England and Wales). (Source: Panesar et al., 2010, © 2010, with permission from Elsevier)
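To make the size of these effects concrete, the Haugen et al. (2015) percentages quoted above can be expressed as absolute and relative reductions. This is a simple worked calculation on the reported point estimates only; it says nothing about statistical significance, and the mortality change in particular was not significant.

```latex
\begin{aligned}
\text{Complications, absolute: } & 19.9\% - 11.5\% = 8.4 \text{ percentage points} \\
\text{Complications, relative: } & \frac{19.9 - 11.5}{19.9} \approx 0.42 \;(\approx 42\%) \\
\text{Mortality, relative (not significant): } & \frac{1.6 - 1.0}{1.6} = 0.375 \;(37.5\%)
\end{aligned}
```

Reporting both forms avoids overstating a result: when the baseline rate is low, as with mortality here, a sizeable relative reduction corresponds to a small absolute change.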

This chapter provides a series of checklists, each aimed at a different group of users, to help improve processes for using evidence and making decisions. Whilst the narrative of this chapter focuses on conservation, the checklists are equally applicable to other disciplines. The checklists are available on the associated website at https://doi.org/10.11647/OBP.0321#resources, so they can be downloaded and modified for individual use. They are open access (Creative Commons CC-BY-4.0). Whilst attribution is welcome, it is not essential.

The checklists provided here differ from those used in surgery or aircraft safety, where the user has to check every applicable box. Instead, they describe what ideal evidence-based practice looks like; they are therefore targets, and unchecked items provide an opportunity for reflection. The checklists are inevitably somewhat repetitive across groups.
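One way to picture this difference is to treat a checklist as a target rather than a gate. The sketch below is a hypothetical Python model, not part of the downloadable checklists: the class names and the three example items are illustrative assumptions. Ticked items are recorded, and unticked items are returned as prompts for reflection rather than reported as failures.

```python
from dataclasses import dataclass, field


@dataclass
class ChecklistItem:
    """One statement of good practice (illustrative wording only)."""
    text: str
    done: bool = False


@dataclass
class TargetChecklist:
    """A checklist used as a target: unticked items prompt reflection.

    Hypothetical model; it does not reproduce any checklist in this chapter.
    """
    title: str
    items: list[ChecklistItem] = field(default_factory=list)

    def add(self, text: str) -> None:
        self.items.append(ChecklistItem(text))

    def tick(self, index: int) -> None:
        # Record that an item has been achieved.
        self.items[index].done = True

    def to_reflect_on(self) -> list[str]:
        # Unticked items are returned as prompts, not flagged as errors.
        return [item.text for item in self.items if not item.done]

    def progress(self) -> float:
        # Fraction of items ticked so far (0.0 for an empty checklist).
        if not self.items:
            return 0.0
        return sum(item.done for item in self.items) / len(self.items)


checklist = TargetChecklist("Evidence use in a project decision (illustrative)")
checklist.add("Relevant evidence was searched for and recorded")
checklist.add("The evidence was assessed for reliability and local relevance")
checklist.add("The reasons for the final decision were documented")
checklist.tick(0)

for prompt in checklist.to_reflect_on():
    print("Reflect on:", prompt)
print(f"Progress: {checklist.progress():.0%}")
```

A surgical-style checklist would instead block progress until every applicable item is ticked; here the output is simply a list of items worth thinking about.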

12.2 The Decision-Making Process

The heart of this book is a set of processes for delivering more effective conservation by improving the use of evidence in policy and practice. The process for making decisions based on evidence is summarised in Checklist 12.1, which provides a simple check of whether good practice is being adopted.

12.3 Organisations

As the importance of using evidence becomes accepted, organisations will need a shift in emphasis such that evidence use is routinely embedded in decision making, as described in Chapter 9.

Embedding evidence use will require organisations to build capacity, train and mentor staff, and commit to employing new recruits skilled in using evidence. Recruitment and training should ensure conservation practitioners develop the motivation and necessary skills to embed evidence in practice. Checklist 12.2 describes the activities that can be considered by organisations interested in becoming evidence based.

There is also a need to add to the existing evidence base by testing actions and making the results available to others, for example by publishing them, posting them online, and documenting methods, results and data on open science platforms (such as the Open Science Framework run by the Center for Open Science). Some journals specifically aim to attract articles from practitioners: examples include Ecological Solutions and Evidence, with the associated Applied Ecology Resources platform (https://www.britishecologicalsociety.org/applied-ecology-resources/), and the Conservation Evidence Journal, which also encourages the publication of short papers by practitioners.

Documenting actions that are unsuccessful, or only partially successful, is as informative as documenting those that work. This allows practitioners to learn from the problems faced or mistakes made by others and thus avoid potentially costly errors. In a review of the papers in the Conservation Evidence Journal, Spooner et al. (2015) found that 31% of tested interventions were considered unsuccessful.

Ideally, organisations should set up at least one test a year (which can be very simple) to examine the effectiveness of an action. This could be done by comparing the action with no treatment, or by directly comparing different treatments, or different ways of carrying out a technique, within one study (Smith et al., 2014).
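A simple comparison of an action against no treatment can be analysed in a few lines. The sketch below assumes hypothetical seedling counts from five plots where an action was applied and five untreated plots (the numbers are invented for illustration), and uses a permutation test, one of several reasonable choices rather than a method prescribed here, to ask how often a difference at least as large would arise if the action had no effect.

```python
import random
from statistics import mean


def permutation_test(treated, control, n_perm=10_000, seed=1):
    """Two-sided permutation test for a difference in mean outcome.

    Returns the observed difference (treated minus control) and a p-value.
    """
    observed = mean(treated) - mean(control)
    pooled = list(treated) + list(control)
    n_treated = len(treated)
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    extreme = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)  # reassign plots to the two groups at random
        diff = mean(pooled[:n_treated]) - mean(pooled[n_treated:])
        # Small tolerance so exact ties are not lost to floating-point rounding.
        if abs(diff) >= abs(observed) - 1e-9:
            extreme += 1
    # Counting the observed arrangement keeps the p-value above zero.
    p_value = (extreme + 1) / (n_perm + 1)
    return observed, p_value


# Hypothetical seedling counts per plot (illustrative numbers only).
treated_plots = [14, 18, 11, 16, 15]
control_plots = [9, 12, 8, 10, 13]

difference, p = permutation_test(treated_plots, control_plots)
print(f"Mean difference: {difference:.1f} seedlings per plot, p = {p:.3f}")
```

With only ten plots, all possible allocations could also be enumerated exactly. More importantly, a test this small has little power, so a non-significant result should not be read as showing that an action does not work.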

12.3.1 Easy wins for organisation leaders

A key role of leaders is to create a culture in which evidence use is encouraged, and to enable the activities described in Checklist 12.1. This can be achieved by combining processes that embed evidence use, such as those in Chapter 9, with routinely asking about the basis of decisions.

While some of the proposals in this chapter will take time to deliver, there are easy wins that organisational leaders can put into action simply; these are listed in Checklist 12.3.

12.4 Knowledge Brokers

The fundamental aim of this book is to facilitate the process of embedding evidence into practice, in order to make conservation actions more effective and efficient. Many of the anticipated changes will occur through practitioners and decision makers being more involved in research, by researchers being more involved in practice, and by organisations encouraging such collaborations.

Knowledge brokers can be useful in creating these cross-disciplinary communities (Checklist 12.4 suggests possible actions for knowledge brokers). Evidence-based medicine, for example, has a network of knowledge brokers who bridge the gap between researchers and frontline doctors and nurses by translating research findings into implications for clinical practice. Similarly, there is a need to create a cohort of evidence-based conservation knowledge brokers. These advisors could be employed either within organisations or within established external entities, such as universities and other research institutes, that provide services to a range of organisations (Cook et al., 2013). Kadykalo et al. (2021) call such individuals and organisations ‘evidence bridges’, which would help foster connections between researchers and practitioners, and synthesise and distribute research. Knowledge brokers would have three main roles:

- Aiding the interpretation of evidence for decision-making: As evidence-based conservation becomes increasingly accepted, the evaluation and interpretation of evidence will likely become more important. This involves both evaluating the strength of evidence from different sources and assessing how relevant research is to local conditions. We expect that this interpretation will sometimes be provided in response to questions about an individual case (such as an advisor answering a question from a land manager) and sometimes as generic advice (such as an organisation advising its staff on how to treat invasive plants in their region). Interpreting the evidence for local conditions should be done in close consultation with practitioners.

- Producing accessible information that incorporates evidence: Knowledge brokers can also help create evidence-based guidance. As described in Chapter 9, such guidance is key, particularly as an accepted standard for actions undertaken by ecological consultants.

- Assisting with the design of tests to assess effectiveness: It is unreasonable to expect that most practitioners have the training and skills to design experiments, collect and analyse data or disseminate the results. There is a need for knowledge brokers who can assist with these tasks.

The Woodland Trust (UK) is one example of an organisation fulfilling this knowledge broker role, through its Outcomes and Evidence team. At the time of writing, the team consists of 21 staff members whose expertise includes woodland ecology, citizen science, evidence use, land management, monitoring and evaluation, tree health, carbon and soil science, data science, and more. The team funds, co-designs and co-delivers research with policy makers and practitioners; provides evidence syntheses and summaries; produces evidence-based guidance; provides bespoke advice to landowners; helps design projects and experiments; disseminates results in a wide variety of accessible formats; and engages with internal and external stakeholders to enable evidence-based delivery of conservation outcomes.

12.5 Practitioners and Decision Makers

Those who make decisions and those who convert them into actions are central to the use of evidence in practice (see Checklist 12.5). They include a diverse range of individuals (e.g. nature reserve managers, reserve wardens, farmers, rangers, environmental site managers and foresters). As expectations and requirements change, and as evidence-use skills become essential for employment, we expect that evidence use will become standard practice over time.

12.6 Commissioners of Reports and Advice

Checklist 12.6 looks at ways of establishing whether reports are evidence-based. These may be reports from consultants or internal reports. Research indicates that conservation guidance documents, and the actions they commonly recommend, are often weakly based on evidence. Hunter et al. (2021) showed that the ecological mitigation and compensation measures recommended in practice often have a limited evidence base, and that most actions were justified by reference to guidance documents. In turn, Downey et al. (2022) showed that most guidance documents for the mitigation and management of species and habitats in the UK and Ireland do not cite scientific evidence to justify the recommended actions, and are often outdated. This creates a risk of carrying out actions that are ineffective, or of failing to carry out the most cost-effective action. Ineffective actions can waste limited funds, damage staff or volunteer morale, and lead to bad publicity or reputational harm.

12.7 Funders and Philanthropists

Funders may be in the best position to deliver a fundamental change in evidence use. Conservation practice would be transformed if funders expected transparent evidence use and agreed to fund work that improves the evidence base (see Checklist 12.7). Furthermore, the expected efficiency gains outlined in Chapter 1 should deliver substantially better outcomes for the same funding. In turn, such increased efficiency may make funding conservation more attractive.

The most important questions for funders to ask applicants are: Does the applicant have a history of using evidence? What processes are in place for using evidence? How well are the proposed actions evidenced? The main ways in which funders can help increase and embed the use of evidence in practice are listed below. They can:

- Ask applicants why they believe their proposal will work: There is a range of ways (see Chapter 9) in which funders can ask why the applicant believes their proposal will be effective and ensure that evidence has not been cherry-picked to support a particular course of action.

- Encourage rigorous testing of actions: Funders can encourage experimental testing, analysis and publication of the effects of interventions by allowing a) a percentage of grant money to be spent on assessing effectiveness and b) sufficient time to complete this evaluation. This could be an expected component of large projects and an optional element of small grants, focusing on those opportunities where testing is appropriate (Section 10.3).

- Fund learning organisations: Funders can favour organisations that learn by evaluating and testing interventions and ideas; they can rate applicants on their past record of project evaluation and of making results publicly available. Funders should, however, make it clear that this criterion relates to whether an organisation’s evaluation is rigorous and whether it is learning, rather than to claims about how successful its programme is.

- Promote open data: Funders can require that the data collected, such as species recorded or responses to interventions, are stored in standard open access databases (with appropriate protection of geospatial data where necessary to safeguard vulnerable species and habitats), and they can provide funds for the necessary data curation work.

- Encourage open science: Funders can encourage submission to journals that are making progress toward open science, for example by following the Transparency and Openness Promotion Guidelines (https://www.cos.io/initiatives/topguidelines).

- Ensure evidence base and infrastructures are funded: Effective delivery of evidence-based practice requires that evidence is collated, synthesised and made available for use, for example through guidance or decision support tools. There is also a need to fund adequate training, capacity building and training materials.

- Accept publication as part of the reporting process: A major barrier to dissemination is that many organisations do not have the time both to write a report for the funders and to publish a scientific paper. Accepting a final report in the form of a draft paper, or papers, reduces this problem.

- Establish a common register of projects: Funders can establish an open register of funded projects (preferably a joint register across many funders), which also raises the expectation that robust data will be generated and subsequently made available to practitioners and scientists. Such a register is also useful for testing for publication bias (e.g. in the reported success of a given type of project) and for reducing duplication of projects or tests of interventions.

- Fund organisations committed to evidence use: Funders can show a preference for organisations with a clear, demonstrated commitment to evidence-based practice (e.g. Conservation Evidence ‘Evidence Champions’). There is also a need for evidence certification, for example through professional bodies.

12.8 The Research and Education Community

Evidence-based medicine depends on an enormous pool of scientists who conduct basic research to underpin medical practice. It also relies on entire institutions that conduct syntheses of evidence, including members of the Cochrane Collaboration. Researchers and the education community have a large part to play in the practice of evidence-based conservation. Their roles are to:

- Identify priorities for evidence synthesis: Engage with stakeholders, practitioners, managers and policy makers to identify gaps in synthesis; decide whether an evidence map, subject-wide evidence synthesis, systematic review or dynamic synthesis is the most appropriate approach; and create a process for delivering it.

- Conduct research that fills gaps in the evidence base: Questions suitable for research might be a) identified by policy makers or practitioners (Sutherland et al., 2006, 2009), b) identified as gaps in policy (Sutherland et al., 2010a), or c) identified as gaps in systematic reviews or synopses of evidence (e.g. an evidence synthesis for primate conservation identified numerous evidence gaps; Junker et al., 2020). Building relationships with policy makers and practitioners helps to identify knowledge gaps and to disseminate research findings.

- Facilitate timely publication and dissemination of research findings: The average time between the end of data collection and publication for studies of conservation interventions has been estimated at 3.2 years (Christie et al., 2021); such delays hinder practitioners from accessing up-to-date evidence. They can be reduced by disseminating findings promptly to relevant stakeholders and by publishing results in a timely way, including as preprints where appropriate, and in open access sources where possible.

- Encourage a culture of evidence-based practice: Routinely develop questions to test the evidence base, and communicate the need for evidence synthesis or primary research. Setting an agenda of priority conservation activities would direct projects to areas where learning could provide the greatest benefits.

- Teach effective decision making: We envisage major growth in the provision of training in effective decision-making skills (as described in this book), in a range of languages and at different levels. Chapter 10 gives further details on how this could be achieved.

- Teach approaches to quantifying the effectiveness of interventions: Opportunities for generating convincing evidence are often missed, or ineffective experimental designs are used. There is thus a need for improved knowledge of experimental design, data collation, data analysis and the publication process.

- Introduce reporting standards (journals): It is often difficult or impossible to extract key data from papers. This situation would be improved by introducing reporting standards for research papers, in a machine-readable form, to facilitate the subsequent use of data in synthesis and meta-analysis; editors of medical journals have made similar advances (Pullin and Salafsky, 2010). One solution is to form an international committee, similar to the International Committee of Medical Journal Editors (https://www.icmje.org/), to publish ‘uniform requirements for manuscripts’ covering matters such as reference styles.

- Introduce standards for evidence synthesis methodology and reporting (journals): Review articles vary considerably in their rigour and reliability. Editors should consider using published checklists to raise the standard of review articles that attempt to synthesise existing evidence (Pullin et al., 2022; O’Dea et al., 2021).

- Request that authors report the costs of interventions (journals): Encourage authors to report the cost of an intervention in a way that allows policy makers to translate this information to their own management context and to identify the most cost-effective alternative. Consistent methods for reporting costs would enable economic return-on-investment evaluations as part of evidence synthesis.

- Reduce barriers to the publication of evidence (journals): Publishers can take a number of measures to reduce obstacles to, and delays in, publication. For example, they could relax formatting requirements for the initial screening of articles, conduct preliminary peer review before submission, or move to new publication models in which articles are published before peer assessment.

- Standardise methods: A major problem in reviewing environmental evidence is that a wide range of methods is used, which makes comparisons difficult. Some of this variation has sensible reasons, but much appears not to. Standardising methods and outcome measurements, as has been done for bird reintroductions (Sutherland et al., 2010b), is therefore a useful step that could be applied more widely.

- Standardise terminology: Comparing studies requires standard terminology. Salafsky et al. (2008) suggest standard terms for the major threats, actions and habitats. Similarly, Mascia et al. (2014) provide standardised terminology for approaches to monitoring and evaluation. A useful analogy is medicine before common names were agreed for diseases and potential cures, a situation that greatly hindered systematic science.

- Agree upon and use standard information repositories: Create effective, attractive information systems for storing the outcomes of evidence-based planning and decision making, and of monitoring and testing. Applied Ecology Resources (https://www.britishecologicalsociety.org/applied-ecology-resources/), Conservation Evidence (https://www.conservationevidence.com/), Panorama (https://panorama.solutions/en) and GBIF (https://www.gbif.org/conservation) are examples of repositories for such material.

- Proactively bridge gaps between practice and research (see Kadykalo et al., 2021): Facilitate conversations and networking between researchers and practitioners to help identify evidence needs and disseminate new evidence to the wider community. Consult practitioner groups frequently to help shape research plans and identify practical, effective solutions.
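The point above about reporting intervention costs can be illustrated with a minimal cost-effectiveness comparison. The sketch below assumes that costs are reported in one currency and price year and that each study reports a comparable outcome gain (here, hypothetical additional breeding pairs); the option names and all numbers are invented. Dividing cost by outcome gain gives a cost per unit of benefit that can be ranked across options.

```python
from dataclasses import dataclass


@dataclass
class Intervention:
    name: str
    cost: float          # total cost, same currency and price year for all options
    outcome_gain: float  # benefit attributable to the action, in a shared unit

    def cost_per_unit(self) -> float:
        # An action with no measured benefit cannot be cost-effective.
        if self.outcome_gain <= 0:
            return float("inf")
        return self.cost / self.outcome_gain


def rank_by_cost_effectiveness(options: list[Intervention]) -> list[Intervention]:
    """Order options from lowest to highest cost per unit of benefit."""
    return sorted(options, key=lambda option: option.cost_per_unit())


# Hypothetical options (illustrative numbers only).
options = [
    Intervention("Option B", cost=5_000, outcome_gain=10),
    Intervention("Option A", cost=12_000, outcome_gain=30),
    Intervention("Option C", cost=8_000, outcome_gain=0),
]

for option in rank_by_cost_effectiveness(options):
    print(f"{option.name}: {option.cost_per_unit():,.0f} per additional pair")
```

A real return-on-investment evaluation would also need to account for uncertainty in the outcome estimates and for differences in context, which is why consistent reporting of both costs and effects matters.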

References

Christie, A.P., White, T.B., Martin, P.A., et al. 2021. Reducing publication delay to improve the efficiency and impact of conservation science. PeerJ 9: e12245, https://doi.org/10.7717/peerj.12245.

Cook, C.N., Mascia, M.B., Schwartz, M.W., et al. 2013. Achieving conservation science that bridges the knowledge-action boundary. Conservation Biology 27: 669–78, https://doi.org/10.1111/cobi.12050.

Downey, H., Bretagnolle, V., Brick, C., et al. 2022. Principles for the production of evidence-based guidance for conservation actions. Conservation Science and Practice 4: e12663, https://doi.org/10.1111/csp2.12663.

Gawande, A. 2010. The Checklist Manifesto: How to Get Things Right (London: Profile Books).

Haugen, A.S., Søfteland, E., Almeland, S.K., et al. 2015. Effect of the World Health Organization checklist on patient outcomes: A stepped wedge cluster randomized controlled trial. Annals of Surgery 261: 821–28, https://doi.org/10.1097/SLA.0000000000000716.

Hunter, S.B., zu Ermgassen, S.O.S.E., Downey, H., et al. 2021. Evidence shortfalls in the recommendations and guidance underpinning ecological mitigation for infrastructure developments. Ecological Solutions and Evidence 2: e12089, https://doi.org/10.1002/2688-8319.12089.

Junker, J., Petrovan, S.O., Arroyo-Rodríguez, V., et al. 2020. A severe lack of evidence limits effective conservation of the world’s primates. BioScience 70: 794–803, https://doi.org/10.1093/biosci/biaa082.

Kadykalo, A.N., Buxton, R.T., Morrison, P., et al. 2021. Bridging research and practice in conservation. Conservation Biology 35: 1725–37, https://doi.org/10.1111/cobi.13732.

Mascia, M.B., Pailler, S., Thieme, M.L., et al. 2014. Commonalities and complementarities among approaches to conservation monitoring and evaluation. Biological Conservation 169: 258–67, https://doi.org/10.1016/j.biocon.2013.11.017.

O’Dea, R.E., Lagisz, M., Jennions, M.D., et al. 2021. Preferred reporting items for systematic reviews and meta-analyses in ecology and evolutionary biology: A PRISMA extension. Biological Reviews 96: 1695–722, https://doi.org/10.1111/brv.12721.

Panesar, S.S., Carson-Stevens, A., Fitzgerald, J.E., et al. 2010. The WHO surgical safety checklist — junior doctors as agents for change. International Journal of Surgery 8: 414–6, https://doi.org/10.1016/j.ijsu.2010.06.004.

Pullin, A.S., Cheng, S.H., Jackson, J.D., et al. 2022. Standards of conduct and reporting in evidence syntheses that could inform environmental policy and management decisions. Environmental Evidence 11: 16, https://doi.org/10.1186/s13750-022-00269-9.

Pullin, A.S. and Salafsky, N. 2010. Save the whales? Save the rainforest? Save the data! Conservation Biology 24: 915–17, https://doi.org/10.1111/j.1523-1739.2010.01537.x.

Salafsky, N., Salzer, D., Stattersfield, A.J., et al. 2008. A standard lexicon for biodiversity conservation: Unified classifications of threats and actions. Conservation Biology 22: 897–911, https://doi.org/10.1111/j.1523-1739.2008.00937.x.

Smith, R.K., Dicks, L.V., Mitchell, R., et al. 2014. Comparative effectiveness research: The missing link in conservation. Conservation Evidence 11: 2–6, https://conservationevidencejournal.com/reference/pdf/5475.

Spooner, F., Smith, R.K. and Sutherland, W.J. 2015. Trends, biases and effectiveness in reported conservation interventions. Conservation Evidence 12: 2–7, https://conservationevidencejournal.com/reference/pdf/5494.

Sutherland, W.J., Adams, W.M., Aronson, R.B., et al. 2009. One hundred questions of importance to the conservation of global biological diversity. Conservation Biology 23: 557–67, https://doi.org/10.1111/j.1523-1739.2009.01212.x.

Sutherland, W.J., Albon, S.D., Allison, H., et al. 2010a. The identification of priority policy options for UK nature conservation. Journal of Applied Ecology 47: 955–65, https://doi.org/10.1111/j.1365-2664.2010.01863.x.

Sutherland, W.J., Armstrong-Brown, S., Armsworth, P.R., et al. 2006. The identification of 100 ecological questions of high policy relevance in the UK. Journal of Applied Ecology 43: 617–27, https://doi.org/10.1111/j.1365-2664.2006.01188.x.

Sutherland, W.J., Armstrong, D., Butchart, S.H.M., et al. 2010b. Standards for documenting and monitoring bird reintroduction projects. Conservation Letters 3: 229–35, https://doi.org/10.1111/j.1755-263X.2010.00113.x.