7. Health Care and Information Technology–Co-evolving Services

© 2023 David Ingram, CC BY-NC 4.0 https://doi.org/10.11647/OBP.0384.02

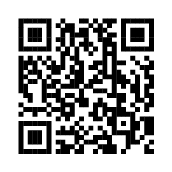

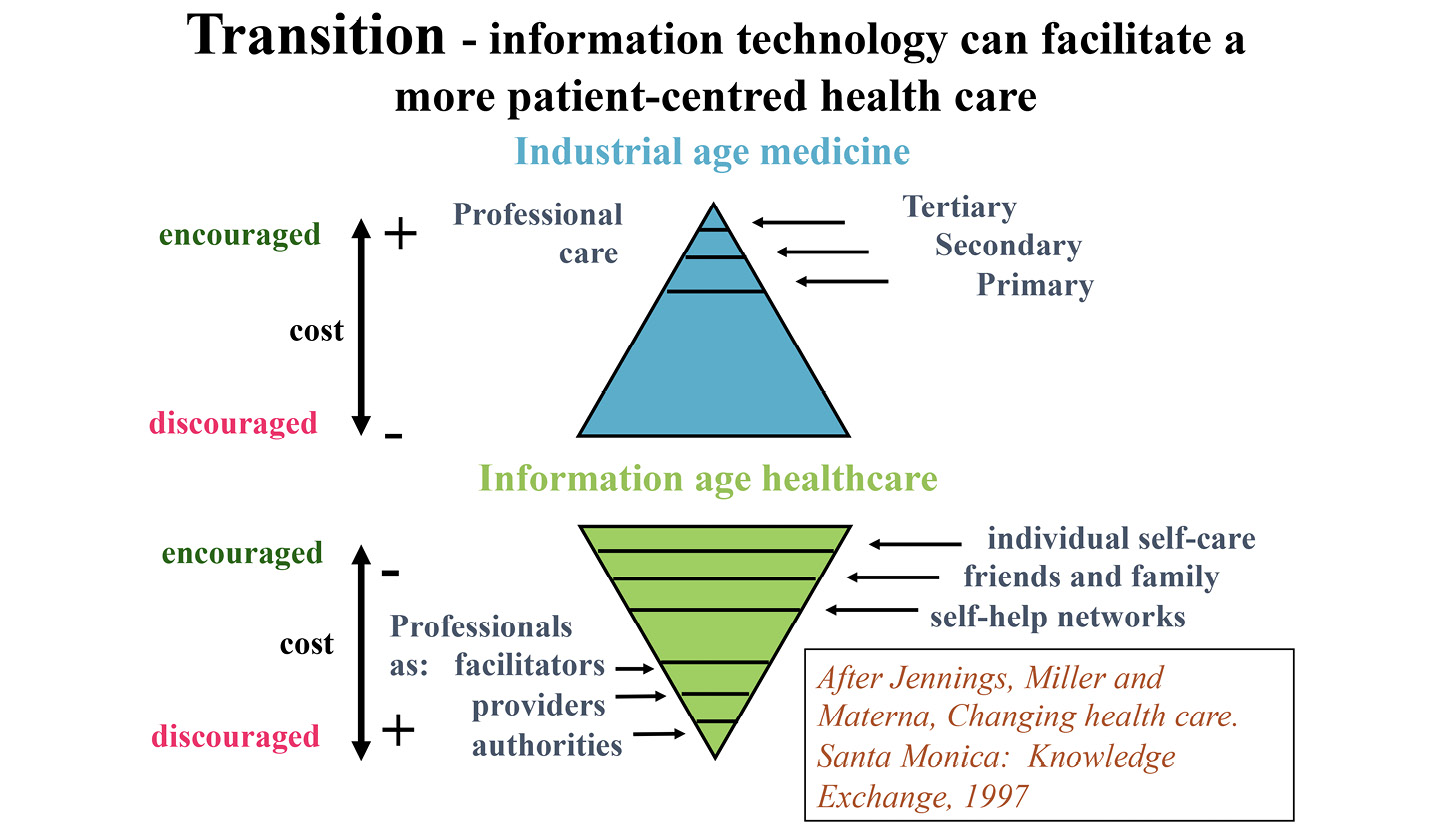

This chapter tells a story of seventy-five years of coevolution that has connected the practice of health care with the science and technology of information. It moves from experience of health care in the remote village life of my childhood to that in global village life today. It explores decades of transition onto a new landscape of disciplines, professions and services, played out within rapidly changing social, economic and political contexts. This transition has been described as turning the world of health care upside down, from an Industrial Age to an Information Age–the former grouped around service providers and the latter with a more patient-centred focus. Changing means and opportunities for preventing and combating disease have succeeded in saving lives and extending lifespans, albeit with increased years of ageing life often spent living with chronic and incurable conditions. The contributions of good nutrition, clean environment, shelter, sense of community and security to longer lifespan and healthier lifestyle, understood now in greater detail, give pause for thought about the balance, continuity and governance of health care services. Three contrasting commentaries on this era of change are introduced–from industry, science and social commentators of the times.

With the arrival of new measurement and computational methods, spanning genome and physiome science, population-level informatics and now machine intelligence, the Information Age has pressured health services with continually changing challenges, characterized by what have been described as ‘wicked problems’, the nature of which is discussed. Wholly new industries, providing products and services for diagnosis and treatment, many of them increasingly offered directly to citizens, have grown in scope and scale. In an era when powerful new treatments have come with increased risk of harm to patients, ethical and legal aspects of care services and their governance frameworks have come under increasing public and regulatory scrutiny. The changing scenes of education, assessment of competence to practise, accountability for care services, clinical risk, patient safety and research are introduced, all dependent on the quality of relevant sources of information.

This kaleidoscopic image of change sets the scene for discussion of information policy, which has moved increasingly to centre stage. The timeline of wide-ranging policy initiatives and related organizational changes in the UK NHS, which sought to improve safety, contain costs and improve outcomes for patients, is reviewed. This starts with seminal documents and policy goals from fifty years ago, highlighting issues identified then that have remained unresolved through the intervening years, despite huge public and international investment, and its opportunity cost in relation to competing priorities. Changing needs and increased expectations of citizens continue to challenge the status quo. This situation is reassessed, fifty years on, setting the scene for the programme of reform envisaged in Part Three of the book. The chapter concludes with a rueful reflection on the rush to computerize, characterized as a gold rush, which has contributed significantly to the anarchy experienced in health care in recent decades.

The most conspicuous example of truth and falsehood arises in the comparison of existences in the mode of possibility with existences in the mode of actuality.

–Alfred North Whitehead (1861–1947)1

In the legend of Daedalus and Icarus, Icarus flew too close to the sun, and the wax holding his wings together melted and brought him down. Had he flown too low on his escape over the sea from Crete, he would have risked his wings becoming waterlogged by spray, which could equally have brought him down. There was a narrow range of viable altitudes. The flux of energy emitted from the sun sustains life on earth and provides us with the energy and the wings needed to fly. It can also bring us down. Physics toys with the idea of information as a form of energy. Information is in continuous flux in life, and its corruption or misuse can bring us down, too. Genetic mutations, epidemics, manipulative distortions of news and financial crashes all have common threads of information in flux.

Tracking photons from the sun into the cascades of mechanisms in living organisms is fascinating science. Tracking information of all kinds through the environment and human society is hard to think about logically and carefully, but important, too. It is not experimental science, in the same way that economics and sociology are not and cannot be. There is only one laboratory, and little rigorous controlled experiment is possible. We can base decisions on an imagined and projected reality, but Whitehead’s caution about this, headlined above, must be heeded. In times of great change–and contemporary information anarchy signifies great change–black swans arrive, flap their powerful wings, and multiply.

In seeking health care benefit from investment in information technology, we should heed the story of Icarus and avoid flying either too high, buoyed on hubristic wing and feeble prayer, or too low, unimaginative, incurious or cripplingly risk-averse. In the slowly maturing landscape of health care information systems, there lurks much ageing and obsolescent string and sealing wax.

The scope of this chapter is very large, as was that of Chapter Two on knowledge, which led into Part One of the book. It seeks to provide a historical context for the changing face of health care, in its transition into and through the Information Age. The chapter sets the scene and provides the basis of the perspective and proposals of Part Three of the book, which is concerned with the goal of creating an information utility that can meet and sustain the evolving needs of health care in a wished-for, more mature and settled future Information Society.

Thus far, health care services have tended to bend to the limited capabilities and exigencies of embryonic and immature information technology. The challenge today is to refocus attention on the values, principles and goals of health care services, making use of today’s considerably different and increasingly mature information technology, to live up to and improve on these, while continuing the exploration of new needs and potential that arise. As of today, the words of national information policy continue to mirror much of what was set out fifty years ago, dressed now in the dazzle and hubris of contemporary discovery and hype. Meanwhile, throughout the National Health Service (NHS), especially in remote locations far from London and other major cities, teams struggle with obsolete desktop computers and user interfaces, lagging far behind those they use in their personal lives at home. How can efficiency and improvement truly be the focus of policy when basic tools of personal productivity, available now, remain withheld, and much of the resource available for innovation is focused on futuristic ambitions of as yet unknown efficacy and efficiency?

The next chapter, Chapter Eight, which leads into Part Three of the book, focuses on the changing nature of health care and an information utility matched to its evolving requirements. This is turning the world upside down, from what has been described as an Industrial Age preoccupation with disciplines, professions and institutions, to an Information Society focused on citizens and professionals, and their co-creation of health care in the communities they live in and serve. This brings a new perspective on roles and responsibilities at all levels, from the local to the global, with new focus on the balance, continuity and governance of trusted services, and on teams and environments capable of leading and delivering them, including in support of education, research and innovation.

To be brazenly provocative for a moment, just to highlight the challenge and cost of wasted opportunities: we have sometimes spent ten times too much, badly, ten times too slowly, and achieved a tenth of what we can and must now realize from investments in information technology if we are to emerge from the past decades of information pandemic in health care. A paper from the Humana Foundation, highlighted in a recent report on health care trends by the Deloitte Consultancy, concluded that a quarter of expenditure on health care in the United States is wasted money.2 This is in a health system that spends much more than international comparator systems and is rated as achieving rather less, overall, for its citizens. The report signalled a major reorientation of expenditure over coming decades, away from ‘process and money’ focused services towards ‘outcome and value’ focused services, very much in line with the vision I am peering towards in Part Three of the book.

In Chapter Eight and a Half, mirroring Julian Barnes’s parenthetical Chapter Eight and a Half in his A History of the World in 10½ Chapters, I describe major initiatives into which I have put much of my personal creative effort of the past thirty years. For me, these hold the key to reimagining the current Pandora’s box of health informatics, to support an oncoming reinvention of health care services. They hold important lessons about how we should set out to make and do things, as much as about what we set out to make and do. In saying this, I fully recognize and welcome the fact that such ideas must become embedded within viable and successful supporting businesses, as well as in new health care services. I, myself, have not been sufficiently interested in, or capable of, giving such a commercial lead. I have, though, worked without financial reward to support and collaborate with people brave and competent enough to do so, and have been fortunate to have had a role and remuneration from academic employment that enabled me to do this. From this position, I have all the while argued for and held to a vision of the common ground on which I believe future commercial endeavours must be based, if they are to succeed in their mission of supporting a now essential programme of reform and reinvention of health care, matched to the evolving needs of the coming Information Society.

The question then arises as to how to create and sustain an information utility which serves the wishes and needs of citizens, by achieving greater and enduring rigour, engagement and trust in health care information and infrastructure, based on standards of consistent, coherent, affordable, well governed, safe and sustainable systems. This is the theme of Chapter Nine, which ventures into the sometimes-contentious world of Creative Commons, open standards and openly shared tools, methods and software.

Health care services seem always to be a work in progress and in an agitated state of flux. Circumstances, and ways of thinking about them, change continuously, as do political ends and the means for achieving and financing them. If the reorganization of health care services at a national level might be compared with passage through the gate described in T. S. Eliot’s (1888–1965) poem Little Gidding, it seems that we have gone full circle several times, seeing the gate anew each time.3 From the centre of government, it is inevitably a high-level view, as if from a helicopter circling above the fray. It is rather like the image of President George W. Bush, filmed viewing the floods caused by Hurricane Katrina from Air Force One, the presidential jet, as it circled overhead!

In contrast with the poet, we cannot reasonably claim that, based on experience and learning gained in each circuit, we are seeing the gate more clearly, as if for the first time. We are seeing a different gate in a different context. Some of its structures are old and some are new; changing times rot and weather them. Some of them are hardy and others less so. Maybe different materials would fare better, but the downsides of new materials arrive with them, too. The enduring thought and perception after each circuit remains, however, of a rickety gate in need of fixing. We should always strive to make things better and more equitable, while recognizing that life itself tends towards becoming a rickety gate, and that health care services cannot always fix it!

This chapter now traces the recurring dilemmas about health care experienced through the Information Age, alongside the social, scientific and professional contexts of their times, the advent of information technology, and the information revolution it heralded. I draw on my childhood experience of social care and my career-long engagement in academic and professional communities of health care around the world. I start with some memories of health care in my childhood, revisiting the remote English village life I lived then.

Village Medicine–Snapshots from Earlier Times

Miss Marple, the amateur sleuth of Agatha Christie’s (1890–1976) detective novels, lived her life in a small village. She claimed that all human nature was revealed in the observation of village life, and that this was all she had, or needed, to go on in solving its crimes. Village doctors did not have, or need, a lot more in diagnosing its illnesses.

Two of my great aunts lived in a tiny village, Hawkesbury Upton, on the Cotswold Hills in rural Gloucestershire. The family ran the village shop, which doubled as the pharmacy and bakery, and their uncle was the village doctor. The shopfront window had large glass flasks on display, each filled with a different coloured water, the trademark of a pharmacy. The doctor’s surgery was immediately behind the shop and the family lived in a small cottage, in a row of them behind the shop building. Each cottage had a large back garden, and a small driveway for horses and carts led from the front of the cottages out onto the road. Originally, of course, there was no electricity and there were no cars, just water and limited local drainage. Within living memory, relatives had walked the twenty or so miles to and from the city of Bristol, to sell their wares in the markets there. I recall my aunts’ mention of the famed Dr. Jenner in their stories of village life.

Edward Jenner (1749–1823), the pioneer of vaccination and founder of the science of immunology, had lived and worked nearby in Berkeley. He trained in London at St George’s Medical School. In his early village life, he observed that the women who milked the cattle appeared to be immune to smallpox, protected by their close contact with cowpox. He had himself been painfully inoculated with pus collected from patients infected with smallpox. This led him to conduct experiments on combating smallpox through vaccination. A person of very wide scientific interests, he devoted much of his time to developing the method.

Smallpox is thought to have emerged ten thousand years ago in Africa and spread to Europe between the fifth and seventh centuries. It was frequently epidemic in the Middle Ages, was taken to the Americas by the Conquistadors, and spread elsewhere around the world. A spread that then took centuries can now occur in weeks and months. In 1797, Jenner submitted a paper seeking to alert the Royal Society to the importance of vaccination, but the idea was rebuffed as too revolutionary and he was told to go away and do more work. There was a powerful anti-vaccination movement in those times, too! Vaccination was subsequently recognized as of huge benefit to the country’s health, but Jenner did not pursue it for personal gain, and his income from other sources suffered as a result.4

My family often visited these great aunts as they lived on into their mid-nineties, hauling themselves up and down the very steep staircase in the cottage, cooking on a coal-fired range, gardening and talking. My grandmother, from the same family, had diabetes. Equipped with tiny weighing scales and a spirit flame to sterilize insulin injection needles, she showed us grandchildren how to manage her medication and diet. Pneumonia struck and killed her, in the same village, in her early eighties.

In their childhood, my great aunts told us, patients would come to their doctor uncle for such insight, advice and medicine for their ailments as could be provided, and often comfort and encouragement were the most useful. But there was trust in, and expectation of, medicine and cure, and this had to be addressed, too. At the end of the consultation, a note or prescription was written out and sent through to the shop. Among these, they said, was sometimes a request to dispense doses of ADTWD, which was duly acted upon. Laughingly, they joked that this stood for Any Da… Thing Will Do!

It sounded a bit harsh and unkind to our ears, no doubt, but it was probably not so much a dismissive prescription for the worried well as a rueful reflection of the reality that the problem was beyond medical scope or means. It is easy for a stage play to dismiss with laughter the craft of the physicians managing ‘the madness of King George’, and his then-unrecognized porphyria, by extraction of vapours, or the regulation of high blood pressure by letting blood, but ways of thinking about illness, and attempts to combat disease, are very much the art of the possible in their time, place and wider context.

There are and have been many doctors in my immediate family. I wrote in the Introduction about my polymath uncle Geoffrey, a casualty surgeon, as the Emergency Medicine speciality of today was then known. My mother’s other brother, Jack, a general practitioner (GP), sadly took his own life at a young age. Medicine can be a very tough profession. Doctors of today are cast in a different mould, no longer living on, or placed on, pedestals. The medical arts have been demystified in the Information Age, while the expectations placed on them have grown.

I recall another family visit, to the garden of my great uncle Edwin, a retired GP, at his house in Southsea on the coast of Hampshire. My father was a keen gardener. He gardened on a large scale, feeding twenty-five children and staff from a huge, partly walled kitchen garden in the twenty acres of land belonging to the children’s home we grew up in, run by my parents. He produced enough to send to other children’s homes in the county. I can see us, now, gathered in the garden in Southsea and Uncle Edwin talking about his life as a GP. He showed us the device used for excising infected tonsils. In earlier times, this was commonly done by a GP, with the patient, usually a young child, lying chloroformed on a table in the surgery. He pulled up a cabbage and demonstrated the procedure by chopping off the stalk. I can still see that image in my mind. I was six years old and had recently had my own tonsils removed, after a long period with persistent sore throats, so my experience of the episode and the post-operative pain was still fresh. Maybe he was thinking it might make me realize that I had come off lightly!

My own most serious childhood brush with acute medicine came when I crashed while riding my new bicycle, suffering severe concussion and bruising and remaining unconscious for a long time. I had been racing along the long gravel drive from the gate to the house of the children’s home, against one of the other children, who was running. The beloved bike had been renovated and painted by my dad, as a birthday present when I was about nine years old. I came to, lying on my parents’ bed with the village GP in attendance and worried parents and others around. I was sick, aching, cut and sore, severely concussed and confined to bed for days, and then slowly nursed back to recovery at home. No ambulance, accident and emergency department, or hospital attendance. Just the one GP covering several local villages, his box of tricks, bed rest and my parents’ care at home.

In everyday life, care was largely based on domestic skills and country folklore: gargling salt water for throat infections, inhaling menthol vapour for colds and lung congestion, and taking aspirin for pain relief. My great aunts took half an aspirin tablet every day throughout their adult lives, as an anticoagulant, they told me. In my village, such remedies were available at the small village general store, which doubled as post office, bakery and grocery, although most families grew vegetables. Village communication centred around the primary school for fifty pupils, church and church hall, pub, sweets shop, farmyard, woodyard and the village bobby’s (policeman’s) house. School dentistry came in the form of a dental team, who extracted numerous rotten teeth in their mobile caravan-based surgery parked in the school playground. Those awaiting their turn for so-called ‘laughing gas’ were not laughing, but subdued.

Health Care Services Today

My childhood village is no more; it now has quite different global contexts and connections. Geography no longer functions in the same way as a moderator of information, service, expectation and demand. Health care services today are complex beyond recognition. They are separated but not separable; managerially segregated more than integrated. The village health services of my childhood were largely centred on care, as when dealing with my severe concussion after the bicycle accident. They are now more heavily focused on treatment. Resolution and palliation of exacerbated chronic back pain, in city and village today, are predicated on access to, and length of queue for, physiotherapy, X-ray or magnetic resonance imaging (MRI) scan, surgery and tolerance of powerful analgesics. Regular exercise classes to guide and support are mostly out of scope, save for those who can pay.

The fragmentation of efforts to treat and care has highlighted and exacerbated the difficulties of maintaining resilient balance and continuity in what is done, and of sustaining ethical governance of the services and technologies employed. This is especially so for services working at the interface of mental health problems, physical disability and what are termed illnesses of poverty. With the increasing range and effectiveness of interventions have come increasing needs for care, especially in relation to chronic and incurable diseases, and a lengthier old age. Much of the caring load is shouldered by families and friends at home, and by the goodwill of neighbours and community volunteers.

Aftermath of War and Seven Decades On

In the UK, experience and attrition of the Second World War was followed by years of hardship in the reconstruction of economy, buildings and lives, buoyed by a spirit of relief and hope for the future. The cost and destruction of wartime created a new ground zero. It opened the way to radical new thinking, with openness expressed through mutual trust in common endeavours.

The hope for transforming change was notably stimulated by William Beveridge’s (1879–1963) report on social services, which had been published in 1942. Politically and professionally contentious at the time, but striking a chord in the country at large, it advocated, and came to underpin, the reframing of health care services. Its wider focus echoed the social deprivation experienced in the years of recovery from the 1914–18 war and in the economic collapse of the 1930s, which had shaped my parents’ lives. My father’s brother, once successful in his work, never recovered his zest for life after many years of unemployment and poverty. The report was a powerful signal that shaped policy of the early post-war years. It used graphic language to describe the need for battle on five fronts: want, disease, ignorance, squalor and idleness. Elimination of poverty, a national health service, universal education, good housing and full employment were adopted as essential elements of national reconstruction.5

The UK National Health Service was established in 1948, when I was not yet three years old. It was thought of as a central organization of the professional practice of medicine. Nurses and nursing care were generally thought of as subservient to doctors and medicine, in both gender and professional terms. A generation of young men had died or been severely disabled in warfare, and this loss echoed sadly in the lost life opportunities of many women of those times, and of returning soldiers.

In later decades, battle-scarred from his efforts to promote international focus on climate change, my university physics lecturer John Houghton (1931–2020), who died early in the pandemic from complications of Covid-19 infection, wrote that humankind might only take such major issues seriously after experiencing a disaster. In the information era, advancing technology has increased the potential scale, spread, impact and cost of destructive human-made mess-ups. Today’s experience of disease, and of threat to livelihood, in a viral pandemic may also prove a spur to new thinking. It is in no way the same experience as the armed conflict and deprivation of wartime, but there is similarity in the response to the fears and uncertainties of the times, expressed through mutual support within close neighbourhoods.

Published in 2020, when for the first time there were more people aged over sixty-five than under five, David Goodhart’s characterization of the social and political crisis of today is again radical in its thinking.6 His diagnosis is of an accumulating underlying imbalance of head, hand and heart in social, economic and political life. Goodhart observes that society has split between the poles of globalism (characterized by what he calls ‘anywhere’) and localism (characterized as ‘somewhere’). He describes an imbalance in the social status, value and reward accorded to the contributions of citizens, reflecting head (cleverness), hand (skill in making and doing) and heart (care). His anywhere and somewhere are metaphors for interacting global and local contexts that play out in people’s lives. The curriculum of medical education today emphasizes integration of knowledge, skills and attitudes, mirroring Goodhart’s triangle of head, hand and heart.

The 1942 Beveridge Report and the 2020 Goodhart book combine observation of an ailing society in two different eras with accounts of how its ills came about and reasoning about what needed to be, could be and should be done about them. Diagnosis and prescription of treatment for an ailing society and for an ill patient bear some comparison.

In a clinical setting, with a patient who presents as sick, the professionals’ goal (with which the patient tends to concur!) is to help them cope and get better, as far as possible. Easily said–sometimes clearly, straightforwardly and quickly achieved, but often not. Treatment and clinical management goals set, actions taken and their reasoned basis articulated, evolving context monitored, progress made and outcomes resulting: all of these provide evidence to inform the review of what was done, how and why, and of the possible need to adapt and change approach–maybe more of the same, or a different medicine, and maybe less.

In clinical practice, failures tend to disappear out of focus. Patients die, problems of acute concern are resolved, or they dissolve into longer-term concern for the effective treatment of chronic illness, adjustment of lifestyle and supportive care. They move beyond clinical professional scope into the scope of the coping ability and capacity of patient, family and local community, and of the local and global support services available to them. In society more widely, failures of health care policy may lead to crisis and breakdown; they may persist, whether adapted to or unchanged; and they may amplify or decline. Global policy and decision makers perceived to have failed, or to be no longer relevant, lose credibility and power. Wider ailments of society become local problems of personal health care–the Beveridge giants, and the Marmot inequalities of health. Policy for health care easily goes astray in the noise and bias of changing times.

Chaotic presentation of illness in a patient has first to be assessed, and immediate priorities coped with, before underlying problems identified can be treated and managed clinically–usually, the earlier addressed the better. Health care starts with patients, family and community. These people are on the frontline of early awareness and experience of the signals and noise generated by the onset of disease. The health care systems must first connect with, cope with and reflect that reality, and be demonstrated and observed to do so. The global and local realities of health care policy, systems and services need to cohere–and be seen to do so, for citizens and professional teams alike–if they are to prove efficient and effective, both in deciding on and achieving their goals. Beveridge, Goodhart and Marmot attest that they do not cohere. The advent and anarchic patterns of adoption of information technology have played a significant part in both revealing and exacerbating this situation.

In the light of recurrent failure, questions arise and persist concerning not only what was aimed for, but also how it was approached and whether it has proved to be, and remains, a realistic goal. They persist in the context of information policy for health care. What and where is the common ground on which citizens, local communities and professionals engage? What and where is the common ground on which health care systems and services engage? What and where is the common ground of information technology and information for health care?

In such deliberations there are helicopter views and views from ground level. High-level views look further, but less specifically and sensitively. Ground-level views may not see beyond the reality that lies nearby in their focus of interest, and can thus obscure, dominate or preclude wider perspectives. Economists talk of macro- and microeconomics. Macroscopic focus is on whole systems, broad brushstrokes, the big picture and head-up overview. Microscopic focus is on parts of systems, fine details and the hard, head-down graft of coping with and implementing action, close at hand. The two may be pursuing the same or quite similar goals, in one way or another. One is mainly about what is sought, the other mainly about how to achieve it. These are matters of head and hand, and success often depends on a good heart. Head, hand and heart cannot always be balanced, but they need to connect macro-level goals with how those goals are tackled at the micro level. Where they fail to do so, they easily stir angry feelings on all sides.

An incident comes to my mind, involving a rather cantankerous professor of surgery, whose weekly ward round I was invited to attend in about 1969, as I mentioned briefly in Chapter Five. I was also invited into an operating theatre, to observe the innovative open-heart surgery of the times, made possible by extracorporeal blood gas exchange. I have vivid memories of those wavering first encounters with acute medicine services! The surgical professor specialized in a technique of gastric surgery that severed the vagus nerve, to treat patients suffering from stomach ulcer–a common approach, then, to combating the erosions stemming from stomach acidity.7 He approached the bed of a clearly very unwell and seemingly very depressed patient he had operated on a week before and enquired after his wellbeing. Seeing and hearing the patient’s considerable distress, he offered crisp words of sympathy and turned quickly to the ward sister, suggesting she might offer him a glass of sherry each day! Walking to the next bed, closely followed by his senior registrar, he turned to him and said, loudly and angrily, that he did not expect to see that patient still there at the next ward round, and to ‘get that patient well!’ I can see the scene in my mind as I write.

Those were different times, and what for us seems a chillingly autocratic, archly detached and ‘Doctor in the House’ manner of directing clinical teams was then more normal. But the relevant concern was, and remains: how? This situation, in microcosm, is what can easily happen with health care. If one person cannot cope, another gets reprimanded, and rides the punch as best they can. People do get angry when even their best efforts and intentions run aground. As with the irate professor, intractable challenges give rise to a good deal of anger and finger-pointing within health care–from the top floor of the NHS in Whitehall and its politicians and managers to the most remote parts of the community served. As with the hapless senior registrar, teams at the bedside and in the community are all too easily chastised, resulting over time in them losing motivation and sometimes, themselves, falling ill. It takes considerable and invaluable dedication and balance of heart and mind to steady the hand and keep going. Those facing these situations can easily become like the depressed patient in bed. Senior doctors in that long-ago cantankerous professor’s team told me that he drank heavily in his office at work and operated unsafely. He was maybe depressed, too–he died quite young. Health care, like teaching and policing, is a tough profession–tough to organize and tough to cope with.

The Information Age is revealing the inequalities and imbalances of health care in a new light. To the extent that computerization fails to engage realistically with their resolution, it exacerbates them. To be a creative agent of their resolution within the oncoming Information Society, the information utility this book is arguing for must be conceived and created as a balance between local and global services, and the needs and experiences of those they serve. Resilient balance, continuity and governance are central themes it must pursue. BCG (Bacillus Calmette–Guérin) vaccination was highly effective against the tuberculosis epidemic. Information utility must focus on another BCG–Balance, Continuity and Governance–to counter the current plethora of unbalanced, discontinuous and unregulated sources of information. Citizen engagement, professional teamwork, education, innovation and professionalism in health care services are all in need of the common ground that a good information utility can enable and support.

The National Health Service

In Medical Nemesis, Ivan Illich (1926–2002) described how, in the fervour of the French revolution, it was promised that liberty, equality and fraternity would banish sickness–a national health service would take charge.8 The promises of the UK National Health Service were lofty ideals, but not quite that elevated!

The founding father of the NHS, Aneurin Bevan (1897–1960), was a beacon in my parents’ lives. They were strong believers in the Beveridge and Bevan missions and relied and acted on this belief, thereafter. As mentioned before, my dad left school at fourteen–his father had disappeared to Australia and his mother died of cancer when he was a teenager. My mother left her domestic science college, and a subsequent period on the staff at Gordonstoun School (including looking after Prince Philip when he was a schoolboy there), to head off to Catalonia to look after refugees from the Spanish Civil War. The ever-changing landscape and experience of health care services over the following decades bemused and upset them in equal measure as they grew older. They experienced the evolving science and technology underpinning its methods, and the professions and organizations delivering its services, through the decades of challenged and changing post-war society. It was a kaleidoscope of images and feelings–gratitude, trust and hope mixed with growing experience of incoherence, inconsistency and disappointment.

From the Beveridge Report had emerged the policy and plan for a comprehensive national system of social insurance ‘from cradle to grave’, paid for by working people and providing benefits for those unemployed, sick, retired or widowed. It laid the foundations of the NHS in a society where medicine was less capable, diseases more often short-lived and ageing more rapid. Childcare, and other care services, such as convalescent care, were organized in residential settings, such as the children’s home where I grew up. Mental hospitals provided last-resort containment of the uncontained or uncontainable problems of mental illness. They were awful places to experience. I did so in a volunteer work camp in my teens, making a garden for the residents to enjoy, and, in later years, visiting family and friends unlucky enough to need their care.

In this policy framework, power over the management of the health system and practice of medicine became an increasing concern of central government. Specialization of services, and their associated professional skills, increased alongside advances in knowledge of disease and availability of effective treatments, reinforcing the case made for centralization. Specialism and its associated research, education and regulation could only advance, and be afforded, for the whole population, when organized at district and regional levels and in national centres.

Specialist services are primarily acute and episodic in nature. Even people living in a remote village can, for a time, accompany a child being cared for within the unique and necessary environment of a national centre.9 But it is not home, and life must continue at home. There is a natural wish for acute services to be conducted as close to home as possible, and this brings tension–communities campaign for nearby hospital facilities and defend against their loss. Effective acute services delivered at or near home are much desired. A better balance of hospital and home is increasingly within scope of the Information Age.

Social care services are, by their nature, longer term and predominantly less specialized. They are needed, and need to operate, near to home. They have nationally defined frameworks that guide and support good practice, but the management of these services rests with local government. The disjunction of operation and governance of health and social care services is destabilizing and inefficient. It has reflected the lesser social and professional status of care services, seen as a priority of heart more than head. Care services are matters of hand, as much as are those of the surgeon’s hand, but, as with many such skills, in Goodhart’s view, they are not valued as such. A patient looked after with thought and kindness may cure themselves of a stomach ulcer and not require surgical or pharmaceutical intervention. Their health may never fully recover from laparotomy and section of the vagus nerve, or they may experience harmful side-effects from prolonged drug treatment. The cost and benefit of helping a patient cope with and find resolution of a stomach ulcer in a caring community and home setting, with due diligence that nothing more sinister is evolving, bear no comparison with the cost of the potential medical and surgical alternatives. The achievement of such a win-win scenario for patient and health system will depend significantly on a better connection between these different worlds of health care–a more individual citizen- and patient-focused information utility will be central to the pursuit of that goal.

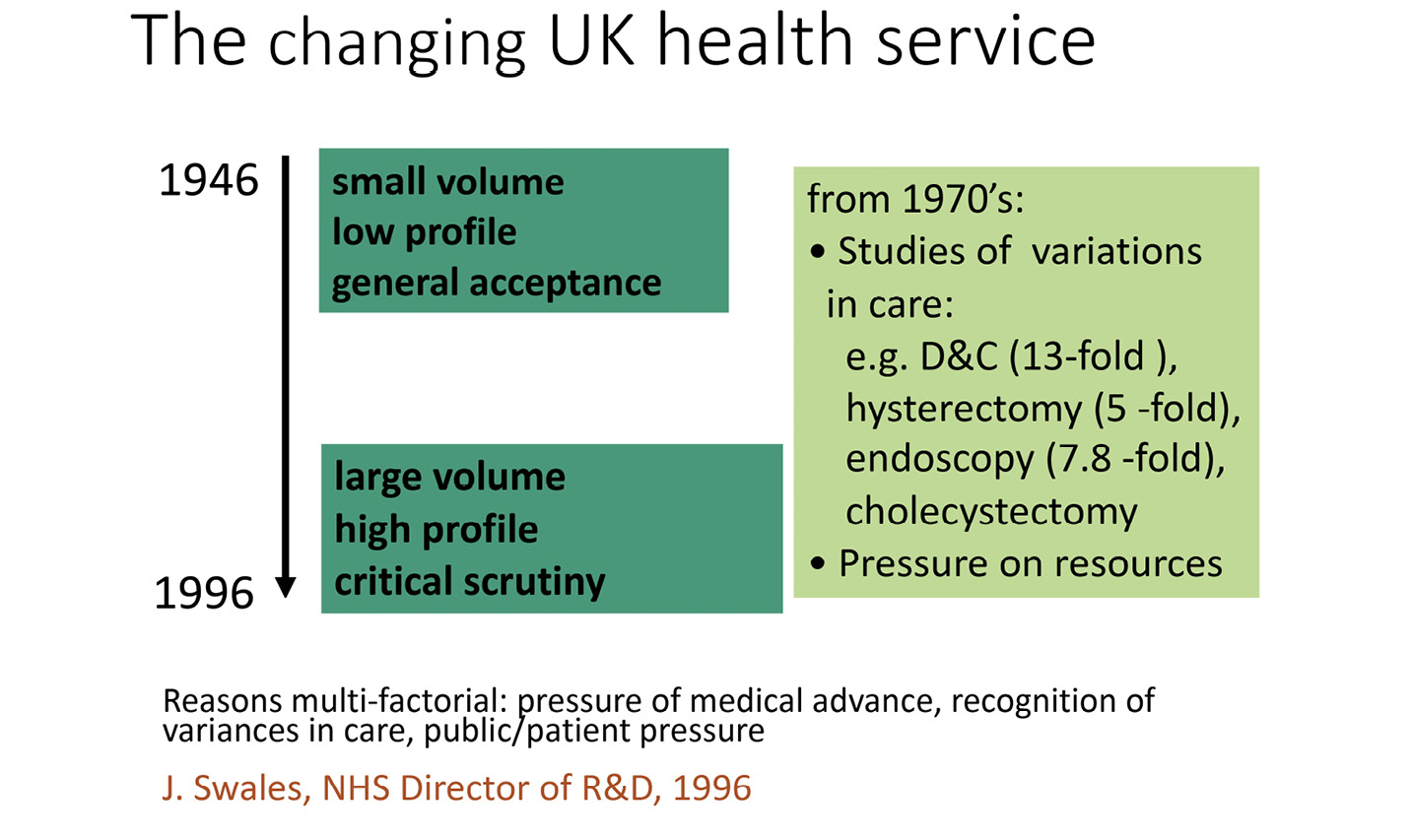

Fig. 7.1 The changing NHS from 1946 to 1996–after a lecture of John Swales, Head of the NHS Research and Development directorate, 1996. Image created by David Ingram (2010), CC BY-NC.

In celebrating the coming fifty-year anniversary of the NHS, halfway through my career, the then head of Research and Development of the NHS, John Swales (1935–2000), gave a seminal lecture at St Bartholomew’s Hospital (Bart’s), charting the changes in the service since its inception. He was a fellow Professor of Medicine and colleague of John Dickinson, and came there to conduct final examinations, I recall. They shared an interest in the aetiology and treatment of hypertension. In the lecture (Figure 7.1 is drawn from my notes of one of its slides), he observed that medicine at the outset of the NHS might be characterized as small, with low public profile, and enjoying general and uncritical acceptance–its interventions often being relatively ineffective but harmless. By comparison, fifty years on, it was much larger and more effective, while, at the same time, at greater risk of doing harm. It was a high-volume service and under much increased critical scrutiny. He highlighted the pressure of medical advance, variance in pattern and quality of care provided, and public and private pressure as underlying this changing pattern.

Risk and safety have become overarching concerns in medicine. As the tools and methods available have increased in power to do good, they have also increased in power to do harm if misapplied, either by misguided design or unlucky accident. New balances of risk and opportunity, cost and benefit, unfold alongside innovation. As we saw in Chapter Five, new ideas and designs often stretch the boundaries of welcomed, accepted, and trusted practice. Engineering experience and expertise is central in partnering scientific creativity and focusing and guiding its fruits to useful ends.

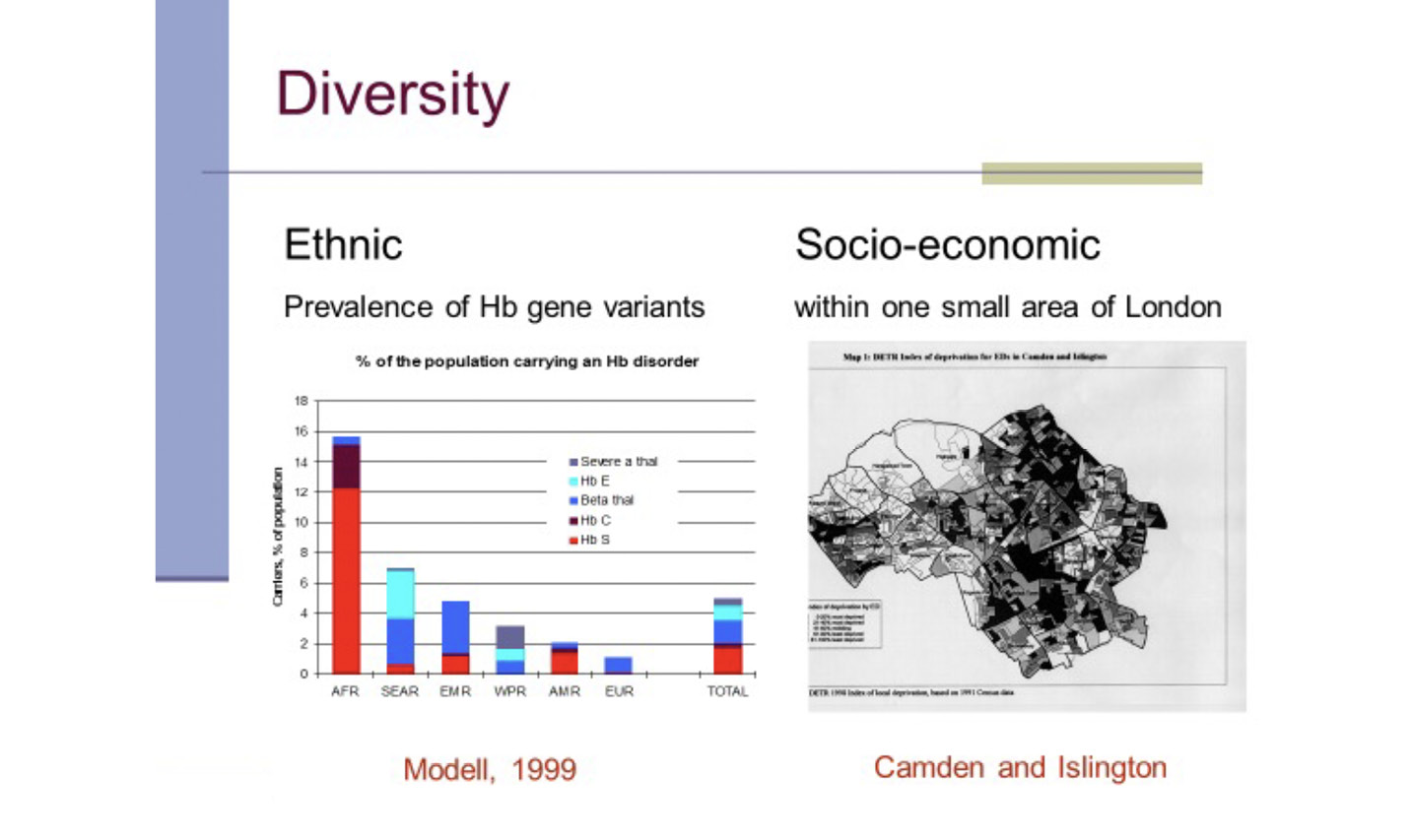

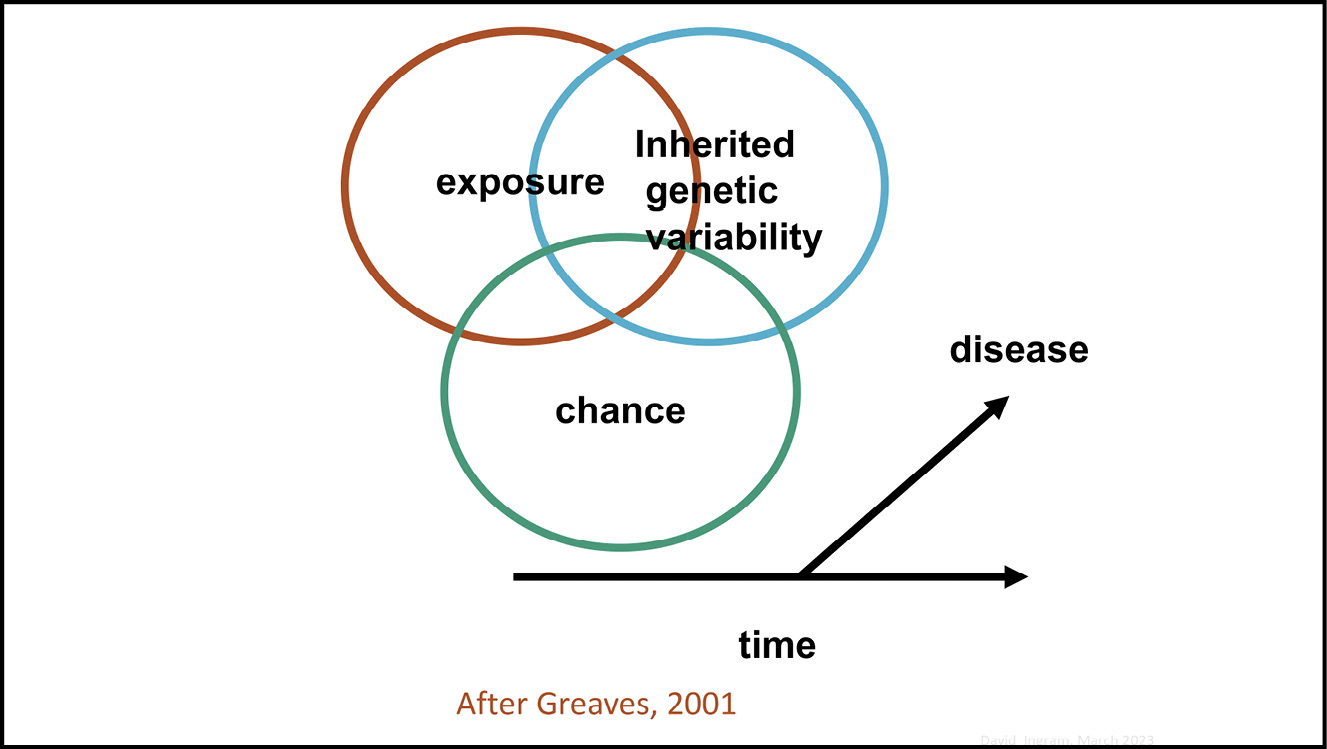

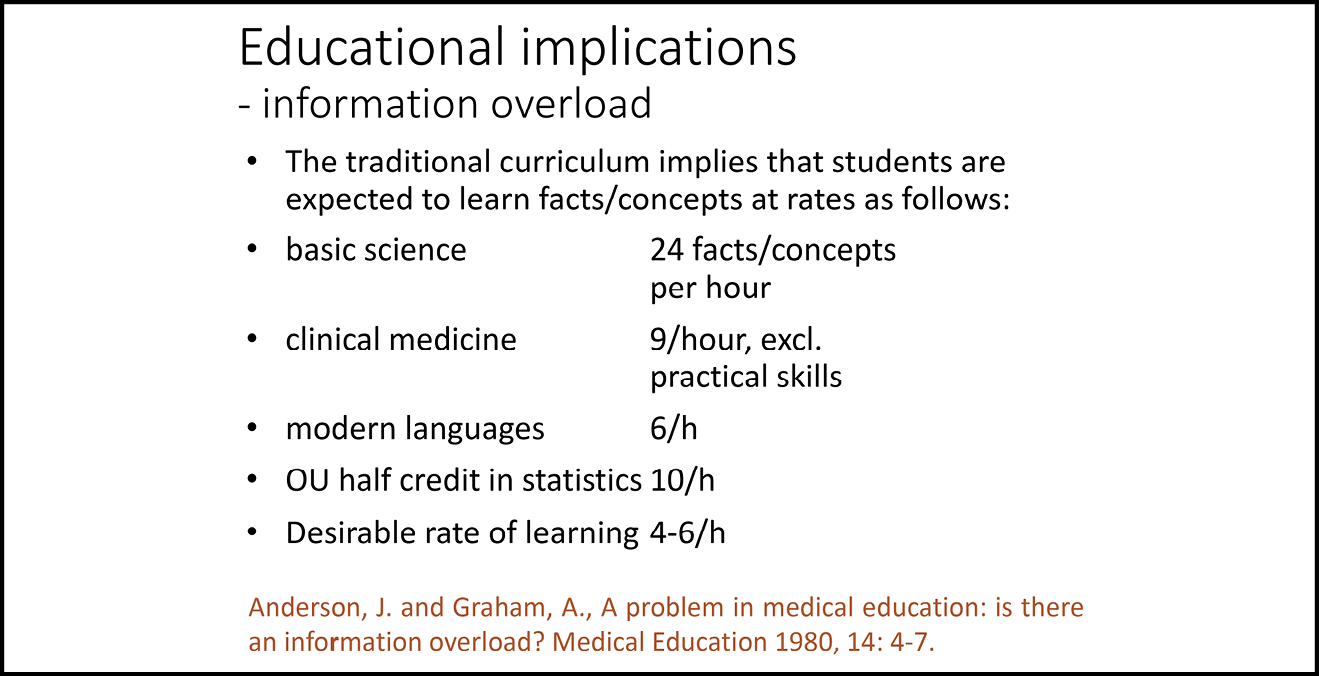

There has been significant change, also, in the demographic diversity of the UK population. Diversity and inequality of health care among different socio-economic and ethnic population groupings have emerged more clearly as of major significance and concern. They manifest in prevalence of disorders, effectiveness of interventions and inequity in their provision. They have many determinants, and Michael Marmot at University College London (UCL) has been a formidable champion of this important research and advocacy. In another dimension, there is considerable genetic diversity–as studied by my colleague Bernadette Modell in the context of her pioneering work, centred in North London and now influential internationally through the World Health Organization (WHO), which I profile in Chapter Nine. She focused on integrated community and hospital services for people who have inherited or are likely to inherit haemoglobin gene variants associated with the blood disorder, thalassaemia. The ethnic diversity of this variant, globally, and the diversity of socio-economic stratification in one London Borough, are illustrated in Figure 7.2.

Fig. 7.2 Maps of ethnic genetic diversity, internationally, and diversity of socio-economic stratification in one London Borough–after Bernadette Modell, 1999. Image created by David Ingram (2010), CC BY-NC.

Since its inception, the NHS has featured continuously at the centre of UK national and local politics. Different political perspectives have battled one another, aligning with different models of how services should be scoped, organized and managed–operationally, financially, commercially and professionally. In recent decades, ideas have swung like a weathervane, buffeted by centralizing, localizing, nationalizing and privatizing winds.

It has become a habit to impose additional breaking changes on ailing services. Review and reorganization have led to costly and unproductive waste of time and resource, at onerously frequent intervals. This has increased the burden on services that were already struggling to keep pace with scientific and technological advances, and to improve, achieve and sustain continuity of care. Resource needed at the coalface of care has been diverted to new organizations defining, pushing for, managing and regulating change imposed from above. The standard of ward-level accommodation on the ground has been allowed to decline–in too many hospitals across the country it is old, decrepit and unclean. This is not a conducive environment for treatment and recovery.

Many people work and seek meaning in their lives through service within the NHS: those who engage clinically and in social care, those who provide and administer support services, and those who manage services and their relationships with organizations beyond the NHS. They constitute a tremendous asset. But such appetite for and commitment to the NHS has tired noticeably in recent times. I have seen this when working with clinical colleagues close to the wards, through our clinical family’s experience, and in visits to family and friends being cared for.

These endemic problems of the NHS reflect its scale, range and diversity. It encompasses both laudably leading, and unacceptably and worryingly wayward, facilities and services. Viewed as a whole, governance is also fragmented and unwieldy, hampered by the separation of health and care policy and practice. As a result, information systems are ill-equipped to connect with and respond flexibly to local health care needs, and to advances in underpinning science and technology. Like a super-tanker delivering oil, which cannot slow or change course for many miles, even with the application of maximum thrust from its engines, the NHS is set in its course. It would be an unimaginable nightmare to reroute the delivery of oil by running a full tanker aground, mopping up the spillage and sending for another one! Repetitive rerouting of the delivery of health care services is a perilous course.

It is not good sense to project the responsibility for this complexity onto information technology (IT), either as cause or panacea; it is and can be neither. IT mayhem typically reflects poor understanding, inadequate capacity and capability, and poor practice. As a colleague sitting on an overseas national policy board for health IT remarked to me recently, its members know things are not good, have a limited sense of why, have little idea how to improve, but above all fear that what they decide to do will prove a mistake. It is little surprise that senior health managers tend to view too close a connection with IT as career suicide!

When I sat for a period on the equivalent national IT board for the NHS–populated, it seemed, mainly by battle-weary and sometimes rather cynically resigned managers–I was quietly removed for introducing what I saw as root causes of their dilemmas, which were perhaps too difficult to hear. Or perhaps my thoughts and ideas made no sense to their ears, and they knew better. The then Prime Minister was persuaded to commit many billions to a programme of investment for which the basic tenet and promise from the industry–in simplest terms, pay us enough and we will do it–proved substantially unsound. I watched this path develop in surreal meetings of consultants, companies, health managers and bemused IT departments in our local health economy around UCL. The consultants and companies were very well paid, the real costs in the health economy were hidden, and those keeping services running were distracted and wearied. It was not all bad–some good infrastructure did emerge, but too distant from the direct support of patient care that was needed.

Given that the NHS, corporately, speaks of itself as a learning organization, it is amazing that it retains so little knowledge of past policies and programmes, which have gone through multiple groundhog days of rebooted information strategy. Some things are, no doubt, best forgotten, but some of the experience could and should have been learned from, better. The root cause of the problems of IT legacy and underperformance in health lies in inadequacy of method, capability and culture–all problems of connection. They are all problems in need of a good and proven answer to the primary question: How? Answers to What? and Why? questions are endlessly rehearsed and reframed, with little added meaning, as evident from the track of policy statements over fifty years that I lay out below.

The how answers proffered to entice the opening of the public purse were typically slides and spreadsheets, presented by people who had never designed, implemented and operated such systems. Those listening to proposals for significant scale of action pursuant to policy implementation should be guided by a principal imperative–to be informed and critical when reviewing what is being talked about and promised. The response to the challenge of ‘show me’ was, too often, like pointing to a car at a motor show, hidden under covers before launch, to appetize but not reveal. And in any case, there were few experienced mechanics around, to recognize and understand an engine under a bonnet when they saw one. The who, when and where answers were a mixture of assumption and delegation. The domain had become a minefield of political, commercial and managerial mayhem.

It is a good goal to seek to use IT to help and support people, services and organizations as they adapt and grow, locally and across the now almost fully connected world. Wisdom in pursuing this would be to recognize a new culture of information as an organic entity, best served by identifying ways to nurture and help it grow well. How we do that matters as much as, if not more than, what we do. The only route to doing things better is to learn how to do them better. The NHS should have identified and learned from IT pioneers, who were already charting the way and building it around them. In practice, it marginalized them and placed its trust in hubristic and unmet promises from people and vested interests of less relevant track record, placing its chips on squares where burden was often added, more than relieved.

Balance

There are many kinds of balance and imbalance in play in health care. They reflect the complex contingencies of individual, family, community and environment. They span personal, professional, scientific, social, economic and political domains, and changes among them over time. Imbalance and inequality of health care have persisted and become further highlighted in the anarchy accompanying transition through the Information Age.

The human body is an autonomous entity, evolved to preserve homeostasis–resilient immediate bodily balance and long-term sustainability. Medicine is focused on supporting or restoring this homeostasis. Accidents and disorders of all kinds disturb and threaten this balance–mutation of genes, and the accident and shock of traumatic events, can grow to overwhelm both physiological and emotional balance. There is ever-growing scientific and clinical knowledge of the body’s homeostasis and how best to cope with and treat the disturbances and threats that arise. Metabolic and physical balance and resilience get harder to sustain with age–I know that from experience at my age, when dance and Pilates are proving amazing examples of how one can help oneself to maintain balance of body and mind, while keeping fit and having fun.

Good health care is a balance of giving and receiving, of what can and should be offered and done in support, and what can and should be expected and accepted, by and from whom. Everyone professionally involved in that balance is bringing themselves, and their knowledge, experience and expertise, to bear on supporting health and providing care. They are often exposed to extremes of human need and suffering of those they serve, and to difficult-to-achieve expectations vested in them, as the supporting professionals. They themselves have special needs. We all need to be cared for and we all need to give and receive care. There is, as ever, a balance of rights and responsibilities.

The centre of gravity, or point of balance, of the expectations and experience of patients and professionals receiving and delivering health care services is not a fixed point and has changed considerably over time, as has the trust that holds things together; very much so in the context of the Information Age. The fulcrum has not easily adjusted in keeping with this change, and health care systems have become overburdened and swung increasingly out of balance. This instability has led to overloaded services and related critically adverse events, litigation and enquiries, reflecting failure, dissatisfaction and public concern. Inevitably, such imbalance does sometimes manifest in thoughtless, incompetent and uncaring action of service personnel, with potentially unhappy, harmful and inequitable consequences for patients. But the high level of personal commitment of its coalface workforce is a common ground on which the professions pride themselves and the NHS depends, and that workforce sometimes experiences overwhelming personal pressure in delivering and sustaining wished-for high standards of care. Health care services look to be at their affordable limits in society. Something more than money is needed to restore balance.

The state of the health care system and the state of health of the individual citizen are connected. Governments may issue White Papers about ‘The Health of the Nation’ but, in their essence, health and care are personal matters. As with clinical decisions about the health of an individual patient, policy for health care services often does not have clearly right and wrong answers. It reflects a balance of advocacy and decision on behalf of both citizens and services. Patient care is nowadays seen, more explicitly, as a balance of a patient’s individual needs and wishes, the roles of professionals and services that are there to treat and support them, and the roles they themselves can and should play, in sustaining and maintaining their own health. Whatever the choices made, decisions reached and actions taken or not taken, there are consequences, for better and for worse. These balances have become more evident and explicit in the Information Age.

Continuity

Continuity of health care services is a central concern in need of closer attention. The anecdotes from my family’s village life were examples of the limited range of what was possible in the countryside, with radio but no television, and with the small community hospital, dentist, pharmacy, library and bookshops five miles away. Very few people possessed the rudimentary small cars of the era and there were only twice-daily buses to the nearby town. The next level of hospital service was centred twenty-five miles away. But there was, despite that, good continuity of care and communication, in community life and through local visit and telephone. By and large, people expected and were expected, to cope as best they could. Villagers feeling ill suffered more than they perhaps should or might have, accepted the realities of what was, and generally trusted in the good intentions of all concerned, with limited expectations and a generally good spirit, in my recollection. Expected lifespan was a lot shorter, of course. There were many inequalities of village life in the countryside, including burdens of disability and poverty, but the lone, multi-village doctor was not in the firing line of people’s dissatisfactions.

Health care services through the intervening decades since then have become more expensively capable and more extensive, specialized and fragmented. Specialized services exist within more tightly managed boundaries of professional roles and responsibilities. Consequently, the patient and their ongoing care and support needs are partitioned across many interfaces of specialism and organization. These interfaces are often seemingly ownerless. Each side has its own image and perception of the world on the other side of the interface. Admission and discharge across the interface seem akin to steps through the magic window of Philip Pullman’s trilogy, His Dark Materials, between different physical and emotional worlds of bodily health and continuing care.10

ConCaH, which stood for Continuing Care at Home, was a small national organization set up in the mid-1980s by an amazing GP pioneer, Bob Jones, working in Seaton on the southwest coast in Devon. He was a bundle of good humour and immense energy. In the 1980s, we worked together under the auspices of the Marie Curie Foundation, developing a videodisc-based professional educational resource entitled ‘Cancer Patients and Their Families at Home’.11 He asked me to become one of the ConCaH patrons, to represent the potential of IT to transform the then current scene in improving continuity of care.

One of the activities Bob pioneered was a series of one-day meetings, linked with the national Parkinson’s Disease Society, in which he was also active. The purpose was to bring together patients with Parkinson’s disease and the different professionals providing them with health care support, to share their different perspectives and experiences of their services. In the mornings, the professionals each separately described their roles and contributions–secondary and primary care doctors and nurses, community nurses, occupational health and social care teams–sometimes seven key workers for a single patient. In the afternoon session, patients being looked after by these people shared their experiences of the care and support that they received. This led into further discussion and re-visiting of the morning session.

One notable case study was of a family with whom education and employment services were also involved, and their description of a week in which there were twenty-seven unannounced and uncoordinated visits to their home. It was an extreme example, but differences in mutual awareness among professionals, and lack of coherent information and continuity of support for patients, were recurring themes.

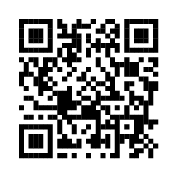

There is something reminiscent, here, of the Thomas Lincoln (1929–2016) story I recounted in the Introduction, where the extent of data collection in the management of severe pneumonia correlated with an inability to act effectively to combat the disease. There is also something of the example mentioned in Chapter Four, on the modelling of clinical diagnosis, where information overload was seen to confuse rather than clarify decision and action. And something of the information and entropy thread followed in Chapter Six, descriptive of order and disorder of systems.

Chaotic times can reveal underlying strengths as well as lurking problems. The experience of personal crisis can reveal and engender self-reliance and a stoic capacity to cope, as well as an inability to do so. But the discontinuity of care highlighted in the ConCaH story is costly and inefficient, as well as ineffective and unnecessary. We should not complain too loudly, though. This is a future we have created, and it can be created differently. Edmund Burke (1729–97), the Irish politician whose statue stands outside Trinity College Dublin, and which I often passed when attending meetings to examine students at the College, wrote in 1770 about the ‘cause of the present discontents’. He wrote that ‘To complain of the age we live in, to murmur at the present possessors of power, to lament the past, to conceive extravagant hopes of the future, are the common dispositions of the greatest part of mankind’.12

Disjoint sources of information foster the noisy complexity of information systems, obscuring signals that need to be seen and heard, and leading to the fragmentation of services that depend on them. There is an example to learn from, of things managed better than this. Within single and more acutely urgent professional domains, managed and treated by one well-led and focused team, services seem more often to be enabled to perform well. This was a key theme of Atul Gawande in his book, Better, where he described his survey and visits to regional centres of excellence in the USA, for patients treated for cystic fibrosis. His goal was to understand what made one better than another, in terms of their organization, leadership and teamwork, and the quality of service they provided.13

In chronic disease management, which interfaces hospital and community-based services and self-care, isolated records have not connected well, with gaps persisting and little continuity. Such disconnection has pervaded more widely in the Information Age. It has been estimated that, on average, of the order of twenty percent of professional time is spent managing information. Assembling good and useful data costs time and effort, of course, but twenty percent is a considerable overhead and imposes significant operational burdens on teamwork. If the information systems being fed are not well-tuned in support of health care needs, the loss of resource and capacity to treat and care is disabling–potentially making things worse, overall, not better.

Governance

Governance, which I take to include issues of professional ethics and regulation as well as oversight of services, has assumed heightened importance in the Information Age. The needs and legal requirements to handle personal data confidentially, and to meet more exacting standards for demonstrating the effectiveness of services and the safety of medicines and devices, have multiplied in recent decades. Professions are nationally regulated. Medicines and devices are overseen by national bodies, consistent with international agreements which govern the markets and industries that supply them. Information systems are slowly being assimilated within this framework. Health services embody a mixture of local and national governance and accountability, within the organizations that manage them and the communities they serve. Each level of governance has different requirements and views of operational records of care. Health services are, in the main, governed nationally, high up, and care services locally, low down. Both are experienced locally, by patients, carers and professionals. There is one operational reality to observe, record and account for. But there are many, often inconsistent, accounts of it, which can easily misrepresent and confuse.

Regarding local services, the situation is well symbolized by the Escher lithograph entitled Up and Down (1947; discussed in Chapter Six). The world as a small boy sees it, looking from low down, and the small boy as the world sees him, from high up. The small boy on the ground might be a patient. The viewer above might be a governor or regulator, of one sort or another. The join of perspectives is seamlessly fantastical.

Systems of governance seek to provide balance and continuity between the dual perspectives represented in this image. For this to succeed, they need trusted common ground on which to operate. To be effective, this requires coherent data collected with a minimum of burden on practice. Critical incident reporting across the NHS, involving thirty different formats of data collection, is not a happy state of affairs, as I refer to in the section below on the ‘wicked problem’ of health policy. It reflects a general lack of coherence of data and record that has become progressively unmanageable and ungovernable.

People do not doubt the good motives and intentions in play on all these levels. But the picture one sees and the evidence one collects depend on where one is looking from and the spectacles one is wearing. It is a picture in which the viewer is also a participant, as the Escher Up and Down lithograph depicts. The boy pictured looking from the bottom up sees himself in the picture seen looking from the top down. A similar illusion features in Print Gallery (1956), where the picture is of a boy looking at a picture in a gallery, which morphs, as one’s eye moves (through top left, top right, bottom right, bottom left), into a picture of himself, within the gallery, viewing the picture.14

The experience and resources on which all services draw are best shared efficiently, cooperatively and collaboratively, and supported with coherent data, not beset by unnecessary duplication of effort. This requires common ground of information systems, not just a postal service between different ones. Although that too can be useful, it is not enough. Policy failure at this level reinforces damaging professional and organizational boundaries, and the failure of resilient balance and continuity of services for those in need. The information utility required can only grow from this common ground. Common ground is open ground, and it is on the governance of this open ground that we need to focus, as we progress from Information Age to Information Society.

From Local to Global Village

There were advantageous characteristics of the village community of my childhood, which it would be good to see revived and renewed in the global village community of today. A principal aim of Part Three of the book is to propose and show how an ecosystem of health care information can be imagined and created, to meet the needs of the global villager and village, as a utility that realizes and adapts to new benefits achievable in the Information Age and avoids falling into new bear traps. This is a tale of two villages.

I have lived in both these villages. The first was the tiny village of my childhood, which I have already described–let us call it Localton, with its nearby local hill of challenges faced in everyday life there. A mountain of wider national challenges loomed from afar but were only sparingly connected with the local hill that dominated and most affected local lives.

The nineteenth-century pattern of remote and isolated village life of Localton is no more in the English countryside, but is still lived in much of the world. In my great aunts’ village, movement between villages was by horse and cart and Shanks’s pony (on foot), as described in Chapter Five when discussing the arrival of information technology. Night-time reading was by oil or gaslight. Roughly laid out paths made for bumpy rides on early bicycles, arriving from Germany. Discovery and new means of travel and navigation between countries had been arriving slowly within local awareness, over hundreds of years, through conflict and commerce. Mass transport by ship, car and aeroplane, and their enabling and supporting infrastructures and regulation, started slowly and then arrived in waves over a century or so.

The second village is the city village in which I now live, called Fleetville. It is a distinct and now quite prosperous central area of the city of St Albans, having once been a poorer area, housing families working in the factories nearby. I will call it Globalton. It is in some ways quite like the Localton of my childhood–almost all daily needs within walking distance–but very different in its transport connections and links within multiple global virtual communities. It has its local hill of challenge in everyday life–keeping the community centre alive, regulating local car parking–but is immediately connected, through the Internet and other media, with the global mountains of challenge further afield.

A characteristic example of such a village community today, as described in the Sunday Times newspaper yesterday, is central Walthamstow in East London, of interest and memory to me as it is where my father grew up. This kind of village is now described as a ‘twenty-minute neighbourhood’, and comprises:

- Home, children’s play areas, amenity green space, bus stop–within walking distance of five minutes;

- Shops, bakery, butcher, cafes, nursery, pub/restaurant, hairdresser, primary school, village green, elderly day care centre, medical centre, community allotments/orchard–within walking distance of ten minutes;

- Employment opportunities, workshops, shared office spaces, secondary school, gym and swimming pool, sports pitches, large green spaces in woodland–within walking distance of fifteen minutes;

- Multifunctional community centre, business academy, college, bank, post office, place of worship, garden centre–within walking distance of twenty minutes.

It is a work in progress, opposed with scepticism and objection five years ago, and no doubt still beset by teething problems and new troubles. It has been pedestrianized, and cars are owned by only forty-nine percent of households, compared to seventy-seven percent for the whole of the UK. Although quite small, it has proved viable and resilient in fostering new growth, its community strengths providing a foundation for reconstruction and rebirth.

Goodhart discussed the challenge of finding a new balance of status and reward between the ‘anywhere’ and ‘somewhere’ of life today. His anywhere is big and global in scope and application, and his somewhere is small and local in everyday life. Globalton is both a local and a virtual community, and Globalton villagers live double lives. In the everyday, Globalton villagers share and navigate their ‘somewhere’ activities and the challenges of the local hill. In their virtual lives, work and travels, they connect and engage with village communities elsewhere, and with the ‘anywhere’ challenges on global mountains, experienced and framed more widely. In the Covid months, local group exercise and dance classes switched, with impressive dexterity and success, to individual Zoom participation from the home. Much group activity moved online, but its participants are eager to return to local ground.

In Small is Beautiful, Ernst Schumacher (1911–77) used the phrase ‘think globally and act locally’ to bridge responses to the big challenges of the global mountain with local community and business, contributing towards their solution by acting on a small scale on the local hill.15 The phrase is attributed originally to the Scots biologist, town planner and social activist Patrick Geddes (1854–1932). Some very successful charitable endeavours have achieved synthesis of this kind, bridging local lives and wider concern to contribute practically to the solution of problems on the global mountain. The Oxfam and Amnesty International movements have been creative and successful with this approach, although not without their own problems on both the hill and the mountain. The Internet has been a great enabler of local sharing and support in our global village; WhatsApp groups have bubbled into life along many streets.

The reverse mindset, of thinking locally and parochially, generalizing from problems on the local hill to justify and pursue self-interested action wider afield, has also been empowered in Globalton. The information revolution has harmfully enabled and conflated big and global thought and action anywhere with small and local thought and action somewhere. Burglars in Localton tended to think locally and act locally, breaking in to burgle on a small scale, somewhere near home. Scammers in Globalton think globally and act globally, to deceive and rob anywhere in the world. One of our credit cards was scammed recently and, in a matter of days, thirteen thousand pounds of fraud had been charged to it in some twenty places across the country! Social activists also think and act globally on major issues of the day and combine forces through social media to focus and coordinate local action.

Matthew Arnold’s (1822–88) book, Culture and Anarchy, was a mid-nineteenth-century take on conflicts of culture in human society.16 I remember reading at school about his ‘Barbarians’ and ‘Philistines’! A hundred years on, in the late 1950s, Charles P. Snow (1905–80) divided cultures between the sciences and the arts. The Information Age has spread and amplified cultural division.17

Some prophets of change envisage and target a future culture of society characterized by the ‘sweetness and light’ that Jonathan Swift (1667–1745) wrote about at the turn of the eighteenth century: a mature sense of beauty combined with alert and active intelligence! The culture of Globalton is an organic one, growing much faster than the Localton culture of my childhood, within times of Whitehead anarchy. Localton culture was grounded and sceptical. Globalton culture seems more beguiled by and susceptible to the promise of magic bullets; these sometimes get blocked and backfire in their rifle barrels.

In their book on the future of the professions in the Information Society, Richard and Daniel Susskind set out what they admitted was a very wordy and legalistic grand bargain governing future professional relationships with citizens.18 They saw such change as inevitable, with adaptation to the new reality being primarily a challenge of culture, values and expectations. I draw on their ideas in Chapter Eight, in discussion of the shape of health care professions in the future Information Society. A similar challenge faces each global villager, in combining their global thinking and action ‘anywhere’ with local thinking and action ‘somewhere’.

These are much the same challenges that Karl Popper (1902–94) addressed in his book The Open Society and Its Enemies.19 There is enduring conflict. Chapters Eight and Nine develop a vision of purpose, goal, method, team and environment for the creation of a care information utility, in the context of the transition from local to global village. Chapter Eight and a Half describes my experience of the past thirty years in working towards that end.

Instability of the Global Village

What promises to integrate and make whole can lead to instability and fragmentation. The information landscape can become one of isolated power and influence, orchestrated globally from safe, high-up places. And into the intervening gaps and holes, the less powerful and more exploitable can easily fall, be it by default, lack of care, or incautious and foolish intent. The Internet has connected local villagers and village life into a virtual global village community. Between global villages, today, there are heightened inconsistencies and inequalities of health care. And there is heightened awareness of these, and of the global dimensions of the challenges they pose.

Along my songline, the technologies of telephony and broadcasting, and the superseding digital carriers over land, under sea and relayed by satellites, have evolved into high-capacity, superfast broadband. The information highway has channelled rivers of information through every village, flooding out across every plain. Mobile telephony has transformed life on a global scale. It provides a capillary network of seemingly limitless capacity, circulating information pervasively around the world. And low-orbit satellite networks promise yet more. The network is good at delivering information; some is vital and benevolent and some harmful and malevolent. It is poor at clearing up information litter and removing its addictive substances and noxious toxins. It transports secret and criminal content that few know to be there.

Governments were left far behind in adjusting to this revolution. In failing to protect and prepare effectively for new governance, they delegated or abdicated power to eager new information barons of industry, commerce and crime. By default, citizens became clients of global information corporations and monopolies. Governments are now struggling to retrofit vehicle production standards, rules and regulations of the road, and means of navigation, amid information traffic that is fast-moving in all directions. It is a scary and unruly place for citizens to navigate–personal survival favours running for cover!

This revolution has transformed and tested the balance, continuity and governance of services. Human relationships and attitudes are also in rapid transition, challenging belief, culture and values. Facebook is the most wonderful of enablers of social life and the most awesome of Faustian bargains in its misuse and abuse. It is part-motorway and part-car. Unlike these, it has risen virtually unrecognized, hidden in plain sight. Global village citizens and burghers were bewitched by, and welcomed in, what was camouflaged and portrayed as a gift. This gift transformed into a magical power to connect any person with anything. It opened, far and wide, a Pandora’s box of unknowable sequelae. It enabled local eyes to see into the global village and this brought new personal power. Local eyes and ears could be conditioned and manipulated, with image and conspiracy planted from far away, in furtherance of wider and unknown powers.

In the information pandemic that followed, education, research and scholarship, trade and profession all started to operate differently. Cooperation, collaboration and supply lines of trade connected, extended and flattened around the globe. And the politics of the global village polis entered an uncertain, evolving mix of order and chaos. Thomas Friedman wrote The World is Flat and Francis Fukuyama, The End of History, imagining these new bridges to the future and their implications.20

There is not yet a discernible centre or law of the land in this global village. There is not yet politics fit for such a global polis. There is shared intention and cooperation in building the information network, but little power in finding common ground on which to regulate it. Rather, it has become an instrument and battlefield of conflict and interest. The landscape is then left to the exercise of unbridled and arbitrary power. Attempts to shore up existing frameworks of law and regulation have been met with artful circumvention of accountability, leaving outcomes less equitable.

The Industrial Revolution was a seedbed of wealth creation and human emancipation, however imperfectly and however unfairly, as it was of empire. From the shocks and after-shocks of conflict and disease, and progressive social emancipation, a consensus emerged that led towards global institutions of finance, enterprise, health and governance, founded on belief in human rights and strong democratic institutions. They trusted in, and hoped to foster, the better angels of our nature, as Steven Pinker described them.

The Information Age is a comparable leap forward and disruption of the status quo. It is probing the limits of social cohesion and resilience of the global village life it has led to. This is playing out in the wider context of global disruption and inequalities of climate, economy and politics. These perturbations are of such a scale as to defy local resolution and of such a nature as to challenge global action. A global viral pandemic, as much as global climate, transcends boundaries and floats under and over drawbridges. Information for health care is pervasive, both a global and a local utility. It requires an adaptable fusion of global and local architecture, with global and local governance. This is the space that the creation of an information utility will populate.

Lifespan, Lifestyle and Health Care

In the third chapter of On the Origin of Species, Charles Darwin (1809–82) wrote about struggle between species:

It is good thus to try in imagination to give to any one species an advantage over another. Probably in no single instance should we know what to do. This ought to convince us of our ignorance on the mutual relations of all organic beings; a conviction as necessary as it is difficult to acquire […] When we reflect on this struggle, we may console ourselves with the full belief, that […] the vigorous, the healthy, and the happy survive and multiply.21

This was his observation and reasoning about the natural world. Survival and procreation reflect the biology of life and the behaviour and circumstances of living. In human society, we might simplify these under the headings of lifespan and lifestyle.