32. Somax2 and Reinterpreting

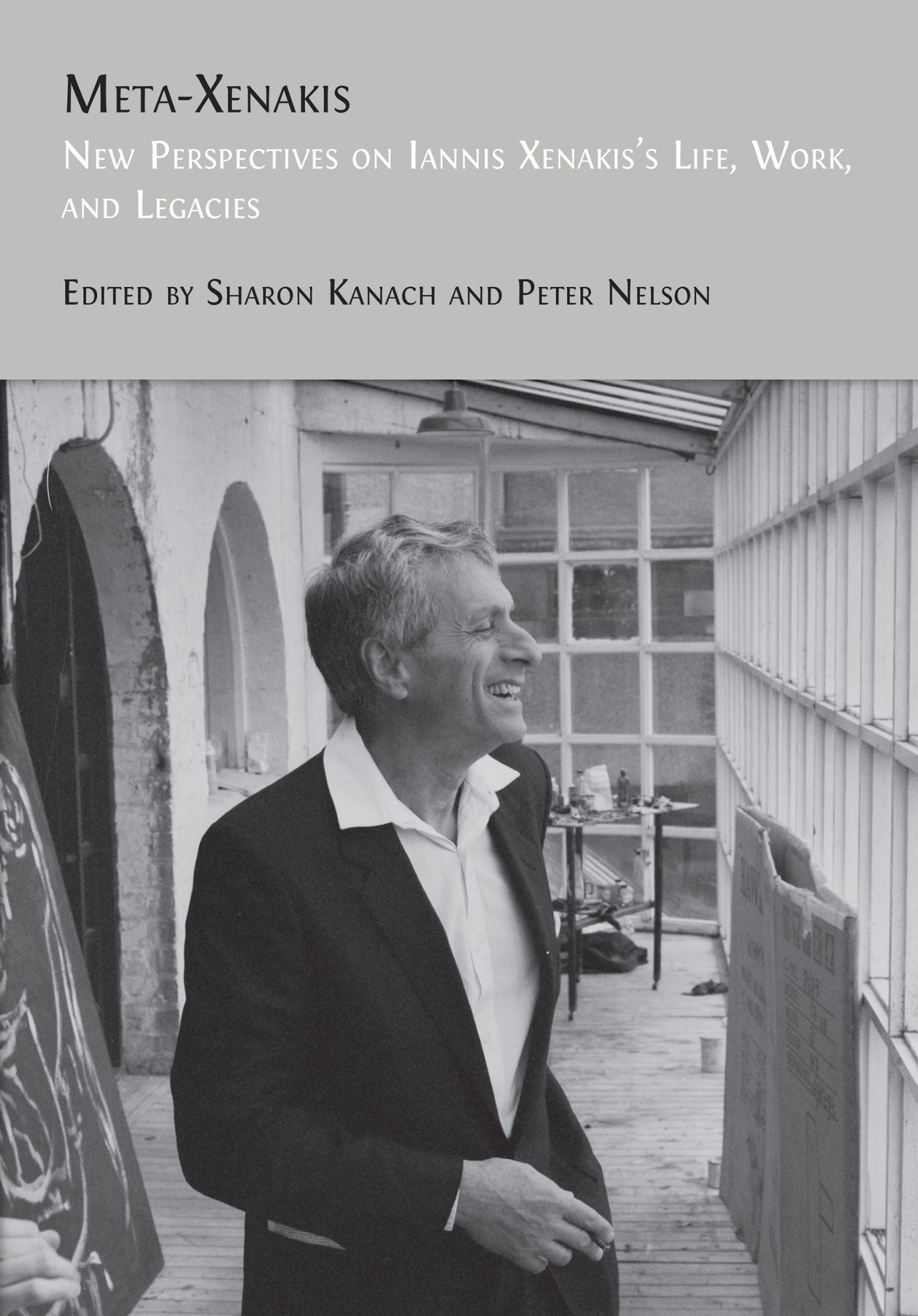

Iannis Xenakis

Mikhail Malt and Benny Sluchin

© 2024 Mikhail Malt and Benny Sluchin, CC BY-NC 4.0 https://doi.org/10.11647/OBP.0390.34

Somax2 Introduction

Somax2 is currently being developed by Joakim Borg, Gérard Assayag, and Marco Fiorini at IRCAM.1 It comes from a long lineage of computer-aided improvisation environments developed by the Représentations Musicales (RepMus) team at IRCAM, based on what we call the “OMax co-improvisation paradigm.” This paradigm rests on a loop between listening, learning, modeling, and generating. The earlier OMax environment has since been refined into several co-creative software environments: ImproteK/Djazz, Dyci2, and Somax itself. The current version is a recent development that incorporates algorithmic improvements to the former Somax version as well as previous work by members of the RepMus team.2

Somax2’s software agents produce stylistically coherent improvisations based on learned musical knowledge while continuously listening and adapting to input from musicians or other agents in real time. The system is trained on any musical material chosen by the user, effectively constructing a generative model (called a “Corpus”) from which it draws its musical knowledge and improvisation skills. Corpora, inputs, and outputs can be MIDI as well as audio, and inputs can be live or streamed from MIDI or audio files. Somax2 is one of the improvisation systems descending from the well-known OMax software, here in a completely new implementation. As such, it shares with its siblings the general loop (listen/learn/model/generate) and uses a form of statistical modeling that builds a highly organized memory structure. The system navigates this structure to produce new musical organizations while keeping stylistic coherence, rather than generating previously unheard sounds as some other machine learning (ML) systems do. Beyond this, Somax2 offers a new degree of versatility: it is highly reactive to musicians’ decisions, and its creative agents can communicate and work together in the same way they respond to musicians. Cognitively inspired interaction strategies and a finely optimized concurrent architecture allow all its units to cooperate smoothly.

Somax2 offers detailed parametric control of its players; it can be played alone as an instrument in its own right or used within a compositional workflow. It can listen to multiple sources, and the user can create entire ensembles of agents, controlling in detail how these agents interconnect and “influence” each other.

Somax2 is conceived as a co-creative partner in the improvisational process: after some minimal tuning, the system can behave in a self-sufficient manner and take part in a variety of improvisation set-ups and even installations.

The following links provide demos and performances:

- Project page: http://repmus.ircam.fr/somax2

- IRCAM Forum Somax2 page: https://forum.ircam.fr/projects/detail/somax-2/

- Somax2 demo: https://vimeo.com/558962251

- Somax2 public performances: https://vimeo.com/showcase/somax2-perfs

- REACH Project and performances featuring AI software: https://vimeo.com/showcase/musicandai

Somax2 in a Nutshell

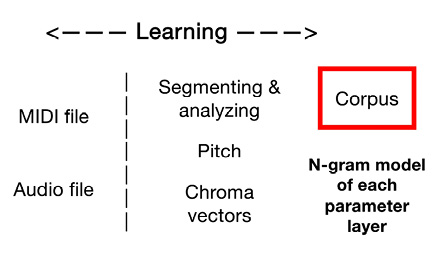

In Somax2, everything begins with the learning process, in which a MIDI or audio file is segmented and each segment is analyzed in terms of pitch and chroma vectors. The result is what we call a “Corpus,” i.e., a set of N-gram models, one for each parameter layer (Figure 32.1).

Fig. 32.1 Somax2 learning step. Figure created by authors.
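The learning step can be illustrated with a minimal Python sketch of “one N-gram model per parameter layer.” It assumes the file has already been segmented into slices of MIDI pitches, labels the pitch layer by the lowest pitch of each slice, and uses binary 12-bin chroma vectors for the harmonic layer. The function names and these labeling choices are hypothetical illustrations, not Somax2’s actual analysis or API.

```python
from collections import Counter, defaultdict
from typing import Dict, Hashable, List, Sequence, Tuple


def build_ngram_layer(labels: Sequence[Hashable], n: int) -> Dict[Tuple[Hashable, ...], Counter]:
    """Count, for every context of n-1 labels, which label follows it."""
    model: Dict[Tuple[Hashable, ...], Counter] = defaultdict(Counter)
    for i in range(len(labels) - n + 1):
        context = tuple(labels[i:i + n - 1])
        model[context][labels[i + n - 1]] += 1
    return dict(model)


def chroma(pitches: Sequence[int]) -> Tuple[int, ...]:
    """Binary 12-bin chroma vector: 1 for each pitch class present in the slice."""
    vector = [0] * 12
    for pitch in pitches:
        vector[pitch % 12] = 1
    return tuple(vector)


def build_corpus(slices: List[List[int]], n: int = 2) -> Dict[str, Dict]:
    """Hypothetical corpus: one N-gram model per parameter layer."""
    pitch_layer = [min(s) for s in slices]      # assumption: label = lowest pitch
    chroma_layer = [chroma(s) for s in slices]  # harmonic layer
    return {
        "pitch": build_ngram_layer(pitch_layer, n),
        "chroma": build_ngram_layer(chroma_layer, n),
    }


# Four MIDI slices alternating C major and D minor triads.
slices = [[60, 64, 67], [62, 65, 69], [60, 64, 67], [62, 65, 69]]
corpus = build_corpus(slices, n=2)
print(corpus["pitch"])  # {(60,): Counter({62: 2}), (62,): Counter({60: 1})}
```

Keeping each layer in its own model means that pitch and harmony can later be matched separately against incoming material.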

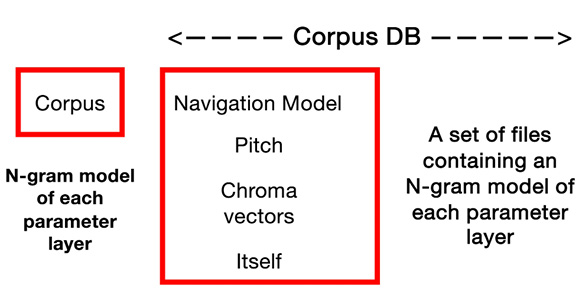

This first learning stage produces a Corpus, which then serves as a navigation model for the system (Figure 32.2).

Fig. 32.2 The corpus as a navigation model. Figure created by authors.
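What “navigation model” means can be sketched as follows, under the simplifying assumption that each corpus event carries a single label: from the current position, the system may either continue to the next event or jump to any other position carrying the same label as that next event. The label index and the `navigation_options` helper are hypothetical, not Somax2 code.

```python
from collections import defaultdict
from typing import Dict, Hashable, List, Optional, Sequence, Tuple


def build_label_index(labels: Sequence[Hashable]) -> Dict[Hashable, List[int]]:
    """Map each label to every corpus position where it occurs."""
    index: Dict[Hashable, List[int]] = defaultdict(list)
    for position, label in enumerate(labels):
        index[label].append(position)
    return dict(index)


def navigation_options(
    labels: Sequence[Hashable], index: Dict[Hashable, List[int]], position: int
) -> Tuple[Optional[int], List[int]]:
    """From `position`, return the linear continuation and the alternative jumps.

    A jump target is any other position with the same label as the linear
    continuation, so the output stays locally coherent with the corpus.
    """
    nxt = position + 1
    if nxt >= len(labels):
        return None, []
    jumps = [p for p in index[labels[nxt]] if p != nxt]
    return nxt, jumps


labels = ["C", "D", "E", "C", "D", "G"]
index = build_label_index(labels)
print(navigation_options(labels, index, 0))  # (1, [4])
```

Jumping from position 0 to position 4 instead of 1, then continuing linearly, yields C D G: a sequence absent from the original, yet assembled from locally matching material.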

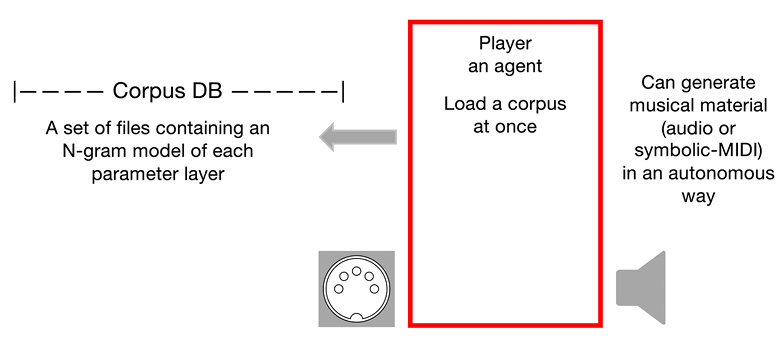

Each Corpus can be used by what we call a “player,” which can autonomously generate musical material (audio or symbolic MIDI) (Figure 32.3).

Fig. 32.3 The agent player bound to a given Corpus. Figure created by authors.
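A player’s autonomous behavior can be sketched as a random walk over the corpus: at each step it either follows the original order or recombines by jumping to another position with the same label. This is a minimal sketch under that assumption; the `generate` function and its `continuity` and `seed` parameters are hypothetical and not taken from Somax2.

```python
import random
from typing import Hashable, List, Sequence


def generate(labels: Sequence[Hashable], steps: int, continuity: float = 0.7, seed: int = 0) -> List[int]:
    """Hypothetical autonomous player: return a path of `steps` corpus positions.

    With probability `continuity` the player continues linearly; otherwise it
    jumps to another position sharing the label of the linear continuation.
    """
    rng = random.Random(seed)
    position = 0
    path = [position]
    for _ in range(steps - 1):
        nxt = (position + 1) % len(labels)  # wrap around at the end of the corpus
        alternatives = [p for p, lab in enumerate(labels) if lab == labels[nxt] and p != nxt]
        if alternatives and rng.random() >= continuity:
            position = rng.choice(alternatives)  # recombine: same label, new context
        else:
            position = nxt  # follow the original order of the corpus
        path.append(position)
    return path


labels = ["C", "D", "E", "C", "D", "G"]
path = generate(labels, steps=8, continuity=0.5, seed=1)
print([labels[p] for p in path])
```

With `continuity=1.0` the player simply replays the corpus; lowering it produces more recombination while every output event still matches the label the original continuation would have had.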

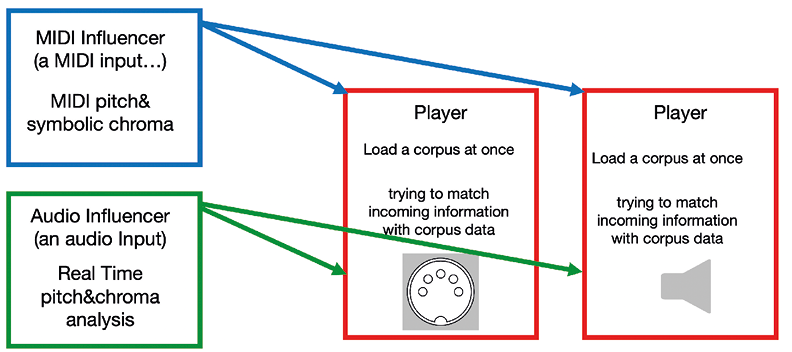

In addition, the behavior of the players (several can run at the same time) can be influenced by a MIDI device or an audio stream (Figure 32.4).

Fig. 32.4 Somax2 players being “influenced” by external audio or MIDI data streams.
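The influence mechanism can be sketched by matching each incoming pitch against the corpus: every position with the same pitch class receives an activation “peak,” and the player moves to the strongest peak, falling back to linear continuation when nothing matches. The pitch-class matching, peak weights, and tie-breaking rule are assumptions for illustration, not a description of Somax2’s internals.

```python
from typing import Dict, Optional, Sequence


def influence_peaks(corpus_pitches: Sequence[int], incoming_pitch: int) -> Dict[int, float]:
    """Place a peak of weight 1.0 at every corpus position whose pitch class matches the input."""
    target = incoming_pitch % 12
    return {i: 1.0 for i, p in enumerate(corpus_pitches) if p % 12 == target}


def select_next(corpus_length: int, peaks: Dict[int, float], current: Optional[int]) -> int:
    """Pick the strongest peak; fall back to linear continuation if nothing matches."""
    linear = 0 if current is None else (current + 1) % corpus_length
    if not peaks:
        return linear
    best = max(peaks.values())
    candidates = sorted(i for i, w in peaks.items() if w == best)
    # Assumed tie-break: prefer the linear continuation, else the earliest candidate.
    return linear if linear in candidates else candidates[0]


corpus_pitches = [60, 62, 64, 65, 67, 72]
peaks = influence_peaks(corpus_pitches, 48)  # a low C played by the musician
print(peaks)  # {0: 1.0, 5: 1.0}
print(select_next(len(corpus_pitches), peaks, current=4))  # 5
```

Preferring the linear continuation among equally strong candidates keeps the output smooth when the influence does not force a jump.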

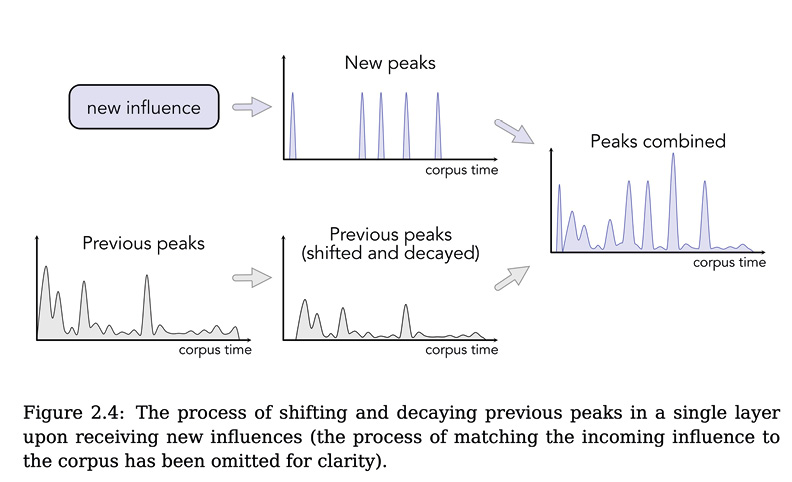

An important aspect of this matching process is the pitch and harmonic memory, which can be adjusted in Somax2. At each step, the player not only tries to match the current incoming information but also takes past information into account. This memory setting is therefore a very important parameter (Figure 32.5).

Fig. 32.5 Pitch and harmonic memory model. Borg, 2021a, p. 8.
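The role of memory can be sketched by letting past matches persist and fade: at each step, earlier peaks move one event forward along the corpus and are attenuated by a decay factor before new peaks are added for the current input. The shift-and-decay rule and the `decay` parameter are assumptions for illustration only; the model Somax2 actually uses is described in Borg’s technical report.

```python
from typing import Dict, Sequence


def update_memory(
    peaks: Dict[int, float], corpus_pitches: Sequence[int], incoming_pitch: int, decay: float = 0.5
) -> Dict[int, float]:
    """One listening step with memory (hypothetical model).

    Existing peaks move one event forward (so they keep following the corpus
    timeline) and are multiplied by `decay`; then fresh peaks of weight 1.0 are
    added where the corpus pitch class matches the new input.
    decay=0.0 means no memory; values close to 1.0 mean a long memory.
    """
    n = len(corpus_pitches)
    updated: Dict[int, float] = {}
    for position, weight in peaks.items():
        shifted = (position + 1) % n
        updated[shifted] = updated.get(shifted, 0.0) + weight * decay
    for position, pitch in enumerate(corpus_pitches):
        if pitch % 12 == incoming_pitch % 12:
            updated[position] = updated.get(position, 0.0) + 1.0
    return updated


corpus_pitches = [60, 62, 64, 67, 62, 65]  # C D E G D F
peaks = update_memory({}, corpus_pitches, 60)     # musician plays C
peaks = update_memory(peaks, corpus_pitches, 62)  # then D
print(peaks)  # {1: 1.5, 4: 1.0}
```

Without memory (`decay=0.0`), positions 1 and 4 would tie at 1.0 because both hold a D; with memory, position 1 wins because in the corpus it is preceded by C, just as in the musician’s input.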

For a more detailed description, we suggest reading Joakim Borg’s excellent internal technical report, “The Somax2 Theoretical Model.”3

References

ASSAYAG, Gerard, BLOCH, Georges, CHEMILLIER, Marc, CONT, Arshia, and DUBNOV, Shlomo (2006), “Omax Brothers: A Dynamic Topology of Agents for Improvisation Learning,” in Jun-Cheng Chen, Wei-Ta Chu, Jin-Hau Kuo et al. (eds.), Proceedings of the 1st ACM Workshop on Audio and Music Computing Multimedia, Santa Barbara, California, p. 125–32.

ASSAYAG, Gerard (2021), “Human-Machine Co-Creativity,” in Bernard Lubat, Gerard Assayag, and Marc Chemillier (eds.), Artisticiel / Cyber-Improvisations, La Rochelle, Phonofaune.

BONNASSE-GAHOT, Laurent (2012), “Prototype de logiciel d’harmonisation/arrangement à la volée,” Somax v0, Internal Report, IRCAM.

BONNASSE-GAHOT, Laurent (2014), “An Update on the SOMax Project,” IRCAM-STMS, Internal Report ANR Project Sample Orchestrator, http://repmus.ircam.fr/_media/dyci2/somax_project_lbg_2014.pdf

BORG, Joakim (2020), Dynamic Classification Models for Human-Machine Improvisation and Composition, Master’s dissertation, IRCAM and Aalborg University Copenhagen, http://repmus.ircam.fr/_media/dyci2/joakim-borg-master-2019.pdf

BORG, Joakim (2021a), “The Somax2 Software Architecture,” Internal Technical Report, IRCAM, http://repmus.ircam.fr/_media/somax/somax-software-architecture.pdf

BORG, Joakim (2021b), “The Somax2 Theoretical Model,” Internal Technical Report, IRCAM, http://repmus.ircam.fr/_media/somax/somax-theoretical-model.pdf

BORG, Joakim, ASSAYAG, Gerard, and MALT, Mikhail (2022), “Somax2, a Reactive Multi-Agent Environment for Co-Improvisation,” in Romain Michon, Laurent Pottier, and Yann Orlarey (eds.), Proceedings of the 19th Sound and Music Computing Conference, Saint-Etienne, Université Jean Monnet, INRIA, and FRAME-CNCM, p. 681–2, https://hal.science/hal-04001271/document

CARSAULT, Tristan (2017), “Automatic Chord Extraction and Musical Structure Prediction Through Semi-Supervised Learning, Application to Human-Computer Improvisation,” Master’s dissertation, IRCAM, UMR STMS 9912, http://repmus.ircam.fr/_media/dyci2/carsault_tristan_rapport_stage.pdf

CARSAULT, Tristan, NIKA, Jérôme, and ESLING, Philippe (2019), “Using Musical Relationships Between Chord Labels in Automatic Chord Extraction Tasks,” in Emilia Gómez, Xiao Hu, Eric Humphrey, and Emmanouil Benetos (eds.), International Society for Music Information Retrieval Conference (ISMIR 2018), Paris, ISMIR, p. 18–25, https://doi.org/10.48550/arXiv.1911.04973

CARSAULT, Tristan (2020), “Introduction of Musical Knowledge and Qualitative Analysis in Chord Extraction and Prediction Tasks with Machine Learning,” doctoral dissertation, Sorbonne Universités, UPMC University of Paris 6, https://theses.hal.science/tel-03414454v2/document

CARSAULT, Tristan, NIKA, Jérôme, ESLING, Philippe, and ASSAYAG, Gerard (2021), “Combining Real-Time Extraction and Prediction of Musical Chord Progressions for Creative Applications,” Electronics, vol. 10, no. 21, p. 2634, https://hal.sorbonne-universite.fr/hal-03474695/document

FELDMAN, Benjamin (2021), “Improving the Latent Harmonic Space of Somax with Variational Autoencoders,” Master’s dissertation, Centrale Supelec, IRCAM, UMR STMS 9912, http://repmus.ircam.fr/merci/publications

LÉVY, Benjamin, BLOCH, Georges, and ASSAYAG, Gérard (2012), “OMaxist Dialectics: Capturing, Visualizing and Expanding Improvisations,” Proceedings of the International Conference on New Interfaces for Musical Expression, Ann Arbor, Michigan, p. 137–40, https://doi.org/10.5281/zenodo.1178327

LÉVY, Benjamin (2013), “Principes et architectures pour un système interactif et agnostique dédié à l’improvisation musicale,” doctoral dissertation, Sorbonne Universités, UPMC University of Paris 6, https://benjaminnlevy.net/files/BLevy-PhD-v1.0-C.pdf

NIKA, Jérôme, DÉGUERNEL, Ken, CHEMLA-ROMEU-SANTOS, Axel, VINCENT, Emmanuel, and ASSAYAG, Gérard (2017), “DYCI2 Agents: Merging the ‘Free,’ ‘Reactive,’ and ‘Scenario-based’ Music Generation Paradigms,” International Computer Music Conference, October, Shanghai, China, https://hal.science/hal-01583089

SLUCHIN, Benny, CONFORTI, Simone, and MALT, Mikhail (2022), “Somax2, a Reactive Multi-Agent Environment for Co-Improvisation,” Performance at Sound and Music Computing Conference, 7 June, Saint-Etienne, France.

XENAKIS, Iannis (1986), Keren (score EAS 18450), Paris, Salabert.

XENAKIS, Iannis (1989), Keren, recording in Benny Sluchin and Pierre-Laurent Aimard (1989), Le Trombone Contemporain, Paris, Adda, 581087.

1 Joakim Borg, https://www.ircam.fr/person/joakim-borg; Gérard Assayag, https://www.ircam.fr/person/gerard-assayag/; Marco Fiorini, https://forum.ircam.fr/profile/fiorini/. The development of Somax2 is part of the project REACH, supported by the European Research Council under the Horizon 2020 programme (Grant ERC-2019-ADG #883313), and of the project MERCI, supported by the Agence nationale de la Recherche (Grant ANR-19-CE33-0010).

2 See Borg, 2020, 2021a, 2021b; Assayag, 2021; Bonnasse-Gahot, 2012; Carsault, 2017, 2020; Carsault et al., 2021.

3 Borg, 2021b.