8. Pictured hate:

A visual discourse analysis of derogatory memes on Telegram

Lisa Bogerts, Wyn Brodersen, Maik Fielitz, and Pablo Jost

©2025 Lisa Bogerts et al., CC BY 4.0 https://doi.org/10.11647/OBP.0447.08

Abstract

Memes have become a propaganda weapon of far-right groups. While several studies highlight the strategic use of memes in far-right contexts, there is little empirical research on which groups these memes target, and how. As the visual stigmatisation of outgroups is a central means of communicating far-right worldviews, this study examines the visual propaganda of far-right and conspiratorial actors from both a quantitative and a qualitative perspective. To do this, we analysed memetic communication using computational and interpretive tools selected according to visual discourse methodology. We collected our material from 1,675 alternative right-wing German-speaking channels on the messenger service Telegram, which we categorised into different sub-milieus and monitored continuously. Our findings suggest significant differences in the ways certain groups are targeted, as well as a tendency to highlight the trigger points of current polarised public debates.

Keywords: memes, Germany, Telegram, far-right, conspiracy theories

Introduction

Digitalisation has led to the emergence of new formats and dissemination strategies for far-right politics, particularly aimed at younger generations. With the advent of social media and the potential for building a large online audience, far-right actors have adopted communication tactics that can go viral online. These include a shift towards visual and audio-visual propaganda, as well as the targeting of online-savvy milieus that congregate to attack individuals and marginalised groups (Askanius 2021a, Thorleifsson 2021). Far-right terrorists have also been recruited from these converging online milieus. Before, during and after their killing sprees, several far-right terrorists publicly referred to meme cultures and encouraged their audiences to produce memes glorifying the perpetrators’ violence.

Memes have become an effective tool of far-right online propaganda, as well as a common way of expressing emotions and political ideas. In fact, politics, social relations, and public entertainment are hardly imaginable today without memes (Mortensen and Neumayer 2021). As a pervasive digital phenomenon, memes combine political messages with (moving) images from pop or everyday culture. In extremist contexts, they have the potential both to radicalise and to mainstream far-right ideas. On the one hand, they make extremist ideas mainstream by appealing to popular communication habits (Schmid 2023). On the other hand, they may have a radicalising effect on consumers, as the massive spread of hatred may contribute to a turn towards political violence (Crawford and Keen 2020).

Because they are semiotically more open than pure text, image-based memes can circumvent both manual and algorithmic content moderation. In fact, memes disseminated by notorious actors often only imply extremist messages while refraining from openly expressed extremism (Bogerts and Fielitz 2019). In light of this, research has examined cross-platform circulation (Zannettou et al. 2018), strategic mainstreaming (Greene 2019), especially through humour and irony (McSwiney et al. 2023), and the aesthetic features of far-right memes (Bogerts and Fielitz 2023).

However, even though we know a lot about the strategic use of memes, few studies show empirically how groups such as women, queer persons, or Jews are attacked by derogatory memes, and even fewer do so over a longer period. As the (visual) stigmatisation of outgroups is a central vehicle for communicating far-right worldviews (Winter 2019), this study scrutinises the visual propaganda of far-right and conspiracist actors using computational methods and interprets selected images using visual discourse analysis (Bogerts 2022). We gathered our material from 1,675 far-right and conspiracist German-speaking channels on the messenger service Telegram.

Our findings indicate significant differences in the ways certain groups are caricatured through diverging visual elements, aesthetic styles and rhetorical means. Furthermore, we found a tendency to emphasise the trigger points of current polarised public debates. To explain how we reached these conclusions, we begin with the state of research on far-right memes in digital communication. Next, we present our methodology and the quantitative results. We then take a closer look at the narratives, elements, and persuasion strategies of misogynistic, trans-hostile and antisemitic memes and, finally, examine which groups of derogatory memes are disseminated most widely. By comparing different forms of visual discrimination, we can better understand how different memes contribute to spreading ideologies of inequality (a central element of far-right politics) from below.

Far-right (and) hate memes in digital communication

With the proliferation of audiovisual platforms, memetic content has become a central element of everyday communication. The concept was originally defined broadly and stems from evolutionary biology. The term “meme” goes back to Richard Dawkins (2006) and is etymologically composed of two parts: mimesis, for imitation, and gene, for genetics. Like a gene, a meme spreads in the “meme pool” (Dawkins 2006: 192), but unlike genes, memes do not reproduce; rather, they infect, like a virus. When a meme goes viral, it is constantly imitated, but through mutation it adapts to new contexts and forms new variants (Dawkins 2006). Dawkins’s general understanding of a meme as a spreading idea, which Richard Brodie (2009) describes as a virus of the mind, therefore cannot be limited to material or digital entities.

In digital communication, a meme is usually understood as an image-text combination in which text is layered on top of an existing image (the macro). Since both the text and the macro contain references to other memes or cultural phenomena, memes are characterised by “complex reference structures” (Nowotny and Reidy 2022: 33). They fall into two categories: on the one hand, memes such as a successful video that are shared unchanged as a trend; on the other hand, memes that become known only through changes in form and content (Marwick 2013). As this research is interested in the latter and is based on large datasets prepared for automated analysis, we chose a minimal definition of memes as image-text combinations shared for the purpose of broad diffusion (see also Schmid et al. 2023). Screenshots of text messages, thumbnails, statistics, charts, stock photos, product advertisements, and photographs without text were excluded from the analysis.

Understood as the “intentional production and dissemination of ‘a group of digital objects’ [...] transformed by the transmission of many users through the Internet” (Shifman 2014: 41), the online meme encompasses a variety of content and format types. Memes are used to convey the idiosyncrasies of everyday life, which often defy verbal expression (von Gehlen 2020). They reflect the prevailing zeitgeist of simplifying the complexities of the world into a format that can be quickly consumed. They are also an effective means of attracting attention. It is therefore not surprising that memes are also used strategically to achieve political goals. The so-called ‘meme wars’ of the US alt-right, which erupted around the first election of Donald Trump in 2016, are a case in point (Dafaure 2020, Donovan et al. 2022).

Since then, memes have served as a means of disseminating extremist ideas to the masses, often in ways that are both timely and pop-cultural. In this context, memes convey far-right messages in a seemingly innocuous way, creating their own unique viewing habits and dynamics. The use of humour and irony allows extreme ideas to be expressed in a deliberately ambiguous way (Askanius 2021, McSwiney et al. 2021). They are semiotically open as they communicate on different levels and address different audiences. This means that the messages conveyed in a meme may never be fully understood by recipients, as the true origin of memes is often unclear. Elements of far-right ideology can thus circulate freely, even if they are shared by organisations with different agendas.

Unlike text, image-based memes can be grasped in a matter of seconds. Through repeated consumption, they appeal to both affect and cognition (Huntington 2015). Creating and understanding them requires subcultural knowledge of codes and aesthetic composition, as well as an understanding of the factors that contribute to the virality of online material (Grundlingh 2018). These skills are disseminated and acquired in specific online forums, such as 4chan, which are notorious for generating some of the most popular internet trends while also facilitating extremist communication (Philipps 2015). Consequently, despite the anonymity that memes offer their creators, memes can draw on shared symbols, aesthetics, and modes of communication (Beyer 2014).

Many memes, not only in extremist contexts, reflect a rough net-cultural atmosphere and use humour at the expense of minority groups (Beran 2019). Stereotypical characters are combined with depictions of ingroup superiority to convey derogatory messages, and a single meme may combine discriminatory messages against several groups. Concerningly, research on intergroup conflict suggests that such group degradation can be effective. According to the concept of group-focused enmity, diverse groups are cumulatively degraded on the basis of allegedly immutable characteristics in order to justify ideologies of inequality (Zick et al. 2009). However, the persuasive power of memes derives not only from ideological indoctrination but also from sophisticated aesthetics, creative in-jokes and the potential for virality (Miller-Idriss 2020).

Methodology

To investigate the cross-phenomenal patterns of hate memes, we used a combination of computational and qualitative methods. We selected a dataset of 4,584 public German-speaking channels and groups on Telegram, which have shared around 8.5 million images since 2021.1 These channels and groups form a network of monitored Telegram channels, connected to one another through messages forwarded via public channels. Because their orientations vary, we further classified these channels in order to analyse and categorise their ideological orientation and their shifts in discursive positioning.

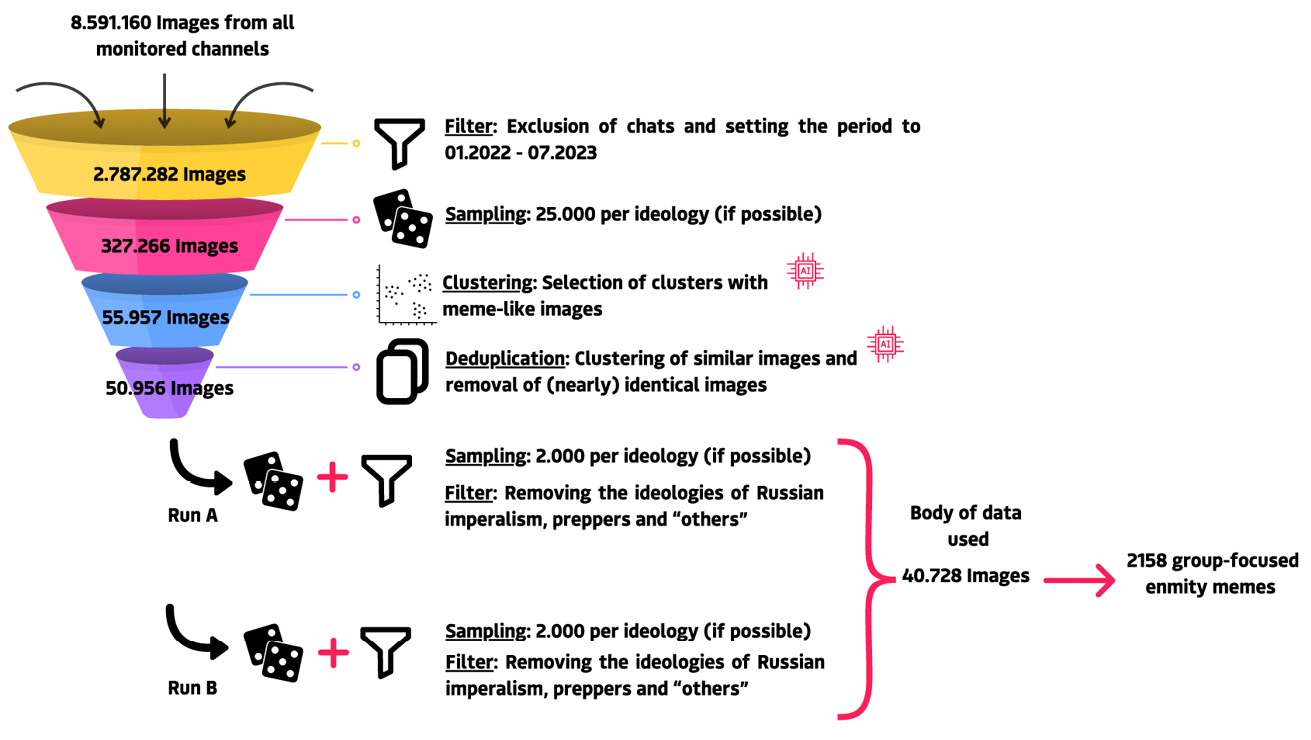

We were interested in text-image combinations that potentially discriminate against one or more of the following groups: women, the LGBTQ community, Muslims, Jews, and people of colour in general. To generate a diverse dataset while minimising pandemic-related content, the time period was limited to 1 January 2022 to 30 June 2023. From the 2,787,282 images remaining in this period, a random sample of 25,000 images was selected, equally distributed across the diverse sub-milieus identified by the in-house monitoring of the Federal Association for Countering Online Hate.2
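The chapter does not say how the equal distribution across sub-milieus was implemented. A minimal sketch of one common approach, stratified random sampling with an equal quota per sub-milieu, could look as follows. The function name, the `(image_id, sub_milieu)` input format, the fixed seed and the rule of taking all images from a sub-milieu that is smaller than its quota are our assumptions, not the authors' procedure.

```python
import random
from collections import defaultdict


def stratified_sample(images, total, seed=0):
    """Draw about `total` images with an equal quota per sub-milieu.

    Hypothetical helper: `images` is a list of (image_id, sub_milieu) pairs.
    Each sub-milieu contributes total // n_groups images, or all of its images
    if it has fewer than that (an assumption; the chapter does not say).
    """
    by_group = defaultdict(list)
    for image_id, group in images:
        by_group[group].append(image_id)

    quota = total // len(by_group)
    rng = random.Random(seed)  # fixed seed so the sample is reproducible
    sample = []
    for group in sorted(by_group):  # sorted order keeps results deterministic
        ids = by_group[group]
        for image_id in rng.sample(ids, min(quota, len(ids))):
            sample.append((image_id, group))
    return sample


# Toy usage with three sub-milieus of very different sizes.
images = (
    [(f"a{i}", "far-right") for i in range(100)]
    + [(f"b{i}", "QAnon") for i in range(30)]
    + [(f"c{i}", "esoteric") for i in range(5)]
)
sample = stratified_sample(images, total=60)
```

With a fixed quota per stratum, small sub-milieus are not swamped by large ones; the trade-off is that the pooled sample no longer mirrors the overall posting volume of each sub-milieu.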

To increase the likelihood of selecting files containing both text and images, the 327,266 remaining images were filtered using image embeddings from OpenAI’s CLIP model (version clip-ViT-B-32), which is trained on image-text pairs and embeds images and text in a shared space. This automated step reduced the dataset by 82.8% while minimising the risk of excluding potentially interesting memes. Next, a random sample of 2,000 images per subset was selected, and nearly identical images were eliminated by deduplication based on CLIP embeddings, cosine similarity and a high similarity threshold. This resulted in a final corpus of 40,728 images, which were used for manual annotation.

Fig. 8.1 Visualisation of the multi-stage sampling process
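The filtering and deduplication steps described above name the model (clip-ViT-B-32), cosine similarity and "a high threshold", but not the exact filtering rule, prompts or threshold value. The sketch below illustrates one plausible reading under those gaps: a zero-shot filter that keeps an image if its embedding is closer to a text prompt describing a meme than to one describing a plain photo, followed by greedy near-duplicate removal. The prompts, the 0.95 threshold and all function names are hypothetical. The embeddings are assumed to be precomputed, for example with the sentence-transformers library, whose `SentenceTransformer("clip-ViT-B-32")` model can encode both images and strings; plain Python lists keep the example self-contained.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def keep_text_images(image_embs, meme_prompt_emb, photo_prompt_emb):
    """Zero-shot filter (hypothetical rule): keep the indices of images whose
    embedding is closer to the 'meme with text' prompt than to the
    'photo without text' prompt."""
    return [
        i
        for i, emb in enumerate(image_embs)
        if cosine(emb, meme_prompt_emb) > cosine(emb, photo_prompt_emb)
    ]


def deduplicate(image_embs, threshold=0.95):
    """Greedy near-duplicate removal: keep an image only if its cosine
    similarity to every image kept so far is below `threshold`."""
    kept = []
    for i, emb in enumerate(image_embs):
        if all(cosine(emb, image_embs[j]) < threshold for j in kept):
            kept.append(i)
    return kept


# Toy 2-D "embeddings"; real CLIP ViT-B/32 vectors have 512 dimensions.
meme_prompt = [1.0, 0.0]   # stands in for e.g. "a meme with text on an image"
photo_prompt = [0.0, 1.0]  # stands in for e.g. "a photo without any text"
embs = [[0.9, 0.1], [0.2, 0.8], [0.91, 0.11], [0.6, 0.4]]

candidates = keep_text_images(embs, meme_prompt, photo_prompt)  # [0, 2, 3]
# [0, 2]: original image 2 is dropped as a near-duplicate of image 0
unique = deduplicate([embs[i] for i in candidates])
```

The greedy pass is quadratic in the number of images, which stays manageable for samples of a few thousand per subset; it also depends on processing order, since the first of two near-duplicates is the one that is kept.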

In the first step (see description below), the images were annotated by a group of students who had completed a three-stage training programme to sensitise them to the content. The research team was involved throughout the annotation process, which comprised several complex and nuanced steps to ensure thorough and accurate classification.

The material was analysed using a visual discourse analysis approach. Following Gillian Rose (2016: 187), we understand “visual discourse to be a set of visual statements or narratives that structures the way we think about the world and how we act accordingly” (Bogerts 2022: 40). For our analysis, it is particularly important which social groups are made visible, how often they are depicted and how they are represented. We therefore combined a quantitative content analysis (i.e. counting the visual elements; Bell 2004) with the qualitative identification of narrative structures and persuasion strategies, which also takes into account the image-text relationship that characterises memes as defined in this chapter. To delve deeper into the visual terrain of far-right memes, we proceeded in five steps.

In the first step, the material was sorted according to five pre-defined categories of group-related hate [1] (antisemitism, misogyny, LGBTQ hostility, hostility towards Muslims, racism) and one category for other affected groups. Each image was annotated three times, and an image was assigned to a category only if at least two annotations concurred; for example, an image entered the category “racism” only if at least two annotators had labelled it as conveying racism. To achieve consistent annotation, the research team agreed on definitions of what each form of group-related degradation entails, informed by the research literature on, for example, forms of antisemitism and misogyny. Multiple classifications of one image were possible and received special attention due to the intersectionality of the phenomena.
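The two-out-of-three rule can be stated compactly in code. The sketch below is illustrative only: the function name and the representation of each annotator's judgement as a set of category labels are our assumptions, while the threshold of two concurring annotations and the possibility of multiple categories per image come from the procedure described above.

```python
from collections import Counter


def consensus_labels(annotations, min_agree=2):
    """Return every category chosen by at least `min_agree` annotators.

    `annotations` holds one collection of category labels per annotator
    (three per image in the procedure described above). Because labels are
    counted independently, an image can end up in several categories.
    """
    counts = Counter(
        label for labels in annotations for label in set(labels)  # set(): count each annotator once
    )
    return {label for label, n in counts.items() if n >= min_agree}


# Two of three annotators see racism, two see hostility towards Muslims.
image_a = [{"racism"}, {"racism", "hostility towards Muslims"}, {"hostility towards Muslims"}]
# No category reaches two votes, so the image is not assigned to any category.
image_b = [{"misogyny"}, {"racism"}, set()]

labels_a = consensus_labels(image_a)  # {"racism", "hostility towards Muslims"}
labels_b = consensus_labels(image_b)  # set()
```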

Secondly, the narratives [2] of the memes were annotated to understand the degrading arguments made by the images in the respective categories. These narratives were derived inductively from the material and specified with the help of the research literature on group-related hate. In other words, the research team went through numerous memes from each group-related category and identified recurring themes, ‘arguments’, and stories. For instance, it became obvious that many racist memes portrayed racialised people as “criminal”, as a “threat to public health” or as a threat to the alleged “purity of an imaginary German völkisch community”. After deriving several such key narratives, we tested whether they were exhaustive and as unambiguous as possible, and revised them when necessary (Bell 2004: 15–16). In this step, memes that had been assigned to the wrong category in the first step were reassigned to the correct one.

To go beyond the tendency to interpret memes by their text elements and to gain insights into visual communication, the visual elements [3] of the memes were annotated following classic content analysis (Bell 2004). Several types of visual elements were distinguished (people in general, specific celebrities, objects, nature, and symbols), each containing 5–11 different elements (Bogerts 2022: 42). As in the previous step, the research team went through the material with “fresh eyes” (Rose 2016: 205) in order to see what is usually overlooked when images are interpreted superficially and subconsciously. For instance, the category “symbols” contained annotations such as “German flag” or “other flags”, while the category “objects” included “weapons” or “money”.

Persuasive meme strategies also work with different rhetorical means [4]. Depending on which feeling the meme producer aims to evoke in the consumer to make the message convincing, they might choose a certain form of “argumentation”. As humour is ubiquitous in memes, we went through the material to test which memes were intended to be humorous and which use other means of persuasion. As a result, in this step, we annotated the following rhetorical means: humour, outrage (about an alleged behaviour of the targeted group), open threat of violence against the marginalised group, ingroup victimhood/reversal of victim and offender, and ingroup superiority or pride. We were already familiar with the latter categories from a previous study (Bogerts and Fielitz 2019), where we had observed ingroup “victimhood”, e.g. among white Germans who feel disadvantaged by refugees receiving social security benefits in Germany, and ingroup superiority, e.g. among white Germans who expressed feeling (racially) superior to racialised (non-white) people.3

Following this, the aesthetic styles [5] of the images were annotated. In doing so, the researchers paid further attention to the visual characteristics of memes that might influence our interpretation and classification subconsciously. We inductively derived from the material several aesthetic styles that meme producers employed to communicate their messages. This procedure builds on our previous study on far-right memes (Bogerts and Fielitz 2019) where we had identified a limited set of typical aesthetics that seem to make a meme attractive or persuasive in the eye of its producer, depending on the content of the message. Recognising some of these aesthetics and identifying new ones, we categorised all memes as modern photography, historical imagery (photography, painting), comic/cartoon, advertisement/fake advertisement, pop culture reference, statistics/diagrams, screenshots, chat aesthetics (emojis, etc.), or collage.

Lastly, a regression analysis [6] was conducted to examine which groups of memes went viral in the Telegram sphere. Virality, in this context, was measured by the number of times a message was forwarded. The analysis is based on 2,158 messages containing hate memes. For comparison, a random sample of 6,474 messages from 322 channels where memes were also shared was used as a reference dataset, resulting in a combined total of 8,632 messages. Two models were developed. The first model used the distinction between messages with and without hate memes as the independent variable and was estimated on the entire dataset. The second model focused on the meme-specific dataset to assess the influence of the hate categories, which were treated as independent variables, on virality. In both models, the number of channel subscribers was included as a control variable to mitigate potential bias from channels with disproportionately high reach.
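The chapter states only that negative binomial regressions (see the caption of Fig. 8.10) were fitted to forward counts, with subscriber numbers as a control. A standard specification consistent with that description is written out below for orientation. The NB2 variance function, the log transformation of subscriber counts and the coding of each hate category as a 0/1 indicator are our assumptions, not details reported by the authors.

$$
y_i \sim \operatorname{NegBin}(\mu_i, \alpha), \qquad \operatorname{E}[y_i] = \mu_i, \qquad \operatorname{Var}[y_i] = \mu_i + \alpha\,\mu_i^{2}
$$

Model 1 (all 8,632 messages):

$$
\log \mu_i = \beta_0 + \beta_1\,\mathrm{HateMeme}_i + \gamma \log(\mathrm{Subscribers}_i)
$$

Model 2 (the 2,158 hate-meme messages, with categories $k = 1, \dots, K$):

$$
\log \mu_i = \beta_0 + \sum_{k=1}^{K} \beta_k\,\mathrm{Category}_{ik} + \gamma \log(\mathrm{Subscribers}_i)
$$

Here $y_i$ is the number of forwards of message $i$, $\mathrm{HateMeme}_i$ equals 1 for a message containing a hate meme and 0 otherwise, and $\mathrm{Category}_{ik}$ equals 1 if the meme was assigned to category $k$. Because the link is logarithmic, $e^{\beta}$ reads as an incidence rate ratio: holding subscribers constant, a hypothetical $e^{\beta_1} = 1.2$ would mean about 20% more expected forwards, and a value below 1 would mean fewer.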

Results

In examining our data, we focus on four dimensions. Firstly, we present the statistical frequencies of memes in different sub-milieus, breaking down the data to quantify which of the pre-defined groups were targeted most. We then move to a more fine-grained qualitative examination of the narrative composition of misogynistic memes, the most prevalent category, to better understand the versatility of memes within a single category of group-focused enmity. In a third step, we illustrate our approach to element coding and visual rhetorical strategies using the cases of LGBTQ hostility and antisemitism. Finally, we measure the virality of the memes in our dataset using regression analysis. Our aim was to find out whether hate memes spread more widely than other formats, and whether rates of spread differ between the categories of group-focused enmity studied.

Quantitative analysis

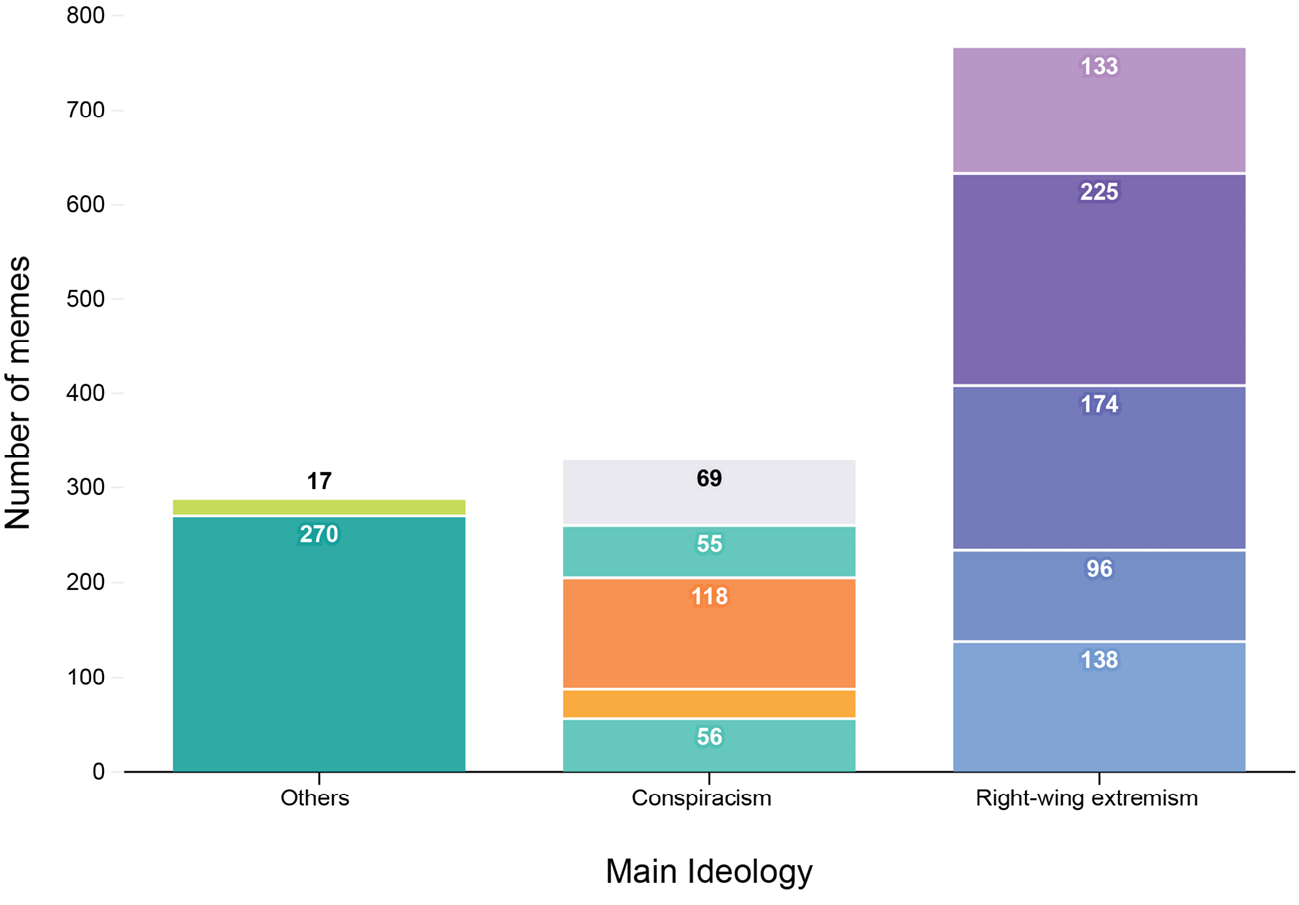

Fig. 8.2 Meme prevalence divided into ideological sub-milieus (n = 2,158)

In the quantitative part of the analysis, we find significant differences in the use of memes by different anti-democratic sub-milieus and in the groups they target. Overall, 5.3% (2,158) of the memes in our sample were annotated and interpreted as derogatory towards our predefined groups. At first glance, this seems like a small share, given that Telegram has been described as a hotbed of extremist communication, especially in Germany (Buehling and Heft 2023, Jost and Dogruel 2023). The use of such memes varies considerably: far-right actors (766) were found to share hate memes more often than those in the conspiratorial milieu (287) or other channels (287). This is not necessarily surprising, given the strategic use of memes in far-right contexts.

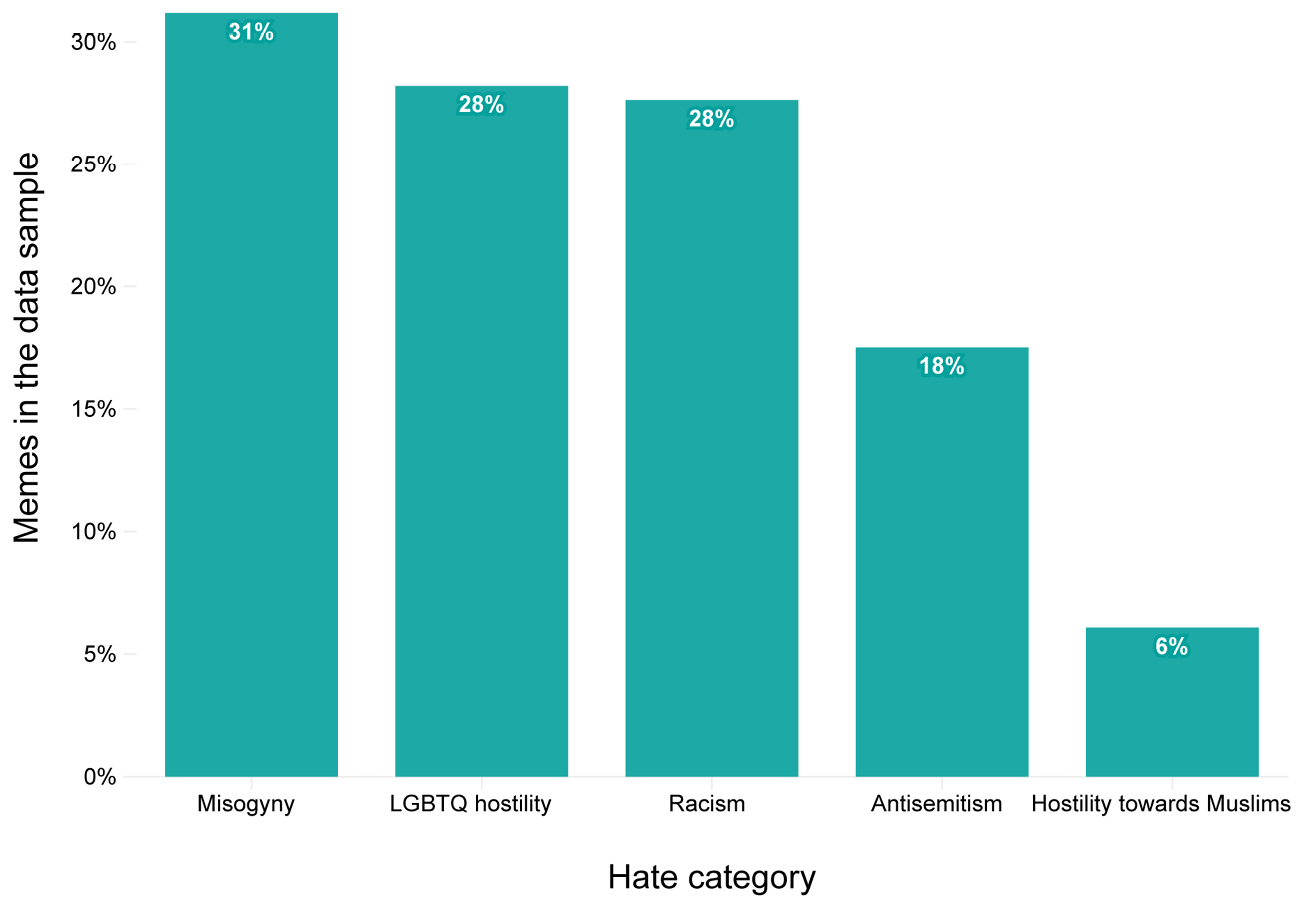

This is also reflected in the results of the annotation process in general. When analysing a representative sample, the largest share of hate memes can be categorised as misogynistic: in total, 31% of the derogatory memes can be read or interpreted as misogynistic. Racist references and LGBTQ hostility were also common, each present in 28% of the images. Antisemitic content made up 18% of the hate memes analysed, while hostility towards Muslims was the smallest category at 6%. Notably, 9% of the memes showed intersections of several hate categories. Our findings highlight the frequent overlap between misogyny and LGBTQ hostility, as well as between racism and hostility towards Muslims.
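Because a meme can carry several labels, per-category shares of this kind need not sum to 100%. The toy example below, with invented labels rather than the study's data and a hypothetical function name, shows how the share per category and the share of intersectional memes can be computed when each hate meme is represented as a set of consensus labels.

```python
def category_shares(memes):
    """Share of hate memes per category, plus the share of memes that carry
    more than one category (intersections).

    `memes` is a list of label sets, one per hate meme. Each category share
    uses the number of memes as its denominator, so shares can add up to
    more than 1 when memes carry several labels.
    """
    n = len(memes)
    categories = {label for labels in memes for label in labels}
    shares = {c: sum(c in labels for labels in memes) / n for c in sorted(categories)}
    intersectional = sum(len(labels) > 1 for labels in memes) / n
    return shares, intersectional


# Invented toy data: four memes, one of them both misogynistic and LGBTQ-hostile.
memes = [
    {"misogyny"},
    {"misogyny", "LGBTQ hostility"},
    {"racism"},
    {"antisemitism"},
]
shares, intersectional = category_shares(memes)
# shares["misogyny"] == 0.5, intersectional == 0.25, sum(shares.values()) == 1.25
```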

Fig. 8.3 Relative frequency of hate categories

A more detailed picture emerges when looking at which ideological actors discriminate against which marginalised social groups. The channels of QAnon supporters are particularly noteworthy: within these channels, almost one in three (32%) hate memes can be interpreted as antisemitic, and hate memes from these channels account for 19% of all antisemitic memes in the dataset. Similarly, in esoteric channels, 39% of hate memes can be interpreted as racist, accounting for 18% of all racist memes in the dataset. The far-right populist channels are striking, as they degrade women the most: almost half (49%) of the hate memes shared there can be classified as misogynistic, which accounts for more than a quarter (28%) of all misogynistic memes in the entire dataset.
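The paragraph above reports two different percentages for the same cell of a milieu-by-category table: the share of a milieu's hate memes that fall into a category (e.g. 32% of hate memes in QAnon channels are antisemitic) and the share of all memes in that category that come from the milieu (e.g. 19% of all antisemitic memes). The sketch below, with a hypothetical function name and invented toy data, makes the two denominators explicit.

```python
def cell_shares(memes, milieu, category):
    """Return (within_milieu, of_category) for one milieu-category cell.

    `memes` is a list of (milieu, label_set) pairs, one per hate meme.
    within_milieu: cell count / number of hate memes in `milieu`.
    of_category:   cell count / number of hate memes labelled `category`.
    """
    in_milieu = [labels for m, labels in memes if m == milieu]
    in_category = [m for m, labels in memes if category in labels]
    cell = sum(category in labels for labels in in_milieu)
    return cell / len(in_milieu), cell / len(in_category)


# Invented toy data, not the study's figures.
memes = [
    ("QAnon", {"antisemitism"}),
    ("QAnon", {"misogyny"}),
    ("far-right populist", {"antisemitism"}),
    ("far-right populist", {"antisemitism"}),
    ("far-right populist", {"misogyny"}),
]
within, of_category = cell_shares(memes, "QAnon", "antisemitism")
# within == 0.5 (1 of 2 QAnon memes); of_category == 1/3 (1 of 3 antisemitic memes)
```

A milieu can thus dominate its own output in a category while still contributing only a minority of that category overall, as the QAnon figures above illustrate.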

The narrative composition of misogynistic memes

Fig. 8.4 Pie chart of narratives identified as misogynistic, segmented by percentage

Annotating the underlying narrative structures yielded deeper insights into the forms of hate. In the context of misogyny, it shows that subtler forms of degradation, often expressed as crude chauvinistic humour, are more dominant than open hate. Most misogynistic memes draw on common gender stereotypes about men and women (21%), depict women as sexual objects (17.7%), or portray them as stupid (16.2%). Although these forms of representation dominate the dataset, we also identified more explicit forms of misogyny. For example, 5.5% of misogynistic memes trivialise (physical) violence against women and 6% deny women’s self-determination.

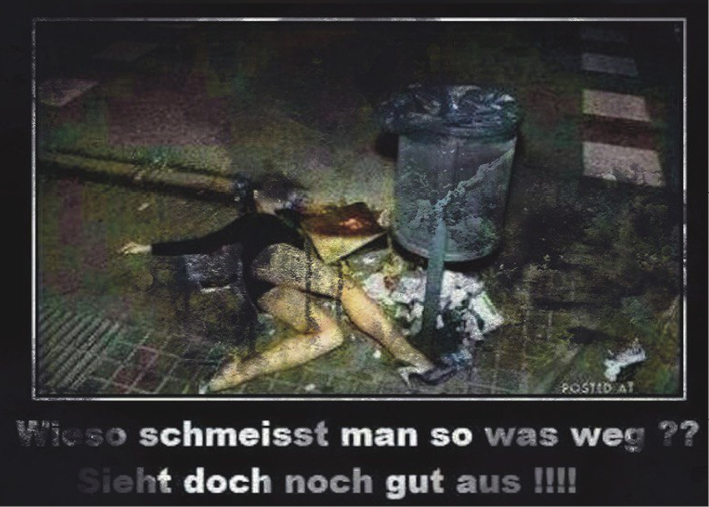

Figs. 8.5, 8.6 and 8.7 Misogynistic memes depicting women as sexual objects

In such memes, body features are overtly sexualised, or women are presented as freely available sexual fantasies to a viewer who is apparently assumed to be a heterosexual man. For example, one meme shows a young woman with bare legs lying next to a rubbish bin in the street. The text reads: “Why would you throw something like that away? It still looks good!!!!”4 (Fig. 8.5). Another meme shows a young woman opening the door of a flat from the inside. She is naked below the waist and waves an imaginary visitor away: “Gas bill? Can you please come later, I’m just paying the electricity!” (Fig. 8.6). In addition to portraying the woman as a sex object, this meme conveys a message of denied self-determination (6%) by implying that women have no money of their own and can only ‘pay’ with sexual services. This example also shows that memes can convey a misogynistic message even when it is not their main message, namely as a side effect that is meant to entertain the viewer. This meme, for instance, seems mainly to allude to the rising gas and electricity prices of 2022 and the indignation felt by many consumers in Germany.

Even without explicit nudity, memes can degrade women by treating them as sex objects, for example when beautiful women are oversexualised and women’s bodies that deviate from the norm are devalued. One meme, for instance, juxtaposes the famous film scene of a laughing Marilyn Monroe, her dress caught by the wind and her legs exposed, with Green Party politician Ricarda Lang, who is also wearing a dress. The caption reads: “Let’s hope it stays windless”. Although this motif of devaluation based on appearance is less common (16%) than that of oversexualisation, it conveys a similar message: women are supposed to be ‘eye candy’ for the male viewer. However, in addition to this sexist interpretation, the devaluation may also relate solely to body shape, i.e. it may be ‘only’ fatphobic. There is therefore a degree of ambiguity which, coupled with the humorous wink, can be perceived differently by different recipients. At the same time, this ambiguity gives disseminators an opportunity to deny misogyny. In principle, however, physical devaluation is a widespread form of misogyny, and women are disproportionately affected by fatphobic hostility.

Elements, rhetoric, and style of hate memes

While deciphering narratives requires a great deal of interpretation, it is even less clear what exactly is depicted in these memes that evokes different perceptions and attributions of meaning and allows for broad receptivity. To better understand how the resonance of memes works, we also coded individual visual elements such as people, objects, animals, or symbols. This allows images to be described as systematically and objectively as possible and can help to contextualise visual carriers of devaluation and to identify implicit patterns of persuasion. In this regard, studies show that the frequency of certain elements provides clues to the argumentative structure of memes (Bogerts and Fielitz 2019).

The distribution of image elements across the analysed hate categories initially reveals predictable patterns. For instance, in racist and anti-Muslim memes, the most frequently classified visual element is a non-white man (11% and 20%, respectively). Antisemitic memes most often depict economically influential individuals (12%) or celebrities (8%), while misogynistic memes most often feature women (20%).

However, a closer look reveals less obvious visual elements that provide a deeper understanding of ideological dynamics. In the LGBTQ category, for example, it is notable that symbols of political movements, such as the pride flag, are about as common (9%) as depictions of visibly trans/non-binary persons (8%). This may indicate that the visual discourse in this category is partly transphobic but also targets the movements advocating for diversity, queer feminism, or queer politics. This ambiguity and ambivalence make it difficult to distinguish between a critique of progressive politics and explicit transphobia. The creator’s intent remains blurry, as it is unclear in which context a specific meme was first shared.

This highlights a central characteristic of memes: they rarely convey clear messages and almost never construct arguments, but instead play with ambiguity and work associatively (Shifman 2013). In the case of LGBTQ hostility, this means that memes often exist in undefined grey areas or border zones. The frequency of certain image elements also shows that these memes often represent not only the target group itself but also other social groups. For example, the frequent appearance of white women and children in memes related to racism (7% and 5%) and LGBTQ hostility (8% and 7%) suggests that memes in these categories commonly build threat scenarios into their messaging: racialised migrant men are portrayed as a threat to white women, and LGBTQ persons as a threat to children and the traditional institution of the family.

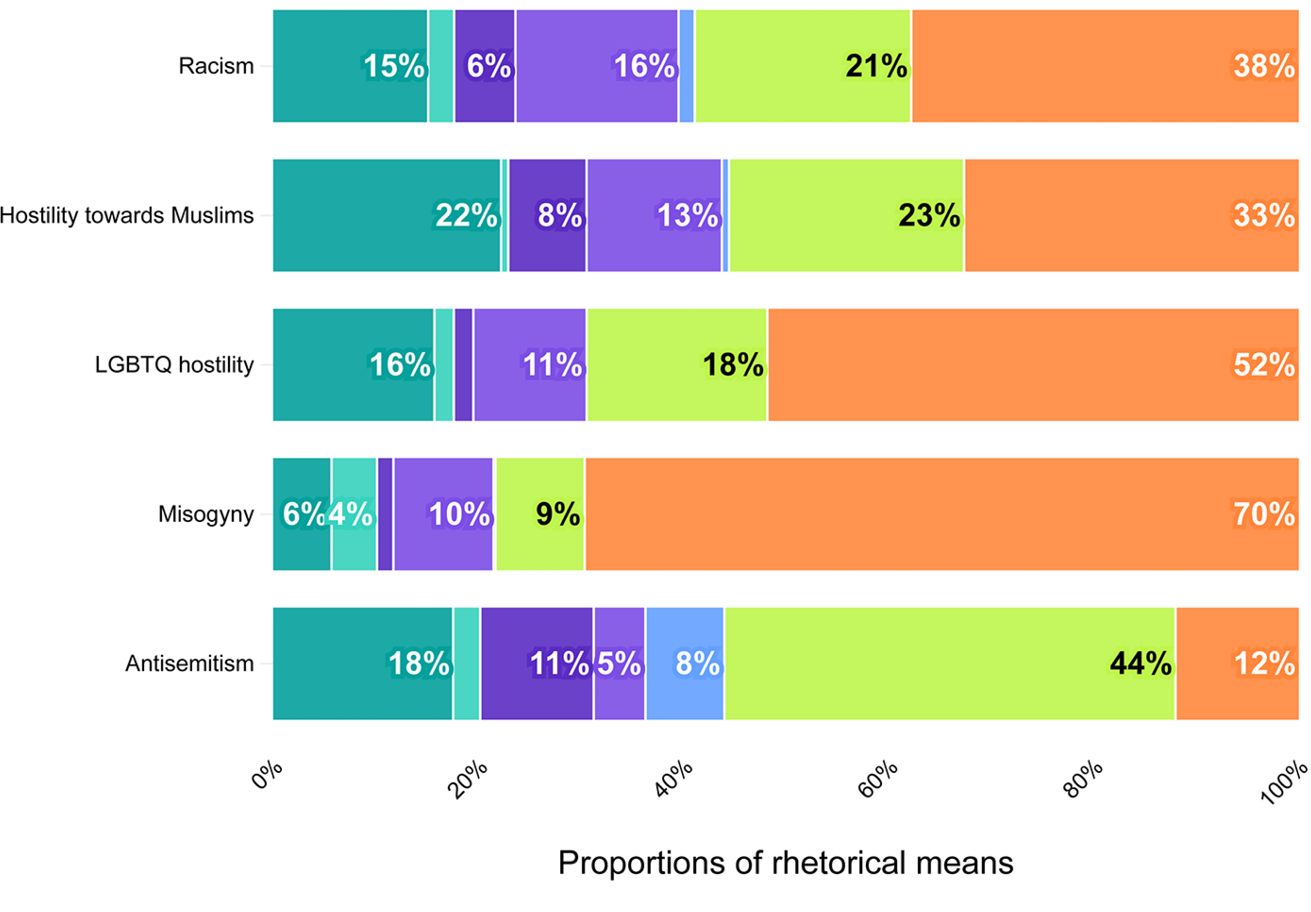

The contextualisation of hate memes must also include the means of persuasion, in particular the characteristic rhetoric within each category. For instance, the rhetoric of ‘outrage’ is more pronounced in anti-Muslim memes (22%) than in other hate memes, and racist memes use the rhetoric of ethnic superiority and pride (16%) more than memes in other hate categories do. The prevalence of humour within the categories is particularly striking. Both LGBTQ-hostile (52%) and misogynistic memes (70%) use humour more often than other categories, while humour seems to play a comparatively small role in antisemitic memes (12%).

Fig. 8.8 Rhetoric of hate categories segmented by percentage

Antisemitic memes mainly try to convince with alleged facts (44%), by far the most common rhetorical style in this category. This is in line with theoretical approaches in antisemitism research, which understand the phenomenon primarily as a “rumour about the Jews” (Adorno 2005: 110). Conspiracy myths and ideologies are often an integral part of modern antisemitism, as they provide a model for explaining the complexity of the modern world (Rathje 2021). Antisemitism has a long tradition of being expressed through visual imagery (Kirschen 2010), which has adapted its form over time and can be seen in today’s digital cultures (Zannettou et al. 2018).

Particularly recurrent are images of figures who became especially well known in the course of the protests against the COVID-19 measures, such as software billionaire Bill Gates or investor George Soros. These individuals were repeatedly accused of having secret plans, ranging from an alleged ‘population replacement’ to remote control through microchips supposedly implanted via vaccination. An example of this is the meme below. It shows a cartoon-like depiction of the economist Klaus Schwab, founder of the World Economic Forum (WEF). The word “reset” can be read as an allusion to the conspiracy narrative of the Great Reset, an initiative of the World Economic Forum that aimed to introduce economic reforms in the face of the COVID-19 pandemic. The conspiracy interpretation of the project, however, sees a secret alliance of elites behind it, who allegedly invented a pandemic to enslave the population and achieve global power. This conspiracy theory combines and continues existing narratives such as “The Great Replacement” and “New World Order”. The pop-cultural iconography of the meme suggests a connection to John Carpenter’s 1988 film They Live, in which the protagonist discovers that many of the world’s authority figures are actually malevolent aliens controlling ordinary people for their own ends. While memes require a certain level of literacy to decipher, they leave interpretation to their recipients. Whether the Schwab meme is a pop-cultural and consumer-critical allusion or is intended to portray him as a manipulative string-puller who subjugates the world’s population is in the eye of the beholder.

Fig. 8.9 Meme about the conspiracy theory of the “great reset” with antisemitic connotations

Antisemitism is often expressed in coded language or metaphorically, not least because the accusation carries particular weight after the Holocaust and its expression is considered taboo in large parts of German society. Whereas in the 19th century the term “anti-Semite” was freely chosen as a self-description by open enemies of the Jews, today hardly anyone openly admits to this resentment. At the same time, we can see that antisemitism is deployed in different ways across the actor groups in our sample. Neo-Nazi channels, for example, dispense with humour and use the rhetoric of superiority to devalue Jews (and alleged Jews), while conspiracy theory channels deal with alleged facts in a more abstract and much more coded way. Open threats of violence are very rarely expressed in antisemitic memes on Telegram, which is probably related to the possibility of legal consequences in Germany.

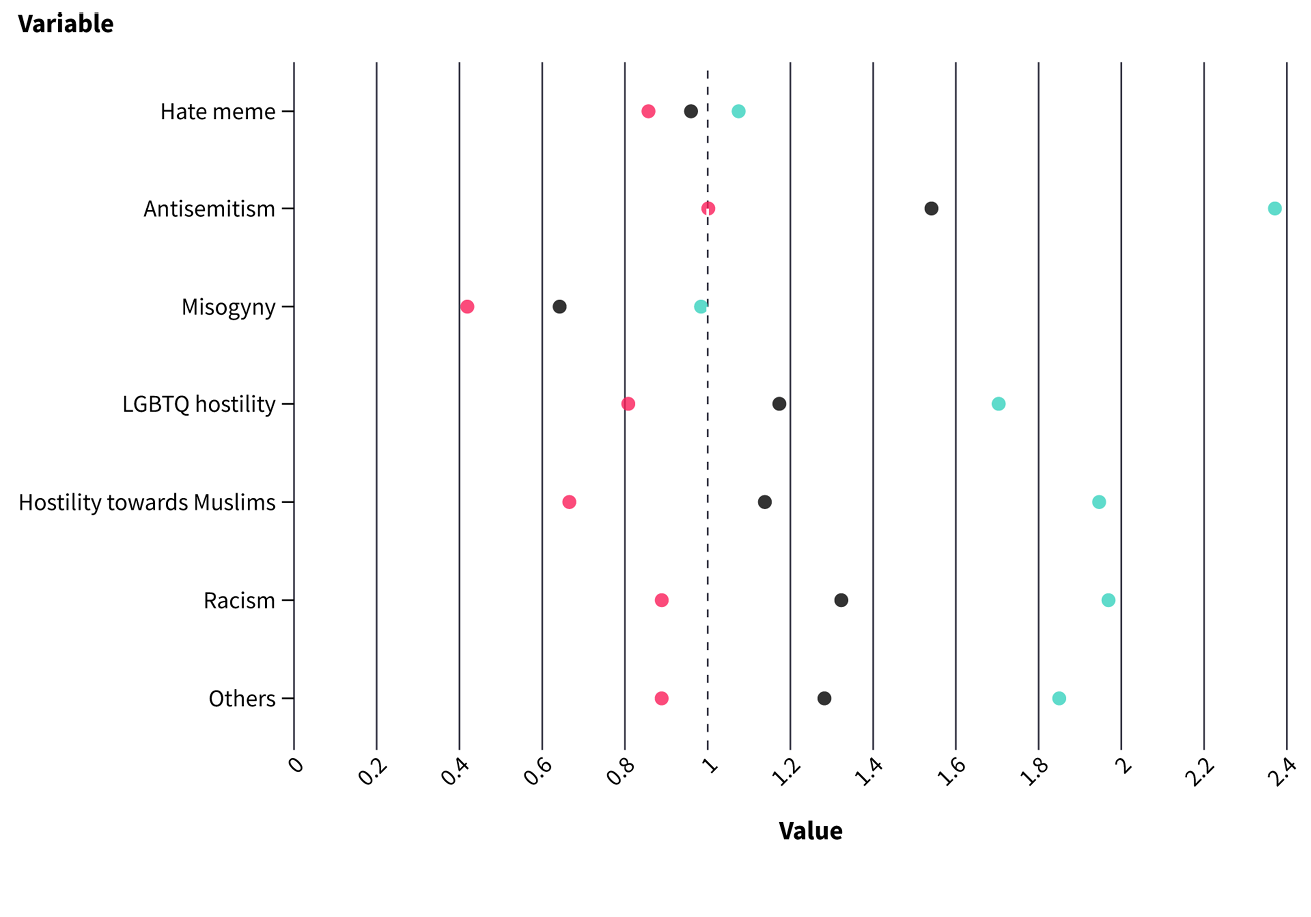

Measuring virality through regression analysis

Moving away from the concrete composition of visual content, we set out to measure whether (and which) hate memes go viral on Telegram. The analysis is based on 2,158 messages. For comparison, a random sample of 6,474 messages from 322 channels in which memes were also shared served as a reference dataset, giving a combined total of 8,632 messages. Two models were developed for this analysis. The first regression model (Fig. 8.10, above the red line) shows that the independent variable meme/no meme has almost no effect on how often messages are shared between channels. The second model (Fig. 8.10, below the red line) shows that hate category, as an independent variable, has only a small effect on sharing. Specifically, misogynistic memes are shared less on average, while antisemitic memes are shared more than memes in other hate categories.

Fig. 8.10 Negative binomial regression on the influence of meme type on the number of shares⁵

At first glance, these results seem counterintuitive: while we have seen that women are particularly targeted by memetic hate communication, the regression models indicate that misogynistic memes spread less in the analysed Telegram channels than memes of other hate categories. One possible explanation is that these memes are so ubiquitous and familiar that there is little incentive to forward them. By contrast, antisemitic memes are forwarded more often than other hate memes, even though they are much less present in the dataset overall. This may be because antisemitic memes carry a certain newsworthiness that distinguishes them from the derogatory everyday humour of most hate memes.
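The negative binomial model behind Fig. 8.10 can be illustrated with a minimal Python sketch. It is not the authors' specification: the dispersion parameter `alpha` is held fixed rather than estimated, the varying channel intercepts and the subscriber control described in note 5 are omitted, and all function names and toy data are hypothetical. Coefficients are estimated by iteratively reweighted least squares; exponentiating them gives incidence rate ratios, so a ratio below 1 would correspond to a category being shared less often (as reported for misogynistic memes) and a ratio above 1 to a category being shared more often (as for antisemitic memes).

```python
import math
from typing import List, Sequence


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve the small linear system a @ x = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_nb2(X: Sequence[Sequence[float]], y: Sequence[float], alpha: float,
            max_iter: int = 100, tol: float = 1e-10) -> List[float]:
    """Estimate beta in log(E[y]) = X @ beta with NB2 variance mu + alpha * mu**2.

    Iteratively reweighted least squares with the dispersion alpha held fixed
    (a simplifying assumption). Column 0 of X is assumed to be the intercept.
    """
    k = len(X[0])
    beta = [0.0] * k
    beta[0] = math.log(max(sum(y) / len(y), 1e-8))  # start at the log of the mean count
    for _ in range(max_iter):
        xtwx = [[0.0] * k for _ in range(k)]
        xtwz = [0.0] * k
        for row, yi in zip(X, y):
            eta = sum(b * v for b, v in zip(beta, row))
            mu = math.exp(eta)
            w = mu / (1.0 + alpha * mu)  # IRLS weight for the log link under NB2 variance
            z = eta + (yi - mu) / mu     # working response
            for i in range(k):
                xtwz[i] += row[i] * w * z
                for j in range(k):
                    xtwx[i][j] += row[i] * w * row[j]
        new_beta = solve(xtwx, xtwz)
        converged = max(abs(nb - ob) for nb, ob in zip(new_beta, beta)) < tol
        beta = new_beta
        if converged:
            break
    return beta


def incidence_rate_ratios(beta: Sequence[float]) -> List[float]:
    """exp(beta): multiplicative change in expected shares per unit change of a predictor."""
    return [math.exp(b) for b in beta]


if __name__ == "__main__":
    # Hypothetical toy data: intercept column plus a 0/1 indicator for one meme category.
    X = [[1, 0], [1, 0], [1, 1], [1, 1]]
    y = [2, 4, 5, 7]
    beta = fit_nb2(X, y, alpha=0.5)
    print([round(r, 4) for r in incidence_rate_ratios(beta)])  # → [3.0, 2.0]
```

With a single binary predictor the fitted means are simply the group means (3 and 6 shares), so the intercept ratio is 3 and the category ratio is 2 regardless of `alpha`, which makes the toy output easy to check by hand.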

Discussion

The analysis has shown that the visual representation of group-focused hate is multifaceted and opens up new perspectives on image-based forms of far-right “politics from below”. Of the analysed images, 5.3% were classified as derogatory towards one or more of the predefined groups. The results of the regression analysis suggest that content conveying group-related hate rarely goes viral. One reason for the limited spread of hate memes in the German Telegram sphere may be the design of the platform. Communication via channels is one-to-many, which excludes members from a collaborative process of meme creation. In addition, the target and user groups on Telegram are likely to differ significantly from those on platforms considered to be meme hubs, such as 4chan, imgur, or 9GAG. As a text-centred hybrid medium, Telegram does not reward images as much as platforms with algorithmically organised news feeds do. Instead, frequent, attention-grabbing posting encourages the DIY mentality inherent in participatory forms of image production, so that images are created and shared according to users’ own standards.

This tendency is reinforced by a large amount of banal content featuring crude jokes and rude humour. The visual content on this instant messaging service is more likely to address borderline territory associated with chauvinistic everyday humour than to promote explicitly extremist worldviews. In the case of online misogyny and LGBTQ hostility, for example, we often find subtle expressions of discrimination and hate in the form of gender stereotyping. Many of these memes operate in a grey area, as they can be read both as a critique of gender and sexual identity policies and as a devaluation of the people to whom these policies apply. This ambiguity, or openness to multiple interpretations, allows memes to activate several trigger points in an emotionally charged debate, particularly around the right to gender self-determination. In this way, group-related enmity often serves as a pretext for criticising liberal democracy as a whole.

Conclusion

This exploratory study has examined the memetic communication of group-focused hate as a form of everyday discrimination, but also of far-right politics. In our multilevel analysis, we found that the target groups of these memes are addressed differently in terms of frequency, rhetoric, and use of visual elements. Most of the imagery we analysed contains subtle forms of hatred and discrimination rather than the explicit threats of (physical) violence against marginalised people that one might expect. On the one hand, this may be due to the format of memes as a truly ambiguous form of communication, which allows for different interpretations and thus appeals to different audiences. On the other hand, the alternative right-wing Telegram scene in Germany is subject to much stricter criminal law than its counterparts in, for example, the US, which is why its public communication is much more coded than elsewhere. That said, we are aware that such punishable content is shared anonymously on other, less moderated platforms such as imageboards. Our findings must therefore be understood in the context of a specific platform culture. Furthermore, we need to acknowledge potential biases in our interpretations of the memes. Although coders were trained to be as objective as possible, many coding decisions are influenced by individual sensitivity to forms of degradation, prior knowledge, and political beliefs. The results must therefore always be read as reflecting how an academically socialised audience reads the memes. In addition, our material reflects the period we studied. It began after the coronavirus protests had died down in the winter of 2021/22 and extended until the onset of the Alternative für Deutschland’s (AfD) breakthrough in the summer of 2023, which created a much more hostile political climate.
As memes relate to current events, our findings only provide insights into an episode of memetic communication of group-based enmity on a particular platform at a particular time. A comparable snapshot of other platforms would presumably look different.

Nevertheless, by not only comparing statistical data but also qualitatively contextualising the elements of visual discourse, our study offers comparative insights into the narrative packaging of memes and into the rhetorical and aesthetic means used to communicate group-based enmity. This calls for further research on comparability across time periods and political milieus in order to better understand the specificity of memes as a form of far-right mobilisation.

References

Adorno, Theodor W., 2005. Minima Moralia: Reflections on a Damaged Life. London/New York: Verso.

Askanius, Tina, 2021. “On Frogs, Monkeys, and Execution Memes: Exploring the Humor-Hate Nexus at the Intersection of Neo-Nazi and Alt-Right Movements in Sweden”. Television & New Media, 22 (2), 147–165. https://doi.org/10.1177/1527476420982234

―, and Nadine Keller, 2021. “Murder fantasies in memes: fascist aesthetics of death threats and the banalization of white supremacist violence”. Information, Communication & Society, 24 (16), 2522–2539. https://doi.org/10.1080/1369118X.2021.1974517

Bell, Philip, 2004. “Content Analysis of Visual Images”. In: T. van Leeuwen and C. Jewitt (eds), The Handbook of Visual Analysis. London: Sage, 10–34.

Beran, Dale, 2019. It Came from Something Awful: How a New Generation of Trolls Accidentally Memed Donald Trump into the White House. New York: St. Martin’s Press.

Beyer, Jessica Lucia, 2014. Expect Us. Online Communities and Political Mobilization. Oxford: Oxford University Press.

Bogerts, Lisa, 2022. The Aesthetics of Rule and Resistance. Analyzing Political Street Art in Latin America. New York: Berghahn Books Incorporated.

―, and Maik Fielitz, 2019. “‘Do You Want Meme War?’. Understanding the Visual Memes of the German Far Right”. In: Maik Fielitz and Nick Thurston (eds), Post-Digital Cultures of the Far Right. Online Actions and Offline Consequences in Europe and the US. Bielefeld: Transcript, 137–153.

―, and Maik Fielitz, 2023. “Fashwave. The Alt-Right’s Aesthetization of Politics and Violence”. In: Sarah Hegenbart and Mara Kölmel (eds), Dada Data. Contemporary Art Practice in the Era of Post-Truth Politics. London: Bloomsbury Visual Arts, 230–245.

Brodie, Richard, 2009. Virus of the Mind. The New Science of the Meme. Seattle: Hay House.

Buehling, Kilian and Annett Heft, 2023. “Pandemic Protesters on Telegram: How Platform Affordances and Information Ecosystems Shape Digital Counterpublics”. Social Media + Society, 9 (3), Article 20563051231199430. https://doi.org/10.1177/20563051231199430

Cicchetti, Domenic V. and Sara A. Sparrow, 1981. “Developing criteria for establishing interrater reliability of specific items: applications to assessment of adaptive behavior”. American Journal of Mental Deficiency, 86 (2), 127–137.

Crawford, Blyth and Florence Keen, 2020. “Memetic irony and the promotion of violence in chan cultures”. Centre for Research and Evidence on Security Threats, London.

Dafaure, Maxime, 2020. “The ‘Great Meme War’: the Alt-Right and its Multifarious Enemies”. Angles. New Perspectives on the Anglophone World, (10), 1–28.

Dawkins, Richard, 2006. The Selfish Gene. 30th Anniversary edn. New York: Oxford University Press.

Doerr, Nicole, 2021. “The Visual Politics of the Alternative for Germany (AfD): Anti-Islam, Ethno-Nationalism, and Gendered Images”. Social Sciences, 10 (1), 20. https://doi.org/10.3390/socsci10010020

Donovan, Joan, Emily Dreyfuss and Brian Friedberg, 2022. Meme Wars. The Untold Story of the Online Battles Upending Democracy in America. New York: Bloomsbury Publishing.

Gagnon, Audrey, 2023. “Far-right virtual communities: Exploring users and uses of far-right pages on social media”. Journal of Alternative & Community Media, 7 (2), 117–135. https://doi.org/10.1386/jacm_00108_1

Galip, Idil, 2024. “Methodological and epistemological challenges in meme research and meme studies”. Internet Histories, 1–19. https://doi.org/10.1080/24701475.2024.2359846

von Gehlen, Dirk, 2020. Meme. Muster digitaler Kommunikation. Berlin: Verlag Klaus Wagenbach.

Grundlingh, L., 2018. “Memes as speech acts”. Social Semiotics, 28 (2), 147–168. https://doi.org/10.1080/10350330.2017.1303020

Hokka, Jenni and Matti Nelimarkka, 2019. “Affective economy of national-populist images: Investigating national and transnational online networks through visual big data”. New Media & Society, August. https://doi.org/10.1177/1461444819868686

Jost, Pablo and Leyla Dogruel, 2023. “Radical Mobilization in Times of Crisis: Use and Effects of Appeals and Populist Communication Features in Telegram Channels”. Social Media + Society, 9 (3), Article 20563051231186372. https://doi.org/10.1177/20563051231186372

Kirschen, Yaakov, 2010. “Memetics and the Viral Spread of Antisemitism Through ‘Coded Images’ in Political Cartoons”. Institute for the Study of Global Antisemitism and Policy.

Landis, J. Richard and Gary G. Koch, 1977. “An Application of Hierarchical Kappa-type Statistics in the Assessment of Majority Agreement among Multiple Observers”. Biometrics, 33 (2), 363–374.

Macklin, Graham, 2019. “The Christchurch Attacks: Livestream Terror in the Viral Video Age”. CTC Sentinel, 12 (6), 18–29.

Marwick, Alice, 2013. “Memes”. Contexts, 12 (4), 12–13.

McSwiney, Jordan, Michael Vaughan, Annett Heft and Matthias Hoffmann, 2021. “Sharing the hate? Memes and transnationality in the far right’s digital visual culture”. Information, Communication & Society, 1–20. https://doi.org/10.1080/1369118X.2021.1961006

Miller-Idriss, Cynthia, 2020. Hate in the Homeland. The New Global Far Right. Princeton: Princeton University Press.

Mortensen, Mette and Christina Neumayer, 2021. “The playful politics of memes”. Information, Communication & Society, 24 (16), 2367–2377. https://doi.org/10.1080/1369118X.2021.1979622

Nowotny, Joanna and Julian Reidy, 2022. Memes – Formen und Folgen eines Internetphänomens. Bielefeld: Transcript.

Phillips, Whitney, 2015. This is Why We Can’t Have Nice Things. Mapping the Relationship Between Online Trolling and Mainstream Culture. Cambridge: MIT Press.

Rathje, Jan, 2021. “‘Money Rules the World, but Who Rules the Money?’ Antisemitism in post-Holocaust Conspiracy Ideologies”. In: Armin Lange, Kerstin Mayerhofer, Dina Porat and Lawrence H. Schiffman (eds), Confronting Antisemitism in Modern Media, the Legal and Political Worlds. Berlin/Boston: De Gruyter, 45–68.

Regier, Darrel A., William E. Narrow, Diana E. Clarke, Helena C. Kraemer, S. Janet Kuramoto, Emily A. Kuhl and David J. Kupfer, 2013. “DSM-5 Field Trials in the United States and Canada, Part II: Test-Retest Reliability of Selected Categorical Diagnoses”. American Journal of Psychiatry, 170 (1), 59–70.

Rose, Gillian, 2016. Visual Methodologies. An Introduction to Researching with Visual Materials. 4th edn. London: Sage.

Schmid, Ursula Kristin, 2023. “Humorous hate speech on social media: A mixed-methods investigation of users’ perceptions and processing of hateful memes”. New Media & Society, Article 14614448231198169. https://doi.org/10.1177/14614448231198169

―, Heidi Schulze and Antonia Drexel, 2023. “Memes, humor, and the far right’s strategic mainstreaming”. Information, Communication & Society. https://doi.org/10.1080/1369118X.2024.2329610

Schulze, Heidi, Julian Hohner, Simon Greipl, Maximilian Girgnhuber, Isabell Desta and Diana Rieger, 2022. “Far-right conspiracy groups on fringe platforms: a longitudinal analysis of radicalization dynamics on Telegram”. Convergence: The International Journal of Research into New Media Technologies, 28 (4), 1103–1126. https://doi.org/10.1177/13548565221104977

Shifman, Limor, 2013. “Memes in a Digital World: Reconciling with a Conceptual Troublemaker”. Journal of Computer-Mediated Communication, 18 (3), 362–377. https://doi.org/10.1111/jcc4.12013

―, 2014. Memes in Digital Culture. Cambridge: MIT Press.

Thorleifsson, Cathrine, 2021. “From cyberfascism to terrorism: On 4chan/pol/ culture and the transnational production of memetic violence”. Nations and Nationalism, 28, 286–301. https://doi.org/10.1111/nana.12780

Tuters, Marc, 2019. “LARPing & Liberal Tears. Irony, Belief and Idiocy in the Deep Vernacular Web”. In: Maik Fielitz and Nick Thurston (eds), Post-Digital Cultures of the Far Right. Online Actions and Offline Consequences in Europe and the US. Bielefeld: Transcript, 37–48.

Winter, Aaron, 2019. “Online Hate: From the Far-Right to the ‘Alt-Right’ and from the Margins to the Mainstream”. In: Karen Lumsden and Emily Harmer (eds), Online Othering. Exploring Digital Violence and Discrimination on the Web. Cham: Springer, 39–63.

Zannettou, Savvas, Tristan Caulfield, Jeremy Blackburn, Emiliano De Cristofaro, Michael Sirivianos, Gianluca Stringhini and Guillermo Suarez-Tangil, 2018. “On the Origins of Memes by Means of Fringe Web Communities”. https://doi.org/10.48550/arXiv.1805.12512

Zick, Andreas, Carina Wolf, Beate Küpper, Eldad Davidov, Peter Schmidt and Wilhelm Heitmeyer, 2008. “The Syndrome of Group-Focused Enmity: The Interrelation of Prejudices Tested with Multiple Cross-Sectional and Panel Data”. Journal of Social Issues, 64 (2), 363–383. https://doi.org/10.1111/j.1540-4560.2008.00566.x

Zidani, Sulafa, 2021. “Messy on the inside: internet memes as mapping tools of everyday life”. Information, Communication & Society, 24 (16), 2378–2402. https://doi.org/10.1080/1369118X.2021.1974519

1 For more information on the methodology of the Telegram monitoring, see: https://machine-vs-rage.bag-gegen-hass.net/methodischer-annex-01/

2 This typology encompasses the following German-specific subgroups: Neo-Nazis, Sovereign Citizens (Reichsbürger), Populist Right, New Right, Extreme Right, Conspiracy Ideologues, Esotericists, QAnon, Anti-Vax activists and the Querdenken movement.

3 The choice of reliability coefficients was based on methodological recommendations (Holsti reliability coefficient, Krippendorff’s alpha) and popularity in the field (Cohen’s kappa). According to the literature on intercoder reliability, values classified as “excellent” (greater than 0.8), “good” (0.6 to 0.8), and “moderate” (0.4 to 0.6) are considered acceptable (Cicchetti and Sparrow 1981, Landis and Koch 1977, Regier et al. 2013). For the purposes of this research, a conservative mixed approach was used. Significant differences in reliability scores were observed in the annotation of hate categories [1], partly because more than 95% of the data were not assigned to any category in this step. Disagreements in annotation were resolved by at least two researchers. A notable trend across all hate categories is that narratives [2] requiring contextual literacy (e.g. knowledge of conspiracy theories) were also particularly difficult for researchers to annotate, resulting in lower reliability scores. In all subsequent analysis steps [3–5], reliability scores ranged from moderate to excellent agreement. Here too, items requiring background knowledge were significantly more difficult to annotate consistently than descriptive items such as objects.

4 All quoted texts in this section were translated by the authors; in the original memes they are in German. All memes reproduced here have been graphically edited to avoid uncritical reproduction and to protect the anonymity of the people depicted.

5 Negative binomial regression models with fixed-effect estimation and varying intercepts for 322 channels; the baseline model (above the red line) includes only the hate meme variable; the full model (below the red line) includes all meme types; all models are controlled for number of subscribers.
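Note 3 names Holsti’s coefficient and Cohen’s kappa and attributes differences in reliability scores partly to the fact that more than 95% of the data were left uncategorised. The minimal Python sketch below, with hypothetical function names and toy data, illustrates one plausible reading of that remark: on heavily skewed data, raw agreement (Holsti) can be high while chance-corrected agreement (kappa) is close to zero or even negative. Krippendorff’s alpha, also named in note 3, is omitted for brevity, and the band labels simply follow the thresholds quoted there.

```python
from collections import Counter
from typing import Hashable, Sequence


def holsti(coder_a: Sequence[Hashable], coder_b: Sequence[Hashable]) -> float:
    """Holsti's coefficient 2M / (N1 + N2), where M is the number of matching codes."""
    if len(coder_a) != len(coder_b):
        raise ValueError("both coders must annotate the same units")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return 2 * matches / (len(coder_a) + len(coder_b))


def cohens_kappa(coder_a: Sequence[Hashable], coder_b: Sequence[Hashable]) -> float:
    """Cohen's kappa: observed agreement corrected for agreement expected by chance."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must annotate the same, non-empty set of units")
    n = len(coder_a)
    p_observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    # Chance agreement: product of both coders' marginal frequencies, summed over categories.
    p_chance = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / (n * n)
    if p_chance == 1:
        raise ValueError("kappa is undefined when both coders use a single category")
    return (p_observed - p_chance) / (1 - p_chance)


def classify(score: float) -> str:
    """Map a coefficient to the bands quoted in note 3 (>0.8, 0.6-0.8, 0.4-0.6)."""
    if score > 0.8:
        return "excellent"
    if score >= 0.6:
        return "good"
    if score >= 0.4:
        return "moderate"
    return "below the acceptable range"


if __name__ == "__main__":
    # Hypothetical skewed sample: each coder leaves 19 of 20 units uncategorised,
    # but assigns the single "hate" code to a different unit.
    a = ["none"] * 19 + ["hate"]
    b = ["hate"] + ["none"] * 19
    print(classify(holsti(a, b)), round(cohens_kappa(a, b), 3))  # → excellent -0.053
```

In this toy example the two coders agree on 18 of 20 units, so Holsti’s coefficient (0.9) falls in the “excellent” band, yet kappa is slightly negative because almost all of that agreement is expected by chance when one category dominates.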